T22 uses a technique that has no name, because I made it up myself (though others have probably tried similar things). Or well, I copied ideas from existing techniques, mangled them through countless attempts of my own, took some other advice, and added some poohah on top. But if I had to give it a name... "Ambi-Volume-Texture-Cone-Tracing"? Doesn't sound very neat... Anyway, let's explain how it works. Oh, and don't expect a perfect solution. It doesn't look as good as Crassin's VCT (Voxel Cone Tracing), although you could say they "cheated" a bit with powerful computers and a simple scene. Sponza Theatre a simple scene?! Sure, it has quite a lot of polygons, but it doesn't have very thin walls (which are prone to light leaks), and above all, it's just a single scene. In practice, game worlds are much bigger. For example, you can't bake a whole GTA city into (GPU) memory, so you have to chop the world into chunks and only process the geometry near the camera. That is possible with VCT, but updating the octree that this technique requires is very expensive.

In practice, all realtime G.I. solutions have problems. Either they don't work well with moving objects (imagine a door closing and blocking light) or destructible geometry, they only work on small fixed worlds, they require too much power, they lack accuracy, or they just look terrible. Or all of that. Pre-calculated solutions, on the other hand, are much faster and offer better quality, but well, they aren't realtime. That means the lighting won't adapt for shit when the environment or lamps change. Unless you know exactly which lights will change and pre-calculate multiple situations. I don't know how GTA V handles G.I., but I can imagine they bake the lighting for a couple of scenarios (day, night, cloudy, ...), based on dominant light sources such as the Sun, the Moon, and pre-defined (street)lights.

For T22 I'm tempted to fall back on pre-baked lighting as well. Simply because it looks better and performs better. And there's an often forgotten argument: it gives you more control. Especially in an unreal horror game like this, you want to play with atmospheric settings. Make rooms much darker than they should be, use high contrasts, or add a stinky orange ambient to a corridor while there are no orange lamps at all. With realtime G.I., it's hard to tell exactly what the result will be. Especially if the results aren't 100% accurate either. Sometimes it looks good, sometimes it doesn't, and you'll end up adding cheap tricks that completely bypass the (expensive!) G.I. But nevertheless... making realtime G.I. for games is as attractive as finding the magic recipe for gold. It's a prestige thing.

Sponza Theatre, not rendered but a real photo for a change. Notice that the whole structure is lit, more or less, even though the light only comes in via the open roof. That's the kind of lighting we want to achieve. Realtime.

Not quite the real thing yet, but still one of the most promising realtime G.I. attempts so far: Voxel Cone Tracing by Cyril Crassin. It seems UDK 4 is adopting this technique as well.

Voxel-Cone-Tracing convinced me again that realtime G.I. is possible, and with good results. So I gave it a try. The idea in a nutshell:

1- Voxelize geometry - put geometry in octree (in GPU memory, using Compute Shaders)

2- Light voxelized geometry - add outgoing light in octree cells

3- Mipmap octree

4- For each pixel on the screen, sample bounced light with a couple of cone-shaped rays from the mipmapped octree

1- Voxelize your geometry

Like making a lower-resolution Lego/Minecraft block version of your scenery & objects. Store those blocks in an octree: a tree structure for storing and searching 3D data, in which each cell can be subdivided into 8 smaller child cells. To avoid needing tons of memory, the octree reduces resolution over distance (thus bigger blocks for distant geometry).

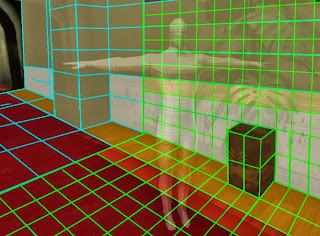

Excuse the lousy drawing, I was too lazy to get the perspective right. But you can see roughly how a scene would get "voxelized". By rendering the "whole" scene (whatever could affect the GI in your view) in slices, you can get the whereabouts of your geometry and its properties, such as normals and diffuse/specular/emissive colors. Note that I skipped the plant leaves and the puppet. Of course you can voxelize those too, but be aware! The moving puppet would constantly change the octree.

Why voxelize, and why octrees? VCT is based on raymarching. Each pixel on the screen samples incoming light by sending some (cone) rays into the scene, so we need to check where those rays intersect geometry. Octrees are an efficient way to quickly test for collisions. Memory is limited though, so we can't store each and every tiny molecule. Instead we store bigger blocks and use an octree so we only reserve memory for locations that actually contain geometry, rather than for each and every cubic meter. Once we have an octree, we can insert geometry data, light fluxes, and any other data we may need later on.
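To make the "only reserve memory where there is geometry" idea concrete, here is a minimal Python sketch of a sparse octree, assuming geometry arrives as surface points. The names `OctreeNode`, `insert` and `count_nodes` are hypothetical; a real GPU version would store nodes in flat buffers filled by shaders rather than in Python objects.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class OctreeNode:
    # Hypothetical node layout: 8 optional children plus a "contains geometry" flag.
    children: List[Optional["OctreeNode"]] = field(default_factory=lambda: [None] * 8)
    filled: bool = False


def child_index(p: Tuple[float, float, float], center: List[float]) -> int:
    # Bit 0 = upper X half, bit 1 = upper Y half, bit 2 = upper Z half.
    return sum(1 << axis for axis in range(3) if p[axis] >= center[axis])


def insert(root: OctreeNode, p, origin, size: float, max_depth: int) -> None:
    """Mark the leaf containing point p, creating nodes only along its path."""
    node, origin = root, list(origin)
    for _ in range(max_depth):
        size /= 2.0
        center = [o + size for o in origin]
        i = child_index(p, center)
        if node.children[i] is None:
            node.children[i] = OctreeNode()
        node = node.children[i]
        for axis in range(3):
            if i & (1 << axis):
                origin[axis] += size
    node.filled = True


def count_nodes(node: OctreeNode) -> int:
    return 1 + sum(count_nodes(c) for c in node.children if c is not None)


root = OctreeNode()
insert(root, (1.5, 1.5, 1.5), (0.0, 0.0, 0.0), 16.0, 4)     # point near one corner
insert(root, (14.5, 14.5, 14.5), (0.0, 0.0, 0.0), 16.0, 4)  # point near the opposite corner
print(count_nodes(root))  # 9 nodes, versus 16**3 = 4096 cells for a dense grid
```

Empty space never gets nodes; the price is that every lookup has to walk down the tree, which is the traversal cost discussed further on.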

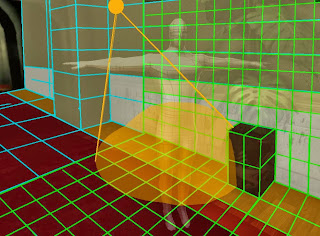

2- Compute the direct lighting on those voxels

As you normally would when lighting 3D stuff, you compute the direct lighting for every voxel. You calculate how much light a voxel reflects back into the world ("diffuse light"), so another surface can pick it up again (indirect lighting).

Papers never explain how they handle large numbers of lights; usually you only see 1 or 2 big light sources, that stupid Cornell box, or an outdoor scene with only the sun. But you can handle multiple lights of course, and the good news is that there are relatively few voxels (unless you use very fine resolutions). For each octree cell that contains geometry, you calculate the incoming fluxes from the 6 main directions. Optionally, you could use Spherical Harmonics to reduce the number of floats needed to store this information. So now your octree cells contain this info: geometry yes/no (or a density percentage), and the diffuse RGB reflected into the -X, +X, -Y, +Y, -Z and +Z directions. In other words, you have a low-res, directly lit representation of your world in video card memory. This is updated every cycle, by the way.
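A minimal sketch of what one lit voxel could store, assuming a plain Lambert model: sum the direct light hitting the voxel, multiply by its albedo, then spread the result over the six axis directions, weighted by how much each axis faces the surface normal. The weighting and normalization scheme and the `light_voxel` name are assumptions for illustration, not necessarily what VCT or T22 does; shadowing is ignored.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]

# Same order as in the text: -X, +X, -Y, +Y, -Z, +Z
AXES: List[Vec3] = [(-1, 0, 0), (1, 0, 0), (0, -1, 0), (0, 1, 0), (0, 0, -1), (0, 0, 1)]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def light_voxel(normal: Vec3, albedo: Vec3, lights: List[Tuple[Vec3, Vec3]]) -> List[Vec3]:
    """Return the diffuse RGB reflected toward -X, +X, -Y, +Y, -Z and +Z.

    `lights` holds (unit direction toward the light, RGB intensity) pairs.
    Shadowing is ignored in this sketch.
    """
    irradiance = [0.0, 0.0, 0.0]
    for direction, color in lights:
        n_dot_l = max(0.0, dot(normal, direction))  # Lambert cosine term
        for c in range(3):
            irradiance[c] += color[c] * n_dot_l
    reflected = [albedo[c] * irradiance[c] for c in range(3)]

    # Only axes on the side the surface faces get light; normalize the weights
    # so the six slots together hold the reflected amount exactly once.
    weights = [max(0.0, dot(normal, axis)) for axis in AXES]
    total = sum(weights) or 1.0
    return [tuple(r * w / total for r in reflected) for w in weights]


# A floor voxel (normal +Y) lit from straight above:
out = light_voxel((0.0, 1.0, 0.0), (0.5, 0.5, 0.5), [((0.0, 1.0, 0.0), (1.0, 1.0, 1.0))])
print(out[3])  # (0.5, 0.5, 0.5) goes to +Y; the other five slots stay zero
```

Six RGB triplets per voxel is 18 floats; that storage cost is what the Spherical Harmonics remark is about.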

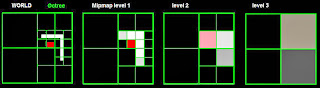

3- Mipmap the octree

On a 2D texture, mipmapping means you halve the size, and each new pixel takes the average of 4 pixels from the original resolution. On a 3D texture the idea is the same, except that you also take the pixels from the layer above and/or below into account, so each new pixel averages 8 pixels instead of 4. And well, you could do the same for an octree. When constructing an octree, you subdivide a cell into 8 smaller cells if it contains anything of interest. So you can do the reverse as well: 1 to 8 Minecraft blocks get replaced with 1 bigger Minecraft block, made of their averages.
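The 3D-texture version of this step is easy to sketch. The Python below assumes a cubic, power-of-two grid stored as nested lists indexed `[z][y][x]`; it averages each 2x2x2 block into one cell and repeats down to 1x1x1. On a GPU this would run in a shader; the function names are just illustrative.

```python
from typing import List

Grid = List[List[List[float]]]  # indexed [z][y][x], cubic, power-of-two size


def downsample(grid: Grid) -> Grid:
    """Halve the resolution: each new cell is the average of a 2x2x2 block."""
    n = len(grid) // 2
    return [[[sum(grid[2 * z + dz][2 * y + dy][2 * x + dx]
                  for dz in (0, 1) for dy in (0, 1) for dx in (0, 1)) / 8.0
              for x in range(n)]
             for y in range(n)]
            for z in range(n)]


def build_mip_chain(grid: Grid) -> List[Grid]:
    chain = [grid]
    while len(chain[-1]) > 1:
        chain.append(downsample(chain[-1]))
    return chain


# A 4x4x4 density grid in which a single voxel is solid:
size = 4
density = [[[0.0] * size for _ in range(size)] for _ in range(size)]
density[0][0][0] = 1.0
chain = build_mip_chain(density)
print([level[0][0][0] for level in chain])  # [1.0, 0.125, 0.015625]
```

Note how a mostly empty block ends up with a small fractional density; that fractional value is what makes the stop-or-continue decision during raymarching tricky.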

Why do we need to mipmap? So we can "cone trace". See the next step.

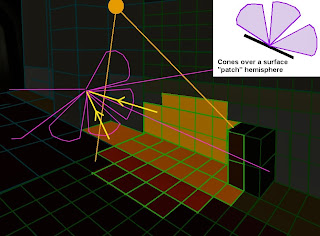

4- For each pixel on your screen, sample indirect light with Cone Tracing

The key of G.I. is gathering light coming from all directions, bounced off other surfaces. Simple theory, but extremely hard to do in realtime. A major problem is that we need to sample light from an infinite number of directions. Imagine you were a piece of concrete wall; everything you can see around you is reflecting light towards you.

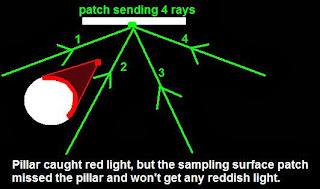

Every pixel on your screen needs to gather this information, but we can only afford a very limited number of rays per pixel, as raytracing or raymarching is expensive in general. Using just 16 rays, for example, leads to “undersampling”, as you might miss vital parts of the surrounding scene.

We can reduce this a bit by letting each pixel look in slightly different directions and blurring the end results. But the results are still grainy (as you often see with older 3D software), and you need an awfully big fucking number of rays to get really proper results --> slow.
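One possible way to let each pixel "look in slightly different directions" is to rotate a fixed ring of sample directions by a per-pixel offset, so that a 4x4 blur afterwards averages 16 differently rotated rings. The 4x4 interleave pattern, the ring layout and the 45 degree tilt are assumptions for illustration; directions are in tangent space with +Z as the surface normal.

```python
import math
from typing import List, Tuple


def cone_directions(count: int, pixel_x: int, pixel_y: int,
                    tilt_deg: float = 45.0) -> List[Tuple[float, float, float]]:
    """Ring of `count` unit directions around +Z (the surface normal).

    Hypothetical scheme: each pixel in a 4x4 tile rotates the ring by a
    different fraction (0/16 .. 15/16) of the angular gap between samples.
    """
    pattern = (pixel_x % 4) + 4 * (pixel_y % 4)  # 0..15 within the tile
    gap = 2.0 * math.pi / count
    offset = gap * pattern / 16.0
    tilt = math.radians(tilt_deg)
    dirs = []
    for i in range(count):
        phi = i * gap + offset
        dirs.append((math.sin(tilt) * math.cos(phi),
                     math.sin(tilt) * math.sin(phi),
                     math.cos(tilt)))
    return dirs


# Over one 4x4 tile, 6 samples per pixel cover 16 * 6 distinct directions.
tile = {tuple(round(c, 9) for c in d)
        for py in range(4) for px in range(4)
        for d in cone_directions(6, px, py)}
print(len(tile))
```

The blur trades the grain for smearing across neighbouring pixels, which is why it only helps "a bit".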

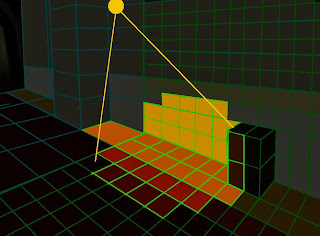

The idea behind cone-shaped rays is that you only need a few rays to sample a lot. Every couple of steps the ray marches forward, we look into a lower resolution of the mipmap we made in step 3. So, as we travel further and further, we sample averaged light from bigger blocks in the octree. Of course the accuracy suffers, but it's an efficient way to avoid missing important light sources and to gain speed. After all, we need a realtime solution, and big speed drops won't justify the few benefits we gain compared to much faster (and nicer, and easier) pre-baked solutions. Plus, fortunately, G.I. is "low frequency lighting", so the result is a blur of many incoming light fluxes anyway. For an ordinary spectator it's hard to figure out how G.I. should look, so it's forgivable to make errors… usually.

By sampling from lower (averaged, blurrier) mipmap levels as the ray travels further, we get a cone-shaped ray.
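The cone effect boils down to one relation: at distance `t` along the ray, a cone with half-angle `theta` has a footprint of roughly `2 * t * tan(theta)`, and the mipmap level to sample is log2 of that footprint measured in finest-level voxels. The sketch below only produces the (distance, mip level) schedule of a single cone; starting one voxel out and advancing by the current footprint are assumptions, and real implementations tune both.

```python
import math
from typing import List, Tuple


def cone_schedule(half_angle: float, voxel_size: float,
                  max_dist: float) -> List[Tuple[float, float]]:
    """(distance, mip level) pairs visited by one cone.

    The footprint diameter grows linearly with distance; the mip level is how
    many times the finest voxel must double in size to cover that footprint.
    """
    samples = []
    t = voxel_size  # assumption: start one voxel out so the surface doesn't sample itself
    while t < max_dist:
        diameter = max(voxel_size, 2.0 * t * math.tan(half_angle))
        level = math.log2(diameter / voxel_size)
        samples.append((t, level))
        t += diameter  # assumption: advance by the current footprint
    return samples


# tan(half_angle) = 0.5, so the footprint equals the distance travelled
for t, level in cone_schedule(math.atan(0.5), 1.0, 12.0):
    print(f"t={t:.1f}  mip level={level:.1f}")
```

Because the step grows with the footprint, the number of samples grows only logarithmically with distance, which is where the speed comes from.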

So each pixel on the screen fires a couple of those "cones". We check where the cones hit geometry in the mipmapped octree, and then sample the light that was bounced into our (global) direction. The advantage of screenspace techniques is that we sample for all pixels visible to the camera; nothing more, nothing less. Optionally we can do it at a lower resolution to get a significant speed gain, and then upscale the image with some smart blurring. Another advantage is that any pixel on the screen can gather light, including objects that weren't involved in the GI pipeline so far.

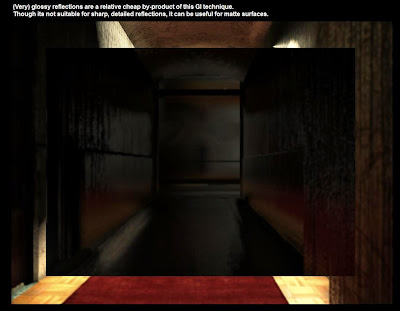

Glossy reflections

A cool feature of VCT is the ability to add 1 extra ray to sample specular lighting, giving glossy reflections. The cone angle depends on the material glossiness. Highly specular surfaces use very narrow cones, meaning that we keep sampling from the higher-resolution mipmap levels for longer, leading to less blurry results.
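The text only says the cone angle depends on glossiness. The linear glossiness-to-angle mapping below, and its 1 to 45 degree range, are purely assumptions for illustration. The second function uses the footprint relation `2 * t * tan(half_angle)` to show how much longer a narrow cone stays on the sharp mip levels.

```python
import math


def glossy_half_angle(glossiness: float, min_deg: float = 1.0, max_deg: float = 45.0) -> float:
    """Hypothetical mapping: glossiness 1 -> narrowest cone, 0 -> widest cone (radians)."""
    g = min(max(glossiness, 0.0), 1.0)
    return math.radians(max_deg + (min_deg - max_deg) * g)


def distance_until_level(half_angle: float, voxel_size: float, level: int) -> float:
    """Distance at which the footprint 2*t*tan(half_angle) reaches voxel_size * 2**level."""
    return voxel_size * 2 ** level / (2.0 * math.tan(half_angle))


for glossiness in (0.2, 0.9):
    angle = glossy_half_angle(glossiness)
    print(glossiness, round(distance_until_level(angle, 1.0, 2), 2))
```

The glossy surface travels several times further before dropping to mip level 2, so its reflection stays sharper, within the limits of the voxel resolution.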

T22 doesn't use VCT, but the same technique can be used for sampling glossy reflections. The advantage is that it works on any surface, and it can sample behind the camera. The disadvantages are the low level of detail and the lack of G.I. in the reflected portion; voxels that were not directly lit won't appear in the reflections either.

================================================================

T22 Adjustments

Cool and the Gang. But I said T22 doesn't exactly use VCT. What is wrong with it then? Well, 4 things:

A: The octree requires a lot of memory (you can win a lot by reducing the level of detail though)

B: Updating the octree is very costly

C: Traversing the octree to sample data during the raymarch isn’t that fast

D: Goddamn difficult to implement correctly. I might be too dumb to do it all right.

The octree is needed to store the world representation required for raymarching and gathering light. It can help speed up the raymarching, and moreover, it's pretty much the only way to store a lot of geometry at a relatively detailed level. But at the same time, this octree is the Achilles heel of VCT: coding it is nasty, and maintaining it is expensive, especially when dealing with big, roaming worlds. Another detail issue is sampling. When raymarching, we have to dive into the octree to find which cell we are sampling at any given XYZ position. It's not that bad, but there are faster ways.

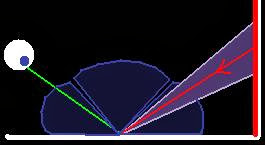

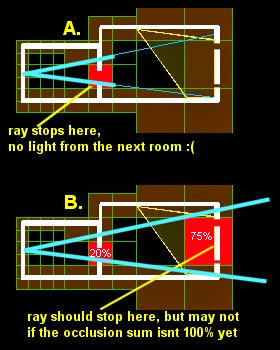

When I finally got VCT "working" in T22, the framerate crumbled from a lousy ~18 fps to a terrible ~4 fps. Some nuance: my code probably isn't very well optimized, and it runs on an old 2008 laptop. Anyhow, such a low framerate is unacceptable, and the results weren't exactly great either. The problem arises when mipmapping. Tiny blocks are combined into bigger blocks, but what if 50% of the blocks are "vacuum" (not filled with geometry), and the other half are walls, floors, or whatever? I decided to give cells a density value. Later on, when raymarching, you have to decide where and when to stop sampling. If we hit a 50% occluding block, should we immediately stop sampling, or continue until the sum of occlusions reaches 100%? The first option prevents light leaks, but it doesn't work very well in narrow environments. Rays get stopped at narrow passages (doors, windows, ...), so light from a bright corridor won't get into a darker room. That is exactly NOT what we want to achieve; G.I. should bring light to darker places!
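The two options can be compared with a small accumulation sketch. Each sample along a cone has an RGB light value and a density (0 = vacuum, 1 = solid), and each sample counts in proportion to how much of the cone is still unoccluded. Using standard front-to-back compositing as the way to "sum occlusions" is an assumption here, as is the 0.99 cutoff.

```python
from typing import List, Tuple

Sample = Tuple[Tuple[float, float, float], float]  # (RGB light, density 0..1)


def gather(samples: List[Sample], stop_at_first_hit: bool) -> Tuple[List[float], float]:
    """Accumulate light along one cone, nearest sample first.

    stop_at_first_hit=True  -> first option: stop at any occluding block.
    stop_at_first_hit=False -> second option: continue until (almost) fully occluded.
    """
    light = [0.0, 0.0, 0.0]
    occlusion = 0.0
    for rgb, density in samples:
        weight = (1.0 - occlusion) * density  # only the still-visible part counts
        for c in range(3):
            light[c] += weight * rgb[c]
        occlusion += weight
        if (stop_at_first_hit and density > 0.0) or occlusion >= 0.99:
            break
    return light, occlusion


# A cone passing through a half-filled doorway block into a bright corridor:
doorway = [((0.0, 0.0, 0.0), 0.5),   # dark wall edge, 50% density after mipmapping
           ((1.0, 1.0, 1.0), 1.0)]   # brightly lit corridor wall
print(gather(doorway, stop_at_first_hit=True)[0])   # [0.0, 0.0, 0.0]: the room stays dark
print(gather(doorway, stop_at_first_hit=False)[0])  # [0.5, 0.5, 0.5]: light gets through
```

With the second option, the same rule would also let light through a thin wall whose mipmapped density is below 1, which is exactly the leak problem.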

The second option isn't optimal either, as we may stop too late (or not at all), and thus gather light from places we can't actually see. The VCT demos probably hide this by using a high-resolution octree, sufficient rays per pixel (more rays allow narrower cones, and narrower cones mean fewer errors), and who knows what else.

It's worth mentioning that the same light-leaking problem occurs in pretty much any grid/cell-based solution. CryEngine's "Light Propagation Volumes" has this problem, and so does T22's "Ambi-Volume-Texture-Tracing", or whatever you want to call it. Most of the time this isn't much of a problem, since it's hard to tell whether the G.I. is incorrect anyway. But in some places it leads to undesired situations. As said before, you have little control over realtime G.I., so masking such errors can be nasty. And if we are so busy with powders and creams to hide the ugliness... shouldn't we just drop the whole technique then? I wouldn't be surprised if games like Crysis actually (partially) did.

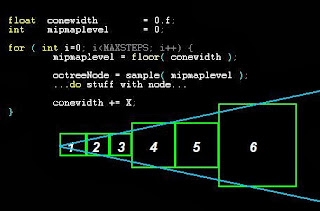

Anyway, what we did in T22 was simplify the whole VCT process and get rid of octrees. I picked up something I did before, voxelizing the world into 3D textures instead of a complicated octree, and then adopted the cone-trace idea to fix undersampling issues by mipmapping the 3D textures. So the pipeline is pretty much the same as VCT:

1- Voxelize geometry

2- Light voxelized geometry - put results in 3D textures

3- Mipmap 3D textures

4- For each pixel on the screen, sample bounced light with a couple of cone-shaped rays from the mipmapped 3D textures

A whole lot of effort for a bit of blurry light smeared all over the corridors...

The advantage of 3D textures is that you can directly insert voxels at the right places by simply using their 3D world positions. Same thing for reading the textures: all we need is a coordinate. And because the march sequences usually read texels located at the same spots, we benefit from hardware caching. Raymarching through a 3D texture is faster than traversing an octree, and much easier to work with. But it also has disadvantages: 3D textures consume a lot of memory. This forces you to reduce the level of detail (thus bigger voxel Lego blocks, less sharp reflections, bigger hit detection inaccuracies) and to keep the boundaries close. That means the 3D textures only cover a limited (cubic) space around the camera. Distant geometry must fall back on other GI methods.
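A sketch of the "all we need is a coordinate" part. The volume is centered on the camera and snapped to whole voxels (the snapping is an assumption, meant to keep static voxels from shifting between texels as the camera moves), and a world position maps to a texel index with a subtraction and a division. All names and parameters are hypothetical.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def volume_origin(camera: Vec3, voxel_size: float, resolution: int) -> Vec3:
    """Min corner of a cubic volume around the camera, snapped to the voxel grid."""
    half_extent = voxel_size * resolution / 2.0
    return tuple(math.floor((c - half_extent) / voxel_size) * voxel_size for c in camera)


def world_to_texel(pos: Vec3, origin: Vec3, voxel_size: float,
                   resolution: int) -> Optional[Tuple[int, int, int]]:
    """Integer texel index for a world position, or None outside the volume."""
    idx = tuple(math.floor((p - o) / voxel_size) for p, o in zip(pos, origin))
    return idx if all(0 <= i < resolution for i in idx) else None


def texel_to_uvw(idx: Tuple[int, int, int], resolution: int) -> Vec3:
    """Normalized coordinate of the texel center, as a texture sampler expects."""
    return tuple((i + 0.5) / resolution for i in idx)


origin = volume_origin((0.0, 0.0, 0.0), voxel_size=0.5, resolution=64)
print(origin)                                             # (-16.0, -16.0, -16.0)
print(world_to_texel((0.0, 1.0, 0.0), origin, 0.5, 64))   # (32, 34, 32)
print(world_to_texel((20.0, 0.0, 0.0), origin, 0.5, 64))  # None: outside, use a fallback
```

No tree walk is needed in either direction, which is the main simplification compared to the octree.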

Likely, 75% or more of your world will be vacuum: unfilled space. With octrees, you don't subdivide cells that don't contain any geometry, keeping memory consumption low for open spaces. With 3D textures, each cubic meter requires the same number of texels, whether it's filled with geometry or not. That could be devastating for large/outdoor scenes, but note that you can stretch 3D textures over a wider space if needed. When the geometry is spread over a wider area, you can generally get away with a coarser grid as well.
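Some quick arithmetic shows why the boundaries must stay close. The layout below is hypothetical: 6 directional RGBA8 values per voxel (24 bytes), plus a full mip chain.

```python
def volume_bytes(resolution: int, bytes_per_voxel: int, with_mips: bool = True) -> int:
    """Memory for a cubic 3D texture, optionally including all mip levels."""
    total, n = 0, resolution
    while n >= 1:
        total += n ** 3 * bytes_per_voxel
        if not with_mips:
            break
        n //= 2
    return total


BYTES_PER_VOXEL = 6 * 4  # assumption: 6 directions x RGBA8
for res in (64, 128, 256):
    mib = volume_bytes(res, BYTES_PER_VOXEL) / 2 ** 20
    print(f"{res}^3 voxels: {mib:.1f} MiB")
```

Each doubling of the resolution costs 8 times the memory, and the mip chain adds roughly 1/7 on top. Stretching the same 128^3 texture over twice the width (for example 1 m instead of 0.5 m voxels) covers 8 times the volume for the same memory, at the cost of coarser blocks.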

Well, T22 will be indoors mostly, so we aren't dealing with huge spaces. Yet we may still look beyond the area covered by the 3D textures. So what I did was bake the GI into the geometry vertices (note that the world is tessellated to some degree). Lower-end computers or distant geometry use the pre-baked GI; otherwise we compute it in realtime. Or both: medium-end settings combine a realtime bounce with a pre-baked bounce. So that makes it half realtime. Or something. It may sound cheap, but hey, you've got to think of something. VCT would eventually hit its limits as well, as you can't make a super-octree that covers the entire world. I believe the relatively small Sponza Theatre already uses many hundreds of megabytes (or even over a gig) of video memory, though that is a highly detailed octree. Anyhow, you need a backup (the same goes for Light Propagation Volumes).
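One way to combine the two, sketched under assumptions: inside the 3D-texture volume use the realtime result, outside it use the baked vertex GI, and fade linearly between them over a margin near the volume border so there is no visible seam. The fade width and function names are hypothetical; the medium-end "realtime bounce plus baked bounce" mode would add the two terms instead of blending them.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def realtime_weight(pos: Vec3, vol_min: Vec3, vol_max: Vec3, fade: float) -> float:
    """1 deep inside the volume, falling linearly to 0 at its border."""
    # Distance to the nearest face of the box (negative when outside).
    d = min(min(p - lo, hi - p) for p, lo, hi in zip(pos, vol_min, vol_max))
    return max(0.0, min(1.0, d / fade))


def blend_gi(pos: Vec3, realtime: Vec3, baked: Vec3,
             vol_min: Vec3, vol_max: Vec3, fade: float = 2.0) -> Vec3:
    w = realtime_weight(pos, vol_min, vol_max, fade)
    return tuple(w * r + (1.0 - w) * b for r, b in zip(realtime, baked))


lo, hi = (-16.0, -16.0, -16.0), (16.0, 16.0, 16.0)
rt, baked = (1.0, 0.8, 0.6), (0.2, 0.2, 0.2)
print(blend_gi((0.0, 0.0, 0.0), rt, baked, lo, hi))   # deep inside: realtime only
print(blend_gi((15.0, 0.0, 0.0), rt, baked, lo, hi))  # 1 unit from the border: 50/50
print(blend_gi((20.0, 0.0, 0.0), rt, baked, lo, hi))  # outside: baked only
```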

Another change, or actually a cheap hack, I made was in the voxelizing process. Rather than voxelizing the scene each cycle, I pre-calculate the voxels for all geometry. Each chunk of the world has its own array of pre-generated voxels (voxel = a position, color, normal, ...). Dynamic objects also have pre-calculated voxels; as an object moves or rotates, its voxels transform with it. Note that small objects don't have voxels, only objects big enough to really make a difference in the lighting, or doors that block light from another room.
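Moving a dynamic object's precomputed voxels is a per-voxel transform: positions get the object's rotation and translation, normals only the rotation, and colors stay as they are. The sketch below only rotates around the vertical (Y) axis, like a door swinging on its hinges; the `Voxel` fields follow the "position, color, normal" list above, but the names are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Voxel:
    position: Vec3  # object (local) space
    normal: Vec3
    color: Vec3


def rotate_y(v: Vec3, angle: float) -> Vec3:
    """Rotate a vector around the Y axis (right-handed, angle in radians)."""
    c, s = math.cos(angle), math.sin(angle)
    x, y, z = v
    return (c * x + s * z, y, -s * x + c * z)


def to_world(local_voxels: List[Voxel], angle: float, translation: Vec3) -> List[Voxel]:
    """Place an object's precomputed voxels in the world for this frame."""
    world = []
    for v in local_voxels:
        p = rotate_y(v.position, angle)
        world.append(Voxel(position=tuple(a + b for a, b in zip(p, translation)),
                           normal=rotate_y(v.normal, angle),  # normals are not translated
                           color=v.color))
    return world


# A door voxel 1 unit from the hinge, swung open by 90 degrees:
door = [Voxel((1.0, 0.0, 0.0), (0.0, 0.0, 1.0), (0.4, 0.3, 0.2))]
moved = to_world(door, math.radians(90), translation=(5.0, 0.0, 0.0))
print(moved[0].position, moved[0].normal)
```

The transformed voxels can then be written into the 3D textures each cycle, just like the static ones, without re-voxelizing any geometry.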

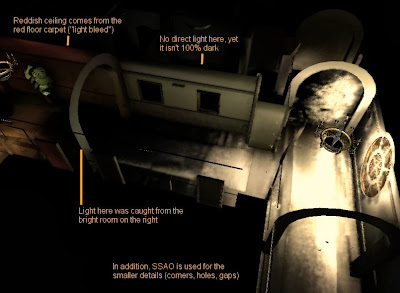

The final raymarching step works pretty much the same as in VCT, except that we sample directly from 3D textures (much simpler). Every few steps we march, we sample from a lower-detail mipmap level to get the cone effect. We can also make glossy reflections if we like, although the sharpness isn't as great as VCT's, since the 3D textures contain less detail than octrees. Despite the speed gains, this process still requires a lot of horsepower, so I do it at a much lower resolution. The situation is somewhat workable on my old laptop, but the result is too blurry. You don't see it that much in bright areas, but darker parts that don't catch direct light are smudgy.

Note how the red carpet reflects on the walls. Another nice thing about screenspace techniques is that it will also work naturally for decals we pasted on top of the geometry, such as the top-right hole.

Too bad the buffer is blurry though. Possibly I could get better quality by using a smarter upscale process (UDK does that for their VCT variant), but I'm more hoping my next computer can cope with a higher resolution.

================================================================

Conclusion

The conclusion is that I don't have a conclusion yet. The results I have now are the best I've had so far, but still crappy compared to a good pre-baked result. The 2 biggest problems are the blur in dark areas (I can already smell the complaints when the next demo is released), and light leaks plus other imperfections. The results are sometimes unpredictable or incorrect. In other words, I still have to add secondary lights and other tricks to get the lighting as desired. Probably I can fix some bugs with fine-tuning. And the blur can be reduced by using a higher resolution for the final screenspace gathering step. My desktop is dead, so I haven't tried T22 on a faster computer for a year, but another guy from the team had proper framerates (40+), so I expect a modern computer can do miracles when it comes to reducing the blur. That's very necessary, because despite high-res textures, T22 isn't sharp compared to most modern games. The G.I. and the somewhat poor SSAO are part of the cause.

The real question is: how badly does T22 really need realtime G.I.? Indoor areas aren't much affected by day/night cycles, you won't destroy walls, and there is little movement in general. On the other hand, typical dark horror areas may benefit from fully dynamic lighting when playing with flashlights, damaged lamps, or torches. The new UDK engine (which uses some sort of VCT) seems promising when it comes to G.I., but I still wonder how good it really is. Is the effect correct enough, fast enough, flexible enough, and adjustable enough to be used in any situation? I doubt it. Besides, the goal of T22 shouldn't be beating UDK or Crysis engines on tech features, because that is never gonna happen. The game just has to look good. Or scary actually, whether that requires realistic graphics or not. If pre-baked solutions do a better job achieving that, we should use those instead. Yet it's so attractive to keep trying realtime G.I., and as hardware keeps getting faster and faster, we might be able to upscale the current solutions to better looking, more accurate versions...

So, I can't really decide. Which isn't good, because such techniques have a big impact on the end results. Each time we jump to another G.I. system, the looks of the rooms we've done so far will (completely) change and have to be tuned again. "Fortunately" we haven't made dozens of rooms yet, but at some point we have to decide and trust that the technique will still look OK X years later, when the game is supposed to be finished. It sucks to have multiple options.

The VCT stuff is clever, but I haven't been sold on it due to the problems you've mentioned. In the end it just ends up being too expensive in terms of performance and/or memory to justify the drop in quality relative to static diffuse bakes. Indirect specular would actually be the more interesting benefit to me, since existing solutions for indirect specular are really bad for the most part. I think if I were going to try realtime diffuse GI, I would go down the path of pre-baking transfer data for static geometry and then applying the lighting and bouncing in realtime.

By the way, as far as I know Epic is no longer pursuing VCT and is just using lightmaps now. I suspect they were also unable to justify the expense, especially given how flexible they need their engine to be for their licensees.

Hey MJP, nice to see someone here that has helped me plenty of times on Gamedev.net :)

You are right about the (too) high cost of VCT. I didn't know Epic dropped VCT, but that makes me feel less like a loser if I go back to a (partially) baked solution as well hehe. I also wonder, did Crysis really use their LPV technique? I think not, but I could be wrong of course. But if they didn't, what kind of pre-baked solution did they use?

The VCT cost might be "fixed" some day by evolving hardware though. I'm more curious about the correctness. The octree contains plenty of detail if you want, but as the cones get wider and wider, you have to decide whether the trace stops or continues when hitting a filled octree cell. This either leads to light leaks or missing incoming light. A real headache in any grid-based solution I think.

The glossy specular is nice indeed, though not always useful unless you do multiple bounces (or add some fixed ambient). With a single bounce only, the stuff being reflected won't contain indirect lighting, leading to very dark or no reflections at all in dark areas. Also, stuff not incorporated in the grid (particles, skyboxes, clouds, translucent stuff) won't be reflected. Usually not a big deal, although having a good sky in your reflections is a must for outdoor situations.

I'm not sure about Crysis 3 since I never played it, but Crysis 2 only used it for the PC version. But even in that version they still primarily used 'fake' bounce lights and probes to approximate indirect, and then the LPV just added a little bit of extra bounce from the sun. I had to look really hard to notice it.

That makes sense about the glossy reflections. I think if I were to try it I would just pre-bake a few diffuse bounces and then bake that into the voxel grid. However another problem with using cone tracing for indirect specular is that it's a pretty rough approximation of an actual specular BRDF. A real specular lobe is not a cone, it's roughly Gaussian-shaped at head-on angles and takes on more complex shapes at grazing angles. I believe Cyril Crassin did something to approximate a Gaussian, but I'd have to read his paper again to be sure. However this would still give you fairly incorrect results at grazing angles since the lobe shape would be wrong and you'd be missing Fresnel effects. You can try to add the Fresnel in after the fact like you would with a cube map, but this is incorrect and will over-brighten the result for higher roughness values. You also won't get the long "streaky" reflections that you're supposed to get at grazing angles, which is a pretty key visual component for things like nighttime shots of water or wet streets.

Excellent post and very informative, thank you very much for this. I am also doing research in real-time GI and have wanted to try voxel cone tracing and/or LPV for a while, but your post gave me a clear and complete picture of all the limitations that these techniques entail. I think it requires too much cleverness to cover up the artifacts, and there are too many corner cases (no pun intended) to take into account, so I will probably not bother. Do you have any opinions on the real-time screen space photon mapping technique from nvidia (http://graphics.cs.williams.edu/papers/PhotonI3D13/)?

@MJP

I thought Crysis 2 partially "faked" it. It would have been too good to be true. But then again, we shouldn't forget that the end result still looks amazing of course. It's like magicians. You know it's fake, but who cares? The act is amazing.

Baking one or few bounces into voxels for the glossy reflections is a good idea. Right now I'm using RLR and cubemaps instead of the GI techniques described above to get reflections, but this is something to keep in mind.

@Sam

Merci, glad it helped you! And thanks for the link as well. I had never heard of that technique, so I'm reading it now. At first glance, it sounds nice. But I'm pretty sure I can find some "close-but-no-cigar" cases... If I'm able to understand the technique, that is (though the paper says "surprisingly easy"!).

Hello Rick, I took a few months' break from reading your blog, but I am back again ;) And I am really glad to see that you are still working on your game. That is awesome. Your posts are very informative and I think your blog deserves more recognition than it gets :)

What tools do you use to set up your scenes? I am thinking about writing a 3D game myself (totally different genre). Well, I've already started, but building everything from scratch takes a lot of time... and I do not have that time :( So I'll probably switch to some free engine.

Let me know if you are ever near Gdańsk (Poland), we can drink a beer or two :)

When I go to Poland it's usually in the South, very close to the Zywiec brewery :). A few of our harvesting (Ploeger) machines are running in the Gdansk area though, so who knows.

Scene tools... I'm using Lightwave to create 3D stuff, but most of the artists I know use a mixture of Maya, 3DMax and Blender. Let the artist decide which tool he wants to use, and make importers/exporters for that. A simple and often used format, for example, would be OBJ.

Scenes and objects are loaded into a Map/Object/Particle editor I made myself, where we add engine-specific things: place lights, generate GI data, put furniture, assign materials, et cetera. Making these editors is hard work though, especially making them user-friendly enough that someone else can use them too. That's why a lot of people pick a game engine such as UDK instead, which comes with nice tools, documentation and *working* software out of the box. Well, that's a choice to make. Is your goal to program and learn/play a lot, or do you care more about the end result, thus a game?

Ah Żywiec, nice ;-)

Yeah, I know those editors are tedious to make user-friendly. But making an engine is also hard work. You know, I am working my ass off as a software developer on a daily basis, but not in a 3D world. I've made a few 2D games in DirectX for fun. I've started to code my game in OpenGL, but it takes a lot of time. Building your own fonts, or camera movement. Basic stuff, and it took me like 2 days to figure out how to properly move my camera object.

At this pace I will probably never start coding the content of my game. The advantage of an engine is that it frees you from groundwork, but on the other hand you lose flexibility, and you will probably often hit a wall because some things won't be achievable in the engine.

I thought I would do it in FreePascal and then compile for multiple platforms, but picking such a language makes it even harder because the community is much smaller and the engines are pretty basic (again because the community is much smaller).

So, to answer your question: I would rather have fun and earn some money, if that's possible :)

Are you still making your game in Delphi?

Groundwork indeed is huge. And it never seems to be finished either, although some parts like a camera system or audio are less likely to change once they got implemented "right". But then from a software-architecture perspective, even the definition of "right" changes over time as you gain experience.

The T22 engine (still in Delphi7) has most base elements (resource management, graphics, physics, audio, walking with a player, script stuff, AI, ...), yet none of those components is 100% finished. So in the end I code far less on actual game stuff than I would like to.

That, and the less user-friendly tools, slow down the production of game environments a bit. So I sometimes wonder if this is really the right way to do it. But as you mention, when picking another engine I'd probably have to drop a lot of small but subtle features that make this game different. And from a purely egoistic programming perspective, it would be less fun and less of an achievement for me personally :)

Rick, I think there is no turning back for you now ;) Have you thought about a Kickstarter campaign? :) I am sure that many folks would back you with some cash. You already have a lot to show!

I thought about Kickstarter. You're not the only one who said that, and that's really a compliment for this project. But I'm mainly concerned about 3 things:

- Not sure if it's (legally) possible in the Netherlands to just get a bag of money. Got to pay tax, whatever. Thing is, I have zero experience when it comes to setting up a business. Apart from the legal issue, you also have to manage the dollars properly when it comes to paying helpers, buying software, or whatever the money is spent on. I'm sure there are people who can manage that, but... it needs to be someone I can fully trust.

- Say we collect $50k. Sounds like a lot, but it really isn't. At least not if you want to give your helpers a somewhat reasonable hourly salary. One system could be pay-per-asset: you make a monster for me, you get $5. It can't be too much, otherwise the pockets will be empty soon as well. Just a little bit to stimulate. I like this idea, but it's still a lot of work to regulate all of this properly, and we have to avoid people making shit and expecting some dollars for it. Money can cause conflicts.

- I'm a very honest guy (really). And I would hate to receive money from fans, and give nothing in return. Of course I will try to pay you back with a game-release (or playable mini game at least), but I simply can't give any guarantees. I would feel bad about getting money now really.

I know honesty and being too careful won't get you far. Successful men are those who took risks. So I'm not saying Kickstarter is out of the question. But I really want a more solid foundation (thus having a team I know can do the job) before involving money. Or come up with a completely different target that is much smaller / more realistic than making Tower22. But I'm not so sure I could give love to such a target hehe

Rick, regarding your issues:

1. You are a smart guy; half an hour on Google should give you answers regarding the legality of such a business. Or talk to the fellows from other Kickstarter projects in the Netherlands.

2. $50k is not much, but... everything depends on the size of the project. I think careful planning will allow you to estimate the cost of your game. It is similar to estimating the time needed to implement a given feature. Of course you should add +30% to this as a rule of thumb, because something can always go wrong. Maybe you should start with a smaller game. You know, instead of the full building, make the first four floors. Plan the gameplay for 8 hours and sell the game for $7. Smaller game = smaller costs, easier to estimate and easier to release.

The way I see it, you have two choices: build your game forever, or release something smaller that gives you a starting platform for making something bigger :)

3. I think honesty might be the key for Kickstarter. A lot of people are willing to take the risk if you tell them the truth and show them what you already have.

Well, you're right about pretty much everything there. I'll just ask around on Gamedev or something and see what others have experienced.

As for smaller, more realistic targets, we're not trying to make the whole game. It would be the ultimate goal, but in this state, it's simply not possible. I'm hoping to create a small playable part of the game, then sell it for a small price, or spread it as freeware. If people like it, it should be easier to have a second run with a bigger team, bigger goals, and eventually a bigger budget.

But looking at how long it takes to even finish a bunch of rooms, I'm wondering how big that portion should be exactly. How much is needed to make people curious for more? An action game can be fun right away, even if it's just a single stage. If the gameplay is addictive, it can quickly prove itself. A horror game like T22, on the other hand, evolves very, very slowly. A random fragment of the game doesn't tell much.

Of course I can think of a particular scene, but it may give ~15 minutes of gameplay at most. Well, that's probably the only entry point we've really got. But I'm not so sure people are willing to pay & wait for 15 minutes of "game".

A new demo movie will be released soon (said that 4 months ago already, I know, but really, something will come). I want to send that movie to a Dutch games magazine to get some more exposure, and thus hopefully get more team members. But that might also be the right time to query the audience for a Kickstarter budget and announce a simpler, smaller goal.

I think it's a good idea to show your content to some magazines. You could also tell about your Kickstarter campaign on Gamedev and pascalgamedevelopement.com.

A few rooms, one smart puzzle, and you will have a lot of supporters, trust me.

People are willing to pay for much less. Most Kickstarter campaigns don't even offer a playable demo; they just present some screens and say "how awesome the game will be when you fund it" ;)

Looking forward to seeing this demo movie :)

Well, I listened to the comments... Not quite sure how and when to start a KS campaign, but the next bus stop will be a playable demo. First I have to finish this movie though. Hopefully it will help gain publicity & manpower.

After that I'll announce the plans. As you said, if we open up for donations, we should be honest and transparent about the goals and expectations. The floorplans and ideas for this demo have been made, though it won't be just "a few rooms". Unlike a boxing game, where you can make a single boxing ring with 2 puppets, the T22 game style simply requires a somewhat bigger environment.

The voxel approach seems like a realtime version of the radiosity calculation :) There too, you simplify the surfaces into blocks and then compute the radiance of each of them with rays going around. Computing small blocks used to be slow; now, with more power, it's possible to produce higher-quality results. It'll probably take some years before voxels are mainstream in AAA games, or maybe something new will come out ;) Even the new consoles have games running really slowly (~ fps), so I don't think voxels, as they are now, will be the solution ;)

I think modern game engines nowadays use irradiance probes, baking static or even semi-dynamic/realtime indirect illumination. I'd personally use them too: probes with static lighting, screen-space raytraced glossy reflections like in Killzone for PS4 (see their paper) for the environment, precomputed or semi-dynamic cubemaps for reflections on dynamic objects, reflective shadow maps for simulating light bouncing in certain cases (e.g. the player's light), then deferred rendering to cover any other case with as many lights as you want :)

I don't think people notice much light bouncing, they are more attracted by volumetric lights, shadowed particles, good quality of textures, good shadows and of course gameplay :)

You're very right about "people not noticing much light bouncing". And that makes it a bit silly to spend so much energy and so many CPU/GPU resources on it. Realtime GI has really become a technical feature to brag about in your engine.

In fact, a few weeks ago I started with a half-baked solution: "GI probes" scattered all over the world, containing pre-calculated incoming light. It's so much easier and faster in the end, although I still plan to add some dynamic features to these probes so they at least react to light changes. Maybe that Killzone paper you mentioned has some nice hints!

I'm working on an engine too, but for years I read about this new paper or that game or demo, and in the end I mostly made lots of "tests" instead of building something and moving on ;) Now that I have full time to dedicate to my project, I decided to keep things simple and advance with timeframes that are as rigid as possible.

My logic is to put everything in perspective of time and what I need to create. For example, I'm looking more closely into Physically Based Lighting, because it seems to make lighting/shading more "uniform" across different scenes, so you don't need to hack settings to get the results you want (e.g. a metal looks OK in one scene, but the same metal is too dark in another). Yeah, artists need to work a little bit differently (e.g. using a reference color table to know how to correctly reproduce metals and other types of surfaces), but it could speed up the process.

There's a game called Dark Souls, made for consoles and then ported to PC, that has just lightmaps and makes very minimal use of dynamic lighting (I fear even dynamic shadows are next to none :P). But the game was a big success because the gameplay is very nice and the environments are inspired. People are easily "distracted" by good games ;)

It's funny: next year a sequel will be released and, judging from the videos, they have implemented realtime shadows and rooms that can change lighting, and people are so excited that they comment as if it were realtime global illumination :)

The Killzone paper is on the Guerrilla Games website, and you can also find a video on YouTube where someone discusses it.

You can also look at the Far Cry 3 paper; they use different types of probes for outdoor lighting and "local" lighting. Probably a little complicated, but interesting.

There's the "WebGL Deferred Irradiance Volumes" demo, which updates the lighting of a Sponza scene when the sun changes. Probably the easiest example you can find.

Then I'd also look at some documentation from the game Remember Me, where they talk about shading, reflections, wet surfaces and other things:

http://www.fxguide.com/featured/game-environments-parta-remember-me-rendering/

http://www.fxguide.com/featured/game-environments-partb/

http://www.fxguide.com/featured/game-environments-partc/

Just yesterday I played Crysis 3, and in the first level they have a very nice rain scene; I was impressed! In your game too, some water dripping down a wall or raindrops falling through holes in the ceiling could be cool :)

Looking around on the Killzone website now, thanks for all those links! I saw the Far Cry 3 paper earlier, and something similar is in the making for T22 as well, but with a much denser probe grid. T22 uses narrow indoor environments, whereas Far Cry is a very open game of course.

It's probably best to stick with a certain technique rather than changing all the time, indeed. Then again, I haven't found any GI solution I'm really happy with so far (T22 has used some sort of realtime GI right from the beginning), so I'm still looking. And of course, time is remorseless. Something that looks good now might be outdated 2 years later.

But I agree, more effort should be put into making a fun game rather than chasing the best graphics. It's a goal I won't reach as a solo programmer anyway, and on top of that it makes the 3D content production much harder. It's already (too) difficult to find people who have talent AND time for this project. I hate to take a step back, but if we want to reach something playable you can download, we might have to drop some bits. Though that comes at a price as well: something that looks good attracts an audience (and artists) more easily.

On top of that, T22 is mainly about getting sucked into the environment. If the graphics are lacking or inconsistent, it's also harder to drag the player into a certain horrible mood. Amnesia proved that you don't need superb graphics to create a scary game, but still... I like nice pictures :) All in all, it's hard to find the right balance.

I'm thinking that indirect lighting is one of those things that nowadays give you very little added value compared to the many months of effort you need to spend on it (to fully understand every paper on the topic and compare techniques + trade-offs etc. etc.). Look, nowadays you can do in real-time:

Physically based HDR lighting with easy-to-implement dynamic environment diffuse+specular (like IBL, but sampling into your dynamically rendered skybox/cubemap mip chain based on glossiness), which replaces the old-school ambient term and Fresnel reflection. Lots of legwork is needed to make sure the correct mip is used, that not too much and not too little is rendered into the cubemap, etc., but that's no problem for realtime. Add the usual screen-space techniques like AO and screen-space reflections for local (as opposed to the cubemap's global) reflections, and you're pretty much set for indirect lighting, I propose.

Because this gives you *some* plausible light bounce on every surface. Any bounce light is better than none and absolutely sufficient for 99% of gamers. Only graphics PhDs can really tell whether proper indirect radiosity etc. was calculated to arrive at the correct mix of bounce light and glossy reflection, as opposed to oldschool cubemap lookups for diffuse-ambient and specular-reflective contributions.

Not sure what I'm missing... I don't think GTA5 used any more specific GI approach, at least not on the last-gen consoles. Once you have diffuse-ambient + specular-reflective from the cubemap, screen-space reflections and AO, you have achieved a very pretty-looking "visually important 97% of what GI is shooting for"... I think :D
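To make the glossiness-based mip selection from the comment above a bit more concrete, here is a minimal Python sketch. It assumes a prefiltered cubemap mip chain where mip 0 holds the sharp reflection and the last mip is blurry enough to stand in for diffuse ambient, and it uses a linear glossiness-to-mip curve. That curve and the names `mip_for_glossiness` and `sample_mip_chain` are illustrative assumptions, not T22's or any particular engine's code. In a shader, the GPU would do the blend for you with a `textureLod`-style lookup; here each mip is reduced to a single color for brevity.

```python
import math
from typing import Sequence, Tuple

Color = Tuple[float, float, float]


def mip_for_glossiness(glossiness: float, num_mips: int) -> float:
    """Map glossiness (0 = rough, 1 = mirror) to a fractional mip level.

    Assumption: linear mapping, mip 0 = sharpest, last mip = blurriest.
    """
    g = min(max(glossiness, 0.0), 1.0)
    roughness = 1.0 - g
    return roughness * (num_mips - 1)


def sample_mip_chain(mip_colors: Sequence[Color], mip: float) -> Color:
    """Blend the two nearest mips, like trilinear filtering would.

    mip_colors[i] is a hypothetical stand-in for the texel fetched from
    mip i along the reflection vector.
    """
    mip = min(max(mip, 0.0), len(mip_colors) - 1)
    lo = int(math.floor(mip))
    hi = min(lo + 1, len(mip_colors) - 1)
    t = mip - lo
    a, b = mip_colors[lo], mip_colors[hi]
    return tuple(a[i] + (b[i] - a[i]) * t for i in range(3))


# Usage: a 3-mip chain going from bright (sharp) to black (fully blurred).
chain = [(1.0, 1.0, 1.0), (0.5, 0.5, 0.5), (0.0, 0.0, 0.0)]
specular = sample_mip_chain(chain, mip_for_glossiness(0.75, len(chain)))
```

With three mips, a glossiness of 0.75 lands halfway between mip 0 and mip 1. Engines usually tune this curve (often non-linearly) to match how the mips were prefiltered, which is part of the "legwork" mentioned above.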

The months/years of effort spent on realtime G.I. are not really the issue. I had fun trying it (and a bit of frustration seeing the results every time), and eventually you could dedicate a programmer to it.

But indeed, it still adds little value. In fact, it will cost you performance, quality, and also a degree of control the artist should have. The bad thing about static lighting is, well, it's static. The good thing is that static lighting can at least be tuned exactly to your taste. Dynamic G.I., on the other hand, can be hard to predict, not to mention the good dose of artifacts it usually brings.

And then you ask yourself whether a (correct) realtime solution is really needed... Indoor areas don't change a lot unless you want fully destructible environments, and for outdoor day/night cycles you can still make semi-realtime solutions to approximate indirect light from the sky / sun / moon. In the case of Tower22, it would be nice if a flashlight added some indirect light as well, but other than that it's not absolutely necessary.

T22 uses screen-space reflections, SSAO, and a blurry realtime-updated cubemap for reflections. This shades the corners and adds reflections and gloss to more diffuse surfaces, although it's not a valid substitute for G.I. on its own.

What I did a couple of months ago (and I believe most other games nowadays do something similar) is make a "probe grid". 1 to 4 probes are placed per cubic meter, or fewer in wider rooms. Each probe pre-calculates the incoming light from 6 directions and stores it in some volume textures. On top of that, it adds a dynamic amount of skylight, depending on what percentage of the sky it can see and what the current sky color is. Not very realtime, but at least it's simple and fast. And a nice extra benefit is that all your objects or particles can sample indirect light from those volume textures as well.
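A minimal sketch of how a surface might read one of these probes. It assumes the 6 directions are the +X/-X/+Y/-Y/+Z/-Z axes, blended with squared-normal weights (the "ambient cube" scheme known from Half-Life 2; whether T22 blends its 6 directions exactly this way is an assumption). The skylight term is modeled as baked sky visibility times the current sky color, added without any directional weighting, which is also an assumption. Interpolation between neighboring probes, which a volume texture would get from hardware trilinear filtering, is left out.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Color = Tuple[float, float, float]


@dataclass
class Probe:
    # Pre-baked incoming light from the six axis directions,
    # in the order +X, -X, +Y, -Y, +Z, -Z (an "ambient cube").
    faces: Tuple[Color, Color, Color, Color, Color, Color]
    # Fraction of the sky this probe can see (0..1), baked offline.
    sky_visibility: float


def sample_probe(probe: Probe, normal: Tuple[float, float, float],
                 sky_color: Color) -> Color:
    """Indirect light arriving at a surface with the given normal."""
    nx, ny, nz = normal
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    if length == 0.0:
        raise ValueError("normal must be non-zero")
    nx, ny, nz = nx / length, ny / length, nz / length
    # Pick the face each axis component points toward.
    fx = probe.faces[0] if nx >= 0.0 else probe.faces[1]
    fy = probe.faces[2] if ny >= 0.0 else probe.faces[3]
    fz = probe.faces[4] if nz >= 0.0 else probe.faces[5]
    # Squared components of a unit normal sum to 1: use them as weights.
    wx, wy, wz = nx * nx, ny * ny, nz * nz
    return tuple(
        wx * fx[i] + wy * fy[i] + wz * fz[i]
        # Dynamic part: current sky color scaled by baked sky visibility.
        + probe.sky_visibility * sky_color[i]
        for i in range(3)
    )
```

Because only `sky_color` changes at runtime, a day/night cycle costs nothing extra per probe: the baked faces stay untouched, and the sky term is re-evaluated on the fly.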

The system is set up to become more realtime (if needed, and if it looks good enough) by storing relations between probes. A probe knows roughly which surfaces are reflecting diffuse light towards it, and thus which probes to sample from. If you inject direct light into the probes first, you can then scatter it around quite easily for multiple bounces. But whether it will really look nice... you can only store a limited number of relations per probe before killing the memory, of course...
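The multi-bounce idea can be sketched as an iterative gather over the stored relations. This assumes each relation is a (source probe, weight) pair, where the weight folds in surface albedo and rough geometry; `MAX_RELATIONS` and this weight model are hypothetical, not how T22 actually stores its relations. Each pass re-adds the injected direct light and gathers the previous pass's result from related probes, so after k passes a probe holds up to k bounces. The weights of each probe should sum to well below 1, otherwise energy keeps growing instead of converging.

```python
from typing import List, Sequence, Tuple

Color = Tuple[float, float, float]
# relations[i] lists (j, weight): light stored in probe j reaches probe i
# via some reflecting surface (hypothetical representation).
Relations = Sequence[Sequence[Tuple[int, float]]]

MAX_RELATIONS = 8  # hypothetical per-probe memory budget


def propagate(direct: Sequence[Color], relations: Relations,
              bounces: int) -> List[Color]:
    """Per-probe light after injecting direct light and gathering
    `bounces` extra bounces through the stored probe relations."""
    if len(direct) != len(relations):
        raise ValueError("expected one relation list per probe")
    for rel in relations:
        if len(rel) > MAX_RELATIONS:
            raise ValueError("too many relations for one probe")
    current: List[Color] = [tuple(c) for c in direct]
    for _ in range(bounces):
        gathered: List[Color] = []
        for i, rel in enumerate(relations):
            r, g, b = direct[i]  # direct light is re-added every pass
            for j, w in rel:
                sr, sg, sb = current[j]
                r, g, b = r + w * sr, g + w * sg, b + w * sb
            gathered.append((r, g, b))
        current = gathered
    return current


# Usage: two probes facing each other, only probe 0 is directly lit.
result = propagate([(1.0, 0.0, 0.0), (0.0, 0.0, 0.0)],
                   [[(1, 0.5)], [(0, 0.5)]], bounces=2)
```

The relation cap is where the memory trade-off from the paragraph above shows up: fewer relations per probe means cheaper storage and gathering, but coarser bounce light.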