Well boys and girls. If you think the Tower22 engine ("Engine22") is fast as hell, ho-ho-ho, no. To the facts: currently the "Radar Station" maps run at a pounding ~17 frames per second on my laptop. Of course that depends a bit on the view. When staring at an empty corner, the speed may be an acceptable 28 fps. When looking at multiple rooms that also contain a dense cloud of lit particles, the laptop switches over to Burger-King-gravy speed: ~12 fps or even less.

The laptop is getting old though. 32 bits, and moreover "just" a GeForce 9800M GTS. A game like Crysis Warhead doesn't run smoothly either, though faster than this. My desktop computer gets far better results. It's also a 32-bit dual-core relic from the past, but with a better video card (EVGA GeForce 4700 GTS). The first demo movie, for example, ran at about 25 frames per second on the laptop (the dark empty corridors faster though), and at close to 60 fps on the desktop. Another comparison: the "Radar Station" we're working on now (almost finished!) started at 25 fps on the laptop. After adding more textures, more effects, more lamps and more objects, the framerate dropped to the ~17 mentioned earlier. No idea how it will behave on the desktop, but I guess it will be "acceptable". Well, it better damn be! Otherwise we'll get that annoying motion blur again due to the relatively large cycle intervals ;)

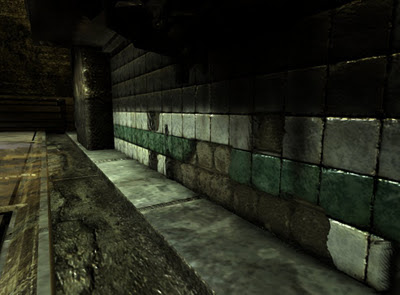

Compare this to this. At least the framerate dropped for a good cause :)

For gaming, such a low framerate sucks ass of course. Ever played Doom1 on a 386? You wanted to play the game so badly that you kept pushing, but the PC really said “please no! Stop it!”. For development though, a lower speed is "ok". I'm not playing, just flying through the maps to check code adjustments or to decorate the maps. That's also the reason why I barely touch the much faster desktop computer. With a laptop you can at least code in bed, in the bath, on the toilet, while being kidnapped by al-Qaeda, or wherever you are. So in this case I prefer mobility over speed.

I should also note that performance drops gradually. It's like getting fat; you don't gain 10 kg after a week of snacking (unless you drank 6 kegs of beer from a mug like this -blurp-). It's not that the framerate suddenly collapses when adding effect X. Nah, usually it just slows down a tiny little bit. You barely notice it, so nothing to worry about. But all those tiny bits together...
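Those "tiny bits" are easier to reason about in milliseconds than in frames per second, because costs add up in frame time while fps is its reciprocal. Below is a minimal sketch, assuming each addition costs a fixed number of milliseconds per frame (a simplification; real costs depend on the view). The ten 1.88 ms additions are hypothetical, picked only so the total lands near the laptop numbers quoted above.

```python
from typing import List


def fps_to_ms(fps: float) -> float:
    """Frame time in milliseconds for a given frame rate."""
    return 1000.0 / fps


def ms_to_fps(ms: float) -> float:
    """Frame rate for a given frame time in milliseconds."""
    return 1000.0 / ms


def stack_costs(base_fps: float, extra_ms: List[float]) -> List[float]:
    """Frame rate after each extra per-frame cost (in ms) is added, in order."""
    frame_ms = fps_to_ms(base_fps)
    history = []
    for cost in extra_ms:
        frame_ms += cost  # costs add up in milliseconds, not in fps
        history.append(ms_to_fps(frame_ms))
    return history


if __name__ == "__main__":
    # Hypothetical: ten small additions (a lamp here, a texture there), 1.88 ms each.
    for step, fps in enumerate(stack_costs(25.0, [1.88] * 10), start=1):
        print(f"after addition {step:2d}: {fps:5.1f} fps")
```

The first addition takes 25 fps down to about 23.9, which nobody notices; the tenth lands at about 17 fps. It also shows that the same few milliseconds "cost" fewer fps the slower the engine already is, which makes the creep even harder to spot.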

As said, the performance cannot be compared to commercial engines. Simply put, I have too little time and too little experience to make it lightning fast. Then again, I don't worry that much about it either. It will still take years to finish this game, so performance tweaking right now is a bit of a waste of time. Two years from now there will be faster hardware, plus some of the techniques we're using might be replaced by then, especially things like (ambient) lighting. The parallax effect shown earlier also keeps getting smarter, faster algorithms. So I'll just take another look at some of those techniques in a few years. In fact, by then one or two expert graphics programmers could help: optimizing shaders, giving professional advice, finding bottlenecks, et cetera. But at this moment, maximum performance is certainly not a priority.

No, the damage on those tiles wasn't there first. It's just a flat decal, faking depth with Parallax Occlusion Mapping...

Nevertheless, that does not mean we just blindly add features. First of all, an effect needs to be worth it. There are dozens of papers that show awesome stuff. The best thing since sliced bread... ahum, in theory. But more often than not they come at a high cost, and/or require a very specific implementation that may restrict other things in your rendering pipeline. Take BRDFs for example. By using real-world sampled data and specific formulas, you can give your materials a (far) more realistic look. Gold doesn't reflect light the same way as velvet, concrete or a freaking grapefruit for that matter. Yet most games treat all surfaces with the standard "Blinn" or "Phong" lighting model (which explains the plastic or metal look on softer surfaces such as skin or wallpaper). Why not use BRDFs then? Well:

- Hard to fit into a deferred/inferred rendering pipeline (because the lamps have to check, for each pixel, what kind of material it is made of, and then somehow get access to the BRDF parameters)

- Only 0.1% of artists know how to make BRDF (image) data. And most programmers don't know how either.

- And even if they did, it requires fucking professor hardware for the BRDF acquisition. You can't just draw them by hand, you know...

- As for the end result (with several lamps, blurs, SSAO, ambient, reflections, post-filters and a ton of other effects applied), an untrained viewer barely notices the difference between a concrete wall rendered with Phong or with Oren-Nayar.
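To put a number on that last bullet, here is a minimal sketch of the diffuse term of two models, assuming unit irradiance and the commonly used "qualitative" Oren-Nayar approximation, with roughness `sigma` given as the standard deviation of the facet slope angle in radians. With `sigma = 0` it reduces to plain Lambert. This evaluates single samples on the CPU for comparison only; it is not Tower22's shader code, and the angles and albedo in the usage part are arbitrary. All angles are expected in [0, π/2).

```python
import math


def lambert(albedo: float, theta_i: float) -> float:
    """Lambertian diffuse radiance for unit irradiance; theta_i = light angle to the normal."""
    return albedo / math.pi * max(0.0, math.cos(theta_i))


def oren_nayar(albedo: float, sigma: float, theta_i: float, theta_r: float,
               phi_diff: float) -> float:
    """Qualitative Oren-Nayar diffuse radiance for unit irradiance.

    sigma:    surface roughness (std. dev. of facet slope angle, radians)
    theta_i:  light angle to the normal (radians)
    theta_r:  view angle to the normal (radians)
    phi_diff: azimuth difference between light and view directions (radians)
    """
    s2 = sigma * sigma
    a = 1.0 - 0.5 * s2 / (s2 + 0.33)
    b = 0.45 * s2 / (s2 + 0.09)
    alpha = max(theta_i, theta_r)
    beta = min(theta_i, theta_r)
    cos_i = max(0.0, math.cos(theta_i))
    # The B term brightens rough surfaces when light and viewer are on the same side.
    return albedo / math.pi * cos_i * (
        a + b * max(0.0, math.cos(phi_diff)) * math.sin(alpha) * math.tan(beta))


if __name__ == "__main__":
    albedo = 0.5  # arbitrary grey, roughly "concrete-ish"
    for deg in (0, 30, 60, 80):
        t = math.radians(deg)
        # Light and view at the same angle and azimuth (light behind the viewer).
        print(f"{deg:2d} deg: Lambert {lambert(albedo, t):.4f}  "
              f"Oren-Nayar {oren_nayar(albedo, 0.3, t, t, 0.0):.4f}")
```

At normal incidence with `sigma = 0.3`, the Oren-Nayar value is about 89% of Lambert's (the `a` term alone); toward grazing angles the `b` term pushes it back up. Whether that difference survives all the lamps, SSAO and post-filters is exactly the judgment call described above.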

So is it worth it... Hmmm... Eventually, probably yes. But as long as we need the performance elsewhere, techniques like BRDFs or slightly sharper shadows usually get traded for more noticeable things such as sexy blurs or being able to put more objects in the scene. The parallax effect is debatable too. Google for "Parallax Occlusion Mapping", and you'll see that the coolest screenshots always place the camera right next to a brick wall or on the floor. But how often will you really see surfaces from that close? When you get killed and fall to the ground maybe, but otherwise the parallax effect, and certainly its subtle "self-shadowing", is hard to notice. Yet it comes at a very high cost. That's why we only use it for rough surfaces with obvious height differences. It's a pretty cool effect, and hardware is starting to allow it. But if you run out of rendering power, POM would be one of the first things to ditch, I'd say.

Cool, POM (Parallax Occlusion Mapping) and shit. But do you still see the effect now? Same wall (below the sink), but zoomed back to a more realistic viewing distance...
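For those who haven't met POM before, its core is a short ray march through a depth map in tangent space, and that loop is where the cost comes from: every step is another texture sample, for every pixel the surface covers. Below is a minimal CPU sketch of the common linear-search variant, assuming a depth map where 0 is the surface and 1 the deepest point, a normalized tangent-space vector pointing from the surface toward the eye, and a hypothetical `depth_at(u, v)` callback standing in for a texture fetch. Real shaders typically refine the hit with an interpolation step and run a second march for the self-shadowing; neither is shown here.

```python
from typing import Callable, Tuple

DepthFn = Callable[[float, float], float]  # hypothetical stand-in for a texture fetch


def parallax_occlusion_uv(u: float, v: float,
                          view_ts: Tuple[float, float, float],
                          depth_at: DepthFn,
                          height_scale: float = 0.05,
                          num_layers: int = 32) -> Tuple[float, float]:
    """Return the shifted texture coordinate where the view ray meets the depth map.

    view_ts:  normalized tangent-space direction from the surface point to the eye
              (z > 0 for surfaces facing the viewer)
    depth_at: samples the depth map at (u, v); 0 = surface, 1 = deepest
    """
    vx, vy, vz = view_ts
    layer_step = 1.0 / num_layers
    # UV shift per layer; the full shift (all layers) corresponds to depth 1.
    du = vx / vz * height_scale / num_layers
    dv = vy / vz * height_scale / num_layers

    layer_depth = 0.0
    depth = depth_at(u, v)
    # Step into the surface until the ray is at or below the stored depth.
    for _ in range(num_layers):
        if layer_depth >= depth:
            break
        u -= du
        v -= dv
        layer_depth += layer_step
        depth = depth_at(u, v)  # one more texture sample per step
    return u, v
```

The shift it produces is a fraction of the texture, so as the wall moves away it covers fewer and fewer screen pixels, while each shaded pixel still pays for up to `num_layers` samples. That is the trade-off the caption above hints at.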

A second thing about maintaining our performance: when I do add an effect, I try to do it in a smart way. GPUs are powerful but hate getting interrupted. It's not like a multi-tasking woman who can phone, cook, chat, watch the kids, and clean the house at the same time (at least she thinks she can). It's best to create batches and do as much as possible in one call. Sort the data and try to render bigger chunks with as few "state changes" as possible. That's pretty hard these days, with so many different effects being pre-processed in the background. ShadowMaps need to be prepared, animated objects such as cloth or characters may need to stream their data to a VBO or texture first, you'll need depth, velocity and all kinds of image data before you can render SSAO or motion blur, and so on. What you see in the Tower22 screenshots is only a small portion of what really happens on the GPU. Before it starts rendering screen content, it draws shadowMaps, cubeMaps, mirrors (for water reflections), and plenty of other things.
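The "sort to avoid state changes" idea can be sketched in a few lines. This is a minimal sketch, assuming each draw call is described by a (shader, texture, mesh) tuple and that switching shaders costs more than switching textures, so the shader is the primary sort key. The draw calls are made up; a real renderer would usually pack this into an integer sort key and would also have to respect back-to-front order for transparent objects, which is ignored here.

```python
from typing import List, NamedTuple, Optional


class DrawCall(NamedTuple):
    shader: str   # hypothetical shader/program name
    texture: str  # hypothetical texture name
    mesh: str     # hypothetical mesh name


def count_state_changes(calls: List[DrawCall]) -> int:
    """Count shader binds plus texture binds needed to issue the calls in order."""
    changes = 0
    shader: Optional[str] = None
    texture: Optional[str] = None
    for call in calls:
        if call.shader != shader:
            shader = call.shader
            changes += 1
        if call.texture != texture:
            texture = call.texture
            changes += 1
    return changes


def sort_for_batching(calls: List[DrawCall]) -> List[DrawCall]:
    """Put identical shaders next to each other, then identical textures within a shader."""
    return sorted(calls, key=lambda c: (c.shader, c.texture))


if __name__ == "__main__":
    frame = [
        DrawCall("wall", "bricks", "corridor_a"),
        DrawCall("skin", "monster", "monster_1"),
        DrawCall("wall", "tiles", "bathroom"),
        DrawCall("wall", "bricks", "corridor_b"),
        DrawCall("skin", "monster", "monster_2"),
        DrawCall("wall", "tiles", "kitchen"),
    ]
    print(count_state_changes(frame), "->",
          count_state_changes(sort_for_batching(frame)))  # 11 -> 5
```

Same six draws, less than half the binds. Once calls sharing the same state sit next to each other, they are also the natural candidates for merging into bigger batches.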

To prevent getting slower than a tranquilized panda, these steps are combined where possible. With MRT (Multiple Render Targets), different types of data are rendered to different textures, but in the same pass. This means I only have to ask the GPU to draw the world geometry once. I also sort the steps by resolution so that the GPU has to do less switching between framebuffer targets of different resolutions. Just a few examples.
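Sorting passes by resolution has one catch: passes depend on each other (lighting needs the shadowMaps and the G-buffer first), so a plain sort isn't allowed. Below is a minimal greedy sketch, assuming each pass lists the passes it depends on. It does a topological ordering and, whenever several passes are ready, prefers one that renders at the resolution that is already bound. The pass names, resolutions and dependency graph are hypothetical, not Tower22's actual pipeline, and a greedy choice doesn't guarantee the minimum number of switches; it just avoids the obvious ping-ponging.

```python
from typing import Dict, List, Optional, Tuple

Resolution = Tuple[int, int]


def order_passes(resolution: Dict[str, Resolution],
                 depends_on: Dict[str, List[str]]) -> List[str]:
    """Topologically order passes, preferring the currently bound resolution on ties."""
    remaining = {name: set(depends_on.get(name, [])) for name in resolution}
    order: List[str] = []
    current: Optional[Resolution] = None
    while remaining:
        ready = [n for n, deps in remaining.items() if not deps]
        if not ready:
            raise ValueError("dependency cycle between passes")
        same_res = [n for n in ready if resolution[n] == current]
        chosen = (same_res or ready)[0]  # stay on the bound target if we can
        order.append(chosen)
        current = resolution[chosen]
        del remaining[chosen]
        for deps in remaining.values():
            deps.discard(chosen)
    return order


def count_switches(order: List[str], resolution: Dict[str, Resolution]) -> int:
    """Number of times the render-target resolution changes along the order."""
    return sum(1 for a, b in zip(order, order[1:]) if resolution[a] != resolution[b])


if __name__ == "__main__":
    res = {  # hypothetical passes, listed in a naive "as written" order
        "shadow_lamp1": (1024, 1024),
        "gbuffer": (1280, 720),  # one MRT pass: albedo, normals, depth...
        "shadow_lamp2": (1024, 1024),
        "ssao": (640, 360),
        "lighting": (1280, 720),
        "particles": (640, 360),
        "composite": (1280, 720),
    }
    deps = {
        "ssao": ["gbuffer"],
        "lighting": ["gbuffer", "shadow_lamp1", "shadow_lamp2", "ssao"],
        "particles": ["gbuffer"],
        "composite": ["lighting", "particles"],
    }
    print("naive:", count_switches(list(res), res))
    order = order_passes(res, deps)
    print("sorted:", count_switches(order, res), order)
```

For this made-up graph the naive order switches resolution 6 times and the greedy order 3 times, by grouping the two shadowMaps and running the low-resolution particles right after SSAO.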

Yet another way to speed things up is to save bandwidth by using compressed textures, or by keeping as much geometry as possible on the video card itself with VBOs (also for skinned/animated things). The latter means that the triangles and additional vertex data for, let's say, a monster model are already present on the video card. So we don't have to re-send all the triangles from system RAM to GPU memory (via the CPU) every cycle.
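Some back-of-the-envelope numbers show why both tricks matter. This is a minimal sketch, assuming S3TC/DXT block sizes (DXT1: 8 bytes per 4×4 texel block, DXT5: 16 bytes per block), ignoring mipmaps, and using a hypothetical monster model of 20,000 vertices with a made-up 48-byte vertex layout that would be re-sent every frame at 30 fps.

```python
def texture_bytes(width: int, height: int, fmt: str) -> int:
    """Size of a single mip level in bytes for a few common formats."""
    if fmt == "RGBA8":
        return width * height * 4  # 4 bytes per texel, uncompressed
    blocks = ((width + 3) // 4) * ((height + 3) // 4)  # S3TC works on 4x4 blocks
    if fmt == "DXT1":
        return blocks * 8   # 8 bytes per block = 4 bits per texel
    if fmt == "DXT5":
        return blocks * 16  # 16 bytes per block = 8 bits per texel
    raise ValueError(f"unknown format {fmt}")


def resend_bytes_per_second(vertex_count: int, stride: int, fps: int) -> int:
    """Bytes pushed to the GPU per second if a mesh is re-uploaded every frame."""
    return vertex_count * stride * fps


if __name__ == "__main__":
    for fmt in ("RGBA8", "DXT5", "DXT1"):
        print(f"1024x1024 {fmt}: {texture_bytes(1024, 1024, fmt) // 1024} KiB")
    # Hypothetical monster: 20,000 vertices of 48 bytes, re-sent at 30 fps.
    print(resend_bytes_per_second(20_000, 48, 30) / 1e6, "MB/s without a VBO")
    # With the mesh resident in a VBO and skinned on the GPU, only the bone
    # matrices change per frame, e.g. 60 bones * 16 floats * 4 bytes.
    print(60 * 16 * 4, "bytes per frame for bone matrices")
```

A 1024×1024 texture shrinks from 4 MiB to 1 MiB (DXT5) or 512 KiB (DXT1), and keeping the mesh resident replaces roughly 28.8 MB/s of vertex traffic with a few kilobytes of bone data per frame.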

Texture with particles, rendered in the background at a lower resolution

Whatever you do, don't do it without thinking. Often there are multiple ways to achieve the same thing. So before I implement something, I usually ask around (on Gamedev, for example) for some tips. One last example: particles. The old-fashioned way is to render a large amount of (transparent) points or billboard sprites (quads) into your world. A whole bunch of them forms a cloud, blood spray, smoke, puke, waterfall or whatever you had in mind. But because so many quads are drawn on top of each other, the GPU easily suffers from fillrate problems. The same screen pixel gets shaded dozens of times, and that hurts. One trick to reduce the amount of calculations is to draw the particles offscreen, into a smaller texture. Then at the end, draw the texture that contains all particles on top of your scene. Since the offscreen texture has a lower resolution, fewer pixel calculations have to be done. Of course the quality (texture sharpness) also drops, but for foggy/blurry stuff like fart gases or smoke, this is actually a good thing. Two portions of happiness for the price of one.
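The fillrate argument is easy to check with a toy software rasterizer. This is a minimal sketch, assuming square, axis-aligned particle sprites with one constant alpha and one smoke color, and a particle layout that is made up. It counts how many fragments get shaded when splatting at full resolution versus into a buffer `scale` times smaller per axis, then upsamples the small buffer with nearest-neighbour lookups and blends it over the scene. A real implementation would use bilinear filtering and usually a depth-aware upsample to avoid halos around foreground objects.

```python
from typing import List, Tuple

Particle = Tuple[int, int, int]  # hypothetical: (x, y, size) in full-resolution pixels
Image = List[List[float]]


def splat_particles(width: int, height: int, particles: List[Particle],
                    alpha: float, scale: int) -> Tuple[Image, int]:
    """Blend particles into a buffer `scale` times smaller per axis.

    Returns the accumulated coverage (0 = no smoke) and the number of shaded
    fragments, i.e. how many times any buffer pixel was touched.
    """
    if width % scale or height % scale:
        raise ValueError("width and height must be multiples of scale")
    w, h = width // scale, height // scale
    buf = [[0.0] * w for _ in range(h)]
    fragments = 0
    for x, y, size in particles:
        x0, y0, s = x // scale, y // scale, max(1, size // scale)
        for py in range(max(0, y0), min(h, y0 + s)):
            for px in range(max(0, x0), min(w, x0 + s)):
                # "over" blending of a constant-alpha sprite onto the buffer
                buf[py][px] = alpha + buf[py][px] * (1.0 - alpha)
                fragments += 1
    return buf, fragments


def composite(scene: Image, smoke: Image, scale: int, smoke_color: float = 0.8) -> Image:
    """Upsample the smoke buffer (nearest neighbour) and blend it over the scene."""
    return [[smoke_color * smoke[y // scale][x // scale]
             + color * (1.0 - smoke[y // scale][x // scale])
             for x, color in enumerate(row)]
            for y, row in enumerate(scene)]


if __name__ == "__main__":
    # A dense 7x7 grid of overlapping 16-pixel puffs on a 64x64 "screen".
    puffs = [(x, y, 16) for x in range(0, 49, 8) for y in range(0, 49, 8)]
    for scale in (1, 2, 4):
        _, frags = splat_particles(64, 64, puffs, alpha=0.3, scale=scale)
        print(f"scale {scale}: {frags} fragments shaded")
    smoke, _ = splat_particles(64, 64, puffs, alpha=0.3, scale=2)
    final = composite([[0.2] * 64 for _ in range(64)], smoke, scale=2)
```

Halving the resolution on each axis cuts the shaded fragments to a quarter, and the blockier, softer result is exactly the "two portions of happiness" for smoke-like effects.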

Don't know what speed the desktop computer will reach when we record the next tech-demo movie. But at the end of the day, I'm focused on making Tower22, not a super engine. Let's first build a car that drives properly, rather than a car that drives 300 miles per hour. Besides, let those lazy Silicon Valley folks bake better video cards instead :p Nah, of course you can't totally neglect performance in a game engine. If that car eventually needs to drive faster than 150 mph, you shouldn't start with a Fred Flintstone chassis prototype. But squeezing out milliseconds will be future work.

Thanks for the post, I think it's still pretty fast. I have been using CG and it's been really slow, but then again I haven't tried batching yet either, only geometry batching using texture atlases for static objects without special effects.

I'm working on a new engine for myself to support better sorting/batching, taking the Horde3D approach (ubershaders).

I love the idea about the particles. I was thinking about offline/low-resolution rendering for objects in the far distance, but hadn't thought about particles yet.

Can't wait to see your next tech-demo, it should look awesome.