This (beginner's) tutorial is written for Engine22 users, but also for anyone who just wants to know more about making games, engines, and 3D graphics. More tutorials will follow for sure, and can be found at Engine.Fuel22.net/Help/ (at the time of writing, the link isn't up yet!). Have fun!

Remember Vectors from physics class? Don't worry, neither do I. No but seriously, I'm a loser when it comes to math. Some parents say their kid is like a sponge. I'm more like a bowling ball, without holes. If I don't apply it immediately, my brain does a toilet flush and the gained knowledge exits the body through farts or nose pickles.

“Apply it immediately”... what healthy 16-year-old applies Pythagoras or the Fresnel lens formula to his daily problems? Only Newton's law of gravity seems to apply sometimes, when you fall off your bike drunk. E=mc² in your face, stupid. So, there we are. In a classroom, thinking about the next part of Half-Life (1), staring at girls' boobs, drawing idiotic doodles on a piece of paper, waiting until you can finally leave that sweaty, moldy, reeking place. Somewhere in the background, an old teacher with glasses is drawing "arrows" on the chalkboard. Whatever, man.

But who would have thought that those arrows are called "vectors", and that I would use them on a daily basis, 17 years later? Just as realistic as using the leapfrog you learned in gym class.

As said, I'm just not that good at math, so I won't be able to explain all the fine details of the math behind vectors. Then again, not being a professor, I can hopefully teach you a few things in human language. While I put on my glasses and walk to the chalkboard, please stop chewing bubble-gum, stop doodling in your notebook, and pay attention, class. It's not that hard really, and if you want to make a game, you'd better stop watching your neighbour's boobs and focus on the chalkboard. Ahum:

Points

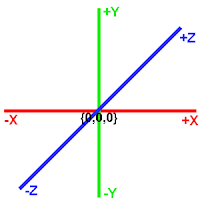

Earth is flat, and coordinates in space are made of 3 components: x, y and z. Don't believe me? Look at your finger. Move it to the left. Now it moved in a negative X direction; your finger's X coordinate decreased. Move it up. Your finger's Y coordinate increased. And how about that Z component then? Simple, just move your finger away from you. Its Z coordinate (Z not from Zorro, but for "depth") increased. From your perspective, at least. In the doorway of your bedroom, your mom is looking at you now, wondering what the hell you're doing. From her perspective, that finger moved in different directions, and she is about to call an ambulance.

So, a position, also called a "point", can be defined as {x,y,z}: a 3-component struct in programming terms, like this:

```pascal
// pseudo Engine22 notation
type
  eVec3 = record
    x, y, z : eFloat;
  end;
```

And a point can be in different "spaces". In general, we have "local space" (or "model space") and "world space". The finger example explained the coordinates relative to your own position. Move it up, and Y increases. If you were the centre of the universe (as your mom always says), your body would be at {x:0, y:0, z:0}. But you're not. For someone in China, on the other side of the world, your finger is going down instead of up.

That is... if the Earth were the centre of the universe. Which it isn't either. In real life, coordinate systems are always sort of local. It really depends on what you take as the centre point, and also on which directions count as "forward", "up" and "left". If you hang upside-down like a bat the whole day, "up" might get a different definition.

Games are a bit easier. We just take a random point as the centre. Or well, random... On a GTA map, you may decide the bottom/left corner, at sea level, would be {0,0,0}. For Tower22, the centre of the ground floor is the centre of the "world". Game-world. When going up, Y goes higher. But there are also games/3D programs that take Z as "up". It's arbitrary, and it doesn't really matter, as long as your 3D models and programming math all follow the same rules.

In a game context, "local" coordinates usually apply on/within a 3D model. So we make a large outdoor area, and we place assets in it: cars, light-posts, garbage containers, trees, cats, et cetera. Each object gets a "world coordinate". However, earlier, while creating these objects (say we were modelling a car), we didn't know if, where and how these objects would be placed within the world. We just pretend the world is gone, and now the centre of the car itself becomes the "centre of the world". So, the handbrake would probably be somewhere near that centre. The front bumper is placed forward from the centre, thus having a larger Z coordinate. The rear bumper, "behind" us, would get a negative Z coordinate.

Now when we place this car in our world, we can rotate it 180 degrees. In local car space, the handbrake is still the centre, and the bumper is still forward at a higher Z coordinate. But in world coordinates, you'll get a whole different picture.
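To make the car example a bit more concrete, here is a minimal sketch in plain Python (not Engine22 code) that converts a local point into a world point: first rotate it around the vertical axis, then add the object's world position. It assumes Y is "up" and only handles a "turn" around Y; the function name `local_to_world` and the numbers are made up for illustration.

```python
import math

def local_to_world(local, yaw_degrees, world_pos):
    """Rotate a local (x, y, z) point around the Y ('up') axis, then move it to world_pos."""
    a = math.radians(yaw_degrees)
    x, y, z = local
    # Rotation around the Y axis; Y itself (the height) does not change
    rx = x * math.cos(a) + z * math.sin(a)
    rz = -x * math.sin(a) + z * math.cos(a)
    # Translate: add the object's position in the world
    return (rx + world_pos[0], y + world_pos[1], rz + world_pos[2])

bumper_local = (0.0, 0.5, 2.0)   # 2 m in front of the handbrake (the car's local centre)
car_pos = (10.0, 0.0, 50.0)      # where the car stands in the world

print(local_to_world(bumper_local, 0, car_pos))    # (10.0, 0.5, 52.0)
print(local_to_world(bumper_local, 180, car_pos))  # approximately (10.0, 0.5, 48.0)
```

Note how the bumper stays at local Z = +2 in both cases, while its world Z goes from 52 to 48 once the car is turned around.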

So, in practice, every object in your world gets an "absolute" world coordinate. Physics, movement, placement, and all that kind of stuff will be calculated with world positions, world vectors, and world matrices (more about that later). But sometimes, you also want to do something in local space, or convert from local to world space, or vice versa. Example: your NPC has a sniper rifle, and wants to blow off your head. That happens. Your "head" is a sub-part of your "player-body" object.

The NPC knows your player's world coordinate. But the exact head position depends on what the player is doing. If he is in a "prone" pose, the head will be low, near the ground. If he is licking his own balls, his head will be somewhere in the middle of the whole body object. The body object, which drives such animations, knows the local head position. In order to make a good shot, blowing off your head while you were walking on your hands with your legs around your neck, the local head coordinate needs to be transformed to world space.

Jesus, that sounds hard. Yeah, it kinda is, and I won't explain the math now. But to do so, you'll need vector & matrix transformation calculations, which are fortunately very common in any game engine, so you don't have to reinvent the wheel. For now I'll just illustrate what can be done with points. And I think... that I'm pretty much done. But to finish this story, let's throw in some random (Engine22) code examples to give you an idea:

```pascal
// Misc. Point code

function makeYourPointPlease( const x,y,z : eFloat ) : eVec3;
begin
  result.x := x;
  result.y := y;
  result.z := z;
end;

procedure movePointToTheLeft( var pnt : eVec3; const distance : eFloat );
begin
  pnt.x := pnt.x - distance;
end;

function distanceBetween2Points( const A, B : eVec3 ) : eFloat;
var x,y,z : eFloat;
begin
  x := A.x - B.x;
  y := A.y - B.y;
  z := A.z - B.z;
  result := Sqrt( x*x + y*y + z*z );
end;
```

Vectors

A point is just a... point, somewhere in a space. And you mainly need them for:

- A.I. navigation
- Physics (movement, jumping, collision detection, ...)
- Telling the video card WHERE to draw
- Animations

Now to the Vector. In terms of programming, we can notate it the same way. In fact, points and vectors are often the same struct or class in engines. In Engine22, a "vector" can be a vector (duh), but also a point or an RGB colour.

Anyhow, vectors can be visualized as arrows. Yes, that old man with glasses at the chalkboard wasn't fooling around after all. A vector defines one or two things:

- Direction: the arrow points to the left, upwards, a bit backwards, ...
- Force: the vector length. The longer the vector arrow, the faster it moves in that direction.

Note that "normalized" vectors do not define a Force or Strength, only a direction. A normalized vector is made in such a way that its Force/Strength/Length is always 1. So a vector pointing to the left would be noted as {x:-1, y:0, z:0}. An upwards vector would be {x:0, y:+1, z:0}. Polygon "normals" (the direction a polygon is facing) are an example of normalized vectors.
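A minimal Python sketch of what "normalizing" means: divide each component by the vector's length. The helper names are hypothetical (they are not Engine22's `norm()` / `len()`), and returning a zero vector for zero-length input is just one possible choice.

```python
import math

def length(v):
    """Length ('force' / 'strength') of a 3D vector."""
    return math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)

def normalize(v):
    """Return a vector pointing the same way, but with length 1."""
    n = length(v)
    if n == 0.0:
        # A zero vector has no direction; returning it unchanged is one common choice
        return (0.0, 0.0, 0.0)
    return (v[0] / n, v[1] / n, v[2] / n)

velocity = (-3.0, 0.0, 4.0)   # 5 m/s, to the left and forward
print(length(velocity))       # 5.0
print(normalize(velocity))    # (-0.6, 0.0, 0.8)
```

The normalized result keeps the direction (left and forward) but throws away the "5 m/s" part; multiply it by a speed to get a force vector again.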

The Matrix

Normalized vectors are used a lot for defining directions in drawing, physics, and rays in graphics (shaders). You see, objects usually don't only have a position, but also a certain direction. Looking from a helicopter perspective, you could rotate a car 360 degrees. This angle can be encoded into a direction vector. Although you would need 2 more vectors for the full picture, as you could also roll and flip this car, in case it crashes or something. This is what matrices are used for. A (4x4) matrix defines 5 things:

- turn / roll / pitch: three rotation vectors (the object's own right, up and forward axes)
- position
- scale (stored as the lengths of those three axis vectors)

Basically, a matrix is constructed from multiple vectors. In Engine22, every asset has a matrix. When you place a new object into your world, you define its position, you can rotate it, and optionally scale (shrink / enlarge) it. In other words, you'll be adjusting its matrix while moving it around with your mouse or keyboard.

This same matrix is fed into the physics system, so it knows where everything is, and shaders also get this matrix so they can position your object correctly on the screen (if visible at all).
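For the curious, a hedged Python sketch of how such a 4x4 matrix could be laid out and used: the three (scaled) axis vectors fill the first three columns, the position fills the last column, and multiplying with (x, y, z, 1) turns a local point into a world point. This is one common layout; Engine22, OpenGL or DirectX may store the same numbers transposed (row-major vs. column-major), so don't copy the memory layout blindly. The function names are illustrative.

```python
def make_matrix(right, up, forward, position, scale=1.0):
    """Build a 4x4 matrix (list of rows) from three axis vectors, a position and a uniform scale.

    Columns 0..2 hold the scaled right/up/forward axes, column 3 holds the position.
    """
    r, u, f = right, up, forward
    return [
        [r[0] * scale, u[0] * scale, f[0] * scale, position[0]],
        [r[1] * scale, u[1] * scale, f[1] * scale, position[1]],
        [r[2] * scale, u[2] * scale, f[2] * scale, position[2]],
        [0.0, 0.0, 0.0, 1.0],
    ]

def transform_point(m, p):
    """Local point -> world point: multiply the matrix with (x, y, z, 1)."""
    x, y, z = p
    return tuple(m[i][0] * x + m[i][1] * y + m[i][2] * z + m[i][3] for i in range(3))

# A car turned 180 degrees around Y (its forward axis now points to world -Z),
# standing at (10, 0, 50) and scaled up 2x
car = make_matrix(right=(-1, 0, 0), up=(0, 1, 0), forward=(0, 0, -1),
                  position=(10, 0, 50), scale=2.0)
print(transform_point(car, (0, 0.5, 2)))  # (10.0, 1.0, 46.0)
```

Rotation, scale and position all come out of one multiplication, which is exactly why engines and shaders pass matrices around instead of loose angles.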

And back to Vectors

Matrix math is quite a bit harder than vector math, so let's save that for another time, and continue this tutorial with some practical programming examples that show how these direction vectors can be used. Let's move, bitch:

```pascal
procedure movePointUpwards( var targetPoint : eVec3; const unitsUp : eFloat );
begin
  targetPoint.y := targetPoint.y + unitsUp;
  // Note it would move down if you give a negative number
end;

procedure movePoint( var targetPoint : eVec3; const forceVector : eVec3 ); overload;
begin
  // Assume the forceVector is NOT normalized,
  // thus having a length/strength as well
  targetPoint.x := targetPoint.x + forceVector.x;
  targetPoint.y := targetPoint.y + forceVector.y;
  targetPoint.z := targetPoint.z + forceVector.z;
end;

procedure movePoint( var targetPoint : eVec3; const direction : eVec3; const speed : eFloat ); overload;
begin
  // Direction vector is normalized here, multiply with speed
  targetPoint.x := targetPoint.x + direction.x * speed;
  targetPoint.y := targetPoint.y + direction.y * speed;
  targetPoint.z := targetPoint.z + direction.z * speed;
end;
```

Units in 3D space

So... if we move a point "20" to the left... then how far did it move exactly? My old physics teacher would fly into a rage whenever someone wrote down a number without its corresponding unit. "The mass of this block would be 10, mister." "10 what?! 10 ounces? 10 grams? 10 donkeys?!" "Kilograms, mister." "Then say so." And of course, he was right. But still an asshole.

The guys at OpenGL or DirectX didn't listen very well to their teachers, though; there is no predefined unit for 3D coordinates. They basically say: "You figure it out." So, if we move that point "20" to the left, we have to decide ourselves whether those are inches, meters, nautical miles, or donkeys. In Engine22, "1" = "1 meter". But in another program it could just as well be millimeters. So it's important to pick one standard for your unit system, and to scale imported models that come from a different 3D package up or down where needed. Lightwave also uses meters, but 3D Max uses centimetres if I'm not mistaken, so an imported model would be 100x bigger if you forget to downsize it.
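A small sketch of that import-time scaling in Python, assuming positions arrive as plain (x, y, z) tuples. The unit table and `import_vertices` are hypothetical; the centimetre factor matches the (hedged) 3D Max example above, and the inch factor is the exact 0.0254 m.

```python
# Hypothetical table: how many meters one unit of the source file represents
UNIT_TO_METERS = {"m": 1.0, "cm": 0.01, "mm": 0.001, "inch": 0.0254}

def import_vertices(vertices, source_unit):
    """Scale imported (x, y, z) positions so that 1 unit = 1 meter, like Engine22 expects."""
    factor = UNIT_TO_METERS[source_unit]
    return [(x * factor, y * factor, z * factor) for (x, y, z) in vertices]

door = [(0.0, 0.0, 0.0), (90.0, 210.0, 0.0)]  # a door modelled in centimetres
print(import_vertices(door, "cm"))             # roughly 0.9 m wide and 2.1 m high
```

Doing this once while importing keeps the rest of the engine free of unit conversions.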

```pascal
procedure checkInput();
var movementSpeed : eFloat;
begin
  movementSpeed := 3.0; // meters

  // Move our player with the arrows, over the X and Z axis
  if ( keyboard.leftArrowPressed ) then
    movePoint( player.position, vec3( -movementSpeed, 0, 0 ) );
  if ( keyboard.rightArrowPressed ) then
    movePoint( player.position, vec3( +movementSpeed, 0, 0 ) );
  if ( keyboard.downArrowPressed ) then
    movePoint( player.position, vec3( 0, 0, -movementSpeed ) );
  if ( keyboard.upArrowPressed ) then
    movePoint( player.position, vec3( 0, 0, +movementSpeed ) );
end;
```

Stop. DeltaTime!

The procedure above has a little problem. Assume that your game runs at 60 frames per second, and you check input every cycle. Thus "checkInput" would be called 60 times per second. Since we move "3.0 meters" every time, your player will go into warp drive; holding the key for 1 second would move him 3.0 * 60 = 180 meters, so 180 meters per second! That's a bit too much, don't you think?

Now we could simply reduce the "movementSpeed" to avoid superhuman speeds. 0.03, for example, would lead to 0.03 x 60 = 1.8 meters per second.

But this still stinks. What if you run your game on a slower computer that can only reach a lousy 20 FPS at times? 0.03 x 20 = 0.6 meters per second. In the worst case, your FPS fluctuates because you're downloading internet porn at the same time, causing hitches. Or how about this? You buy a quantum computer that easily reaches 1000 FPS. Now suddenly your guy moves at 30 meters per second!

Ever tried old DOS games on an emulator? You may have noticed that some games seem to play in fast-forward. That's because they didn't guard their speeds. One trick is to introduce a maximum FPS. Another (better) trick is to calculate "DeltaTime". In Engine22, most "update()" functions get a "DeltaSecs" argument: the elapsed time in seconds between the current and the previous cycle. So if we're at a steady 60 FPS, DeltaSecs would be 1/60 = 0.0166667. Now check this:

```pascal
procedure checkInput( const deltaSecs : eFloat );
var movementSpeed : eFloat;
begin
  movementSpeed := 3.0 * deltaSecs; // 3 meters PER SECOND, scaled to the time this cycle took

  // Move our player with the arrows, over the X and Z axis
  if ( keyboard.leftArrowPressed ) then
    movePoint( player.position, vec3( -movementSpeed, 0, 0 ) );
  if ( keyboard.rightArrowPressed ) then
    movePoint( player.position, vec3( +movementSpeed, 0, 0 ) );
  if ( keyboard.downArrowPressed ) then
    movePoint( player.position, vec3( 0, 0, -movementSpeed ) );
  if ( keyboard.upArrowPressed ) then
    movePoint( player.position, vec3( 0, 0, +movementSpeed ) );
end;
```

Problem fixed. We multiply our original "3" with the elapsed time, so it becomes a small number: 0.05 if the FPS is 60. And how much is 60 times 0.05? Exactly, 3. We moved 3 meters in one second.
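To see the difference in numbers, here is a Python sketch that simulates one second of holding the arrow key at various frame rates, with and without delta time. It's a simplified model (a perfectly steady FPS, movement along X only), not Engine22's update loop.

```python
def simulate_walk(fps, speed=3.0, use_delta_time=True, seconds=1.0):
    """Return how far (in meters) the player moved along X after `seconds` at a steady `fps`."""
    x = 0.0
    delta_secs = 1.0 / fps                 # elapsed time per frame
    for _ in range(round(fps * seconds)):  # one checkInput() call per frame
        if use_delta_time:
            x += speed * delta_secs        # 3 meters PER SECOND, scaled to this frame
        else:
            x += speed                     # 3 meters PER FRAME: the warp-drive bug
    return x

for fps in (20, 60, 1000):
    print(fps, simulate_walk(fps, use_delta_time=False), round(simulate_walk(fps), 6))
```

Without delta time the distance grows with the frame rate (60, 180 and 3000 meters); with it, every run ends up at about 3 meters.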

Vectors in Engine22

You saw some of the basics: how to set a point, apply some movement, and a bit about local versus world space. There is a lot more you can do with vectors and matrices, though. That's why one of the basic elements every game engine should have is a vector library. And so does Engine22, of course. You already saw the "eVec3" type a few times above, which stands for "eVector3". That thing is used all over the place, and has a bunch of handy helper functions.

eVec3 is just a record with 3 floats. Note there are also integer/byte/float32 variants, as well as an eVec4 type that has an extra W component. One of the reasons Engine22 won't work in Delphi 7 anymore is that I made thankful use of record operators & functions, which weren't possible in the old days. This means you can call functions and do math with vectors:

```pascal
var v1, v2, vResult, lookDir, targetPosition : eVec3;
    s : string;
    x, distance, len : eFloat;
begin
  vResult := v1 + v2;              // Sums up (v1.x + v2.x, y+y, z+z)
  vResult := v1 - v2;              // Subtracts (v1.x - v2.x, ...)
  vResult := v1 * v2;              // Multiplies per component (NOT a cross product!)
  vResult := vec3( 10, -4.2, 90 ); // Constructor
  vResult := v1.norm();            // Get normalized vector
  s := v1.toString();
  vResult.fromString( '10 -4,2 90' );
  x := v1.dot( v2 );               // Dot product
  distance := v1.dist( v2 );       // Distance between v1 and v2
  lookDir := v1.lookat( targetPosition ); // Gets direction towards target
  len := v1.len();                 // Get vector length/strength
  if ( v1 = v2 ) then vectorsAreEqual;
  if ( v1 > v2 ) then v1IsStrongerThanV2; // Compare forces
  ...
end;
```
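For readers who want to peek under the hood, here are plain Python versions of a few of those operations (dot, cross, and a look-at direction). These are generic textbook formulas, not Engine22's source; that Engine22's `lookat` returns a normalized direction is an assumption on my side, and the function names are illustrative.

```python
import math

def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a, b):
    """Dot product: for unit vectors 1 = same direction, 0 = perpendicular, -1 = opposite."""
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def cross(a, b):
    """Cross product: a vector perpendicular to both a and b."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def look_at(origin, target):
    """Normalized direction from origin towards target."""
    d = sub(target, origin)
    n = math.sqrt(dot(d, d))
    if n == 0.0:
        raise ValueError("origin and target are the same point")
    return (d[0] / n, d[1] / n, d[2] / n)

x_axis, y_axis = (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)
print(dot(x_axis, y_axis))             # 0.0, so they are perpendicular
print(cross(x_axis, y_axis))           # (0.0, 0.0, 1.0)
print(look_at((0, 0, 0), (0, 0, 10)))  # (0.0, 0.0, 1.0)
```

The dot product is handy for "is the player in front of or behind this NPC?" checks, the cross product for building a third axis out of two others.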

Another nice feature is that you can use the same vectors for RGB (or eVec4 for RGBA) colors. Instead of typing "vector.x", you can type "vector.r" as well. It does the exact same thing, but reads more naturally. Vector colors are often used as input parameters for shaders and graphics. Note that 0 stands for 0 (black) and 1 for 255 (full intensity) here, as we use a floating point notation instead of bytes.
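Converting between the float notation and the classic 0..255 bytes is a one-liner each way. A Python sketch (clamping out-of-range values is a choice made here, not necessarily what Engine22 does):

```python
def color_to_bytes(rgb):
    """Float colour (0.0 .. 1.0 per channel) -> 0..255 bytes, clamping out-of-range values."""
    return tuple(round(max(0.0, min(1.0, c)) * 255) for c in rgb)

def bytes_to_color(rgb_bytes):
    """0..255 bytes -> float colour (0.0 .. 1.0 per channel)."""
    return tuple(b / 255.0 for b in rgb_bytes)

print(color_to_bytes((1.0, 0.5, 0.0)))  # (255, 128, 0), an orange
print(bytes_to_color((255, 0, 255)))    # (1.0, 0.0, 1.0), magenta
```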

And about 3D models, did you know that...

3D models (the stuff you make in 3D Max, Lightwave, Blender, Maya, ...) are basically just arrays of vectors? A polygon is made of (typically 3) "vertices", where a vertex could have a position, a texture coordinate, a normal direction, maybe a tangent, maybe a weight. So, writing it down:

```pascal
type
  Vertex = record
    position     : eVec3;
    textureCoord : eVec2; // Only 2 coordinates (U and V)
    normal       : eVec3;
    tangent      : eVec3;
    weight       : eVec3;
  end;

  ModelMesh = class
    vertexCount : eInt;
    vertices    : array of Vertex;
  end;
```

It's not exactly what the Engine22 VertexArray classes look like, but it's not far off either. When loading a 3D model, you'll be making arrays of vectors. When rendering the model, you'll be pushing those arrays to your video card. Just keep that in mind.
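To illustrate "arrays of vectors", here is a Python sketch that interleaves the position, texture coordinate and normal of each vertex into one tightly packed float32 buffer, the kind of blob you would later hand to something like OpenGL's `glBufferData`. The layout (8 floats, 32 bytes per vertex) is an assumption for this example; tangents and weights are left out to keep it short.

```python
import struct
from dataclasses import dataclass

@dataclass
class Vertex:
    position: tuple   # (x, y, z)
    tex_coord: tuple  # (u, v)
    normal: tuple     # (nx, ny, nz), normalized

FLOATS_PER_VERTEX = 3 + 2 + 3
STRIDE = FLOATS_PER_VERTEX * 4  # bytes per vertex (a float32 takes 4 bytes)

def pack_vertices(vertices):
    """Interleave all vertices into one little-endian float32 buffer: x y z u v nx ny nz, x y z ..."""
    data = bytearray()
    for v in vertices:
        data += struct.pack(f"<{FLOATS_PER_VERTEX}f", *v.position, *v.tex_coord, *v.normal)
    return bytes(data)

triangle = [
    Vertex((0.0, 0.0, 0.0), (0.0, 0.0), (0.0, 0.0, 1.0)),
    Vertex((1.0, 0.0, 0.0), (1.0, 0.0), (0.0, 0.0, 1.0)),
    Vertex((0.0, 1.0, 0.0), (0.0, 1.0), (0.0, 0.0, 1.0)),
]
buffer = pack_vertices(triangle)
print(len(buffer), "bytes,", STRIDE, "bytes per vertex")
```

Interleaving keeps all data of one vertex next to each other in memory, which is a common (though not the only) choice for vertex buffers.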


There is so much stuff you can do with them. The E22_Util.Base.Vector unit is one of the most commonly included units. And even if you're not a math genius, you'll get comfortable with them quickly.

To conclude, our deadly sniper headshot:

```pascal
procedure sniperHeadShot( targetEntity : eES_Entity; const deltaSecs : eFloat );
const bulletSpeedMS = 900.0; // assumed bullet speed, in meters per second
var localHeadPos    : eVec3;
    worldHeadPos    : eVec3;
    localGunPos     : eVec3;
    worldGunPos     : eVec3;
    targetDirection : eVec3;
    targetDistance  : eFloat;
    bulletPos       : eVec3;
    traveledDist    : eFloat;
    collided        : boolean;
begin
  // Get absolute head position
  localHeadPos := targetEntity.getLocalPoint( HEAD );
  worldHeadPos := targetEntity.getMatrix().localPointToAbsolute( localHeadPos );

  // Get our gun position
  localGunPos := sniper.getLocalPoint( GUN_NOZZLE );
  worldGunPos := sniper.getMatrix().localPointToAbsolute( localGunPos );

  // Get the (world) direction and distance between our gun and the head
  worldGunPos.distAndDirectionTo( worldHeadPos, targetDirection, targetDistance );

  // Simulate bullet path
  bulletPos    := worldGunPos; // Start here
  traveledDist := 0.0;         // Traveled distance so far
  collided     := false;
  while ( not collided ) and ( traveledDist < targetDistance ) do
  begin
    // Check if our path is clear
    collided := world.rayTrace( bulletPos, targetDirection, bulletSpeedMS * deltaSecs );
    // Move forward, with the speed of a bullet
    bulletPos    := bulletPos + targetDirection * bulletSpeedMS * deltaSecs;
    traveledDist := traveledDist + bulletSpeedMS * deltaSecs;
  end; // while

  if ( not collided ) and ( traveledDist >= targetDistance ) then
  begin
    // Reached the target without hitting anything else first
    targetEntity.dieMotherFucker();
  end;
end;
```

Of course, that's bollocks code, but it does give an impression of how to work with vectors. The vector-related functions shown do exist in E22, at least.
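The same idea as a self-contained Python sketch: walk from the gun towards the head in fixed steps, and ask a collision query whether each small segment is blocked. `is_blocked` and `wall_at_z50` are hypothetical stand-ins for a real ray-trace against the world, and the speed and timestep are example values. Computing each position from the start point (instead of adding steps over and over) avoids piling up rounding errors, and the last step is clamped so the bullet stops exactly at the target.

```python
import math

def shoot(gun_pos, head_pos, bullet_speed, step_secs, is_blocked):
    """Simulate a bullet flying from gun_pos to head_pos in fixed time steps.

    is_blocked(start, end) is a hypothetical collision query for one path segment.
    Returns True if the bullet reaches the head without hitting anything on the way.
    """
    offset = [h - g for h, g in zip(head_pos, gun_pos)]
    target_dist = math.sqrt(sum(c * c for c in offset))
    if target_dist == 0.0:
        return True                                 # already there
    direction = [c / target_dist for c in offset]   # normalized direction
    step_len = bullet_speed * step_secs             # meters travelled per step
    steps = math.ceil(target_dist / step_len)
    prev = tuple(gun_pos)
    for i in range(1, steps + 1):
        traveled = min(i * step_len, target_dist)   # clamp the last step to the target
        cur = tuple(g + c * traveled for g, c in zip(gun_pos, direction))
        if is_blocked(prev, cur):
            return False                            # hit a wall (or something) first
        prev = cur
    return True

def wall_at_z50(start, end):
    """Hypothetical wall: blocks any segment that crosses the plane z = 50."""
    return min(start[2], end[2]) <= 50.0 <= max(start[2], end[2])

print(shoot((0, 0, 0), (0, 0, 100), 900.0, 1 / 60, wall_at_z50))         # False
print(shoot((0, 0, 0), (0, 0, 100), 900.0, 1 / 60, lambda a, b: False))  # True
```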

Thanks for this tutorial. Even after reading some OpenGL books I still have problems understanding how to scale, move and rotate my objects. They often end up in the wrong position, damn, this is frustrating :)

The real magic is usually done with matrices (or quaternions), which are quite a bit harder than vectors. Can't say I have a 100% understanding of them either, but the good news is that once you've made (or found) a library, you're good to go for most things.

Well, glad I could help a bit. Let's think about a topic for the next tutorial!

Yeah, the problem with me is that I feel uncomfortable when I'm using something I do not understand completely. Are quaternions 4x4 matrices?

Next topic, hmm... it's probably too far ahead, but I have problems understanding how to apply more than one texture to a polygon. Or how to optimise 3D terrain (and objects) that are far away? Or how to make a terrain that has more than one level of height (a cave, or a bridge for example)? I could go on like this forever, but I know that those are not the "core" concepts :)

There are a billion things that can be explained in the wonderful world of (3D) games / engines.

As for using multiple textures, (fragment) "shaders" are the one and only answer nowadays. With the old fixed OpenGL pipeline, you could activate multiple texture units and bind textures. But with the fixed pipeline being ditched, shaders took over.

As for optimizing terrains, again, tessellation shaders are the modern answer. A tessellation shader can measure the distance between you (the camera) and the quad/triangle to render, and sub-divide it X times, as well as read height from a texture or do interpolation. Nearby stuff gets tessellated a lot, distant stuff not.

Now, I haven't really implemented terrains in Engine22 yet, as Tower22 is mainly an indoor game. But here too, several tricks are used to gain speed by reducing detail for distant "sectors" (rooms). Each entity (a wall, floor, furniture, monster, ...) can hide itself after a certain distance, or switch to a lower-polygon model. The materials applied on distant objects also use a simplified variant. For example, effects like normalMapping can be disabled for distant entities.

Multiple levels (storeys): I sense you're trying to render a terrain using a heightMap? With a heightMap alone, it's more or less impossible to do multiple levels. Games often use a hybrid of heightMaps, pre-fabs and other mapping techniques. As mentioned above, Engine22 splits up the world into "Sectors" (rooms, corridor sections, a patch of ground, ...). These sectors are usually made in a modeller program like Blender/Max/Lightwave/Maya, so the shape can be anything really, terrains included. When imported into the game, each sector has a list of entities (walls, floors, doors, furniture, junk, monsters, items, cars, ...), lights, particles, audio emitters, decals, and so on. Physics are done by the Newton physics engine.

Thank you Rick for sharing your knowledge, much appreciated. I've never worked with shaders. I saw an interesting article about making a Total Annihilation game and the tricks to improve terrain quality by keeping additional texture info in the alpha channel. I admire your work, because you need to know not only Delphi but also C++ to be able to translate examples.

In my case I am generating terrain procedurally and thinking about some problems:

I am generating it from triangles, not quads. Will it complicate things for me in the future?

I have rivers; how do I put bridges over them? That makes the terrain multi-level; how do I implement pathfinding for it?

I have one big mesh terrain, how can I optimise it?

Those are general problems which I keep in mind; you gave me some tips. Thanks.

BTW, I am writing this at a Polish wedding after drinking half a liter of vodka, so sorry if I am not making too much sense :)

Bridges:

Just like trees or rocks, I would make bridges or buildings as "pre-fabs": objects that can be placed on top of your terrain. Just placing them is probably not a real issue, though. Getting the physics done, and getting your A.I. to understand that this bridge can be crossed, is more tricky.

When using a physics engine like Bullet, ODE, Newton or nVidia PhysX, it can check collisions for you, so you can walk or drive over that bridge. For your terrain, you'll have to add a custom collision detector that uses the same procedural math as your shaders so you can pinpoint the height at any given spot.

For A.I., it depends on the chosen techniques. Games often use a navigation-mesh (think about polygons that make up the floor) in combination with A* pathfinding. The mesh tells your A.I. where it can walk, so the mesh has to cross over the bridge as well.

Such a mesh can be made manually, by hand, or constructed automatically. But I'm not sure how to do that properly in combination with a procedural terrain that can be different each time...

Optimizing

1. Use shaders & VBOs, really. In case you're looping through thousands of vertices each cycle right now, I bet you can make it 100 times faster with shaders. The thing is, video cards (and computers in general) love to batch things. When looping and rendering triangle-by-triangle, you're interrupting all the time. CPU loops are a no-go.

Also make VBOs for all your objects.

2. Apply LOD on your terrain, via tessellation shaders. Or, if you pick the Engine22 approach, you can switch over to a lower-poly variant of your (terrain) mesh when it's being rendered in the background. Lower-poly meshes can be generated automatically in many 3D modelling packages nowadays.

3. Apply LOD on your objects (trees, plants, rocks, bridges, light-posts, structures, ...). Do not render them if they are too far away and/or small. And switch over to lower-poly versions after X meters. Engine22 can use up to 5 variants for each object, so you have a "very nearby", "nearby", "medium distance", "far away" and "very far away" version.

4. Sort / grouping

Switching meshes / textures / shaders / materials all the time is a killer. Better to sort. First render the terrain, then all "tree01" objects, then all "tree02", and so on. The leaves on trees can be a bit tricky regarding transparency, but that's another story. Don't use blending, just alpha-testing, and you'll be fine.

5. If you have lots of objects (which is typically true if you want to render a forest or something), use OpenGL instancing. What you're doing here is:

- sort (as shown in 4)

- put all matrices from that sorted group in an array (object[0].matrix, object[1].matrix, ...) and push that towards the shader you're about to use

- since every object in that sorted group shares the same mesh, bind that mesh's VBO once

- tell OpenGL to draw that VBO N times in a single instanced draw call (for example glDrawElementsInstanced); the shader picks the matrix for each copy via gl_InstanceID
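Points 3 and 4 can be sketched in a few lines of Python: pick one of 5 LOD variants based on distance, and sort the draw list so that identical shaders/textures/meshes end up next to each other. The distance thresholds and the dictionary-based draw items are made up for illustration; they are not Engine22's actual values or data structures.

```python
from bisect import bisect_right

# Hypothetical distances (meters) at which each variant stops being used:
# very nearby, nearby, medium distance, far away, very far away.
LOD_DISTANCES = [10.0, 30.0, 80.0, 200.0, 500.0]

def pick_lod(distance):
    """Return the LOD variant to draw (0 = most detailed .. 4), or None to skip the object."""
    level = bisect_right(LOD_DISTANCES, distance)
    return level if level < len(LOD_DISTANCES) else None

def sort_draw_list(items):
    """Group draw calls so that shader, texture and mesh switches happen as rarely as possible."""
    return sorted(items, key=lambda it: (it["shader"], it["texture"], it["mesh"]))

def count_switches(items, key):
    """How many times `key` changes between consecutive draw calls."""
    return sum(1 for a, b in zip(items, items[1:]) if a[key] != b[key])

scene = [
    {"shader": "foliage", "texture": "bark", "mesh": "tree01"},
    {"shader": "foliage", "texture": "bark", "mesh": "tree02"},
    {"shader": "foliage", "texture": "bark", "mesh": "tree01"},
    {"shader": "terrain", "texture": "grass", "mesh": "terrain"},
]
print(pick_lod(25.0), pick_lod(600.0))  # 1 None
print(count_switches(scene, "mesh"), "->", count_switches(sort_draw_list(scene), "mesh"))  # 3 -> 2
```

In a real forest the savings are much bigger than in this four-object toy scene, since hundreds of "tree01" calls collapse into one group (or one instanced draw).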

Had a wedding too here last Friday, though not with Polish vodka. Got almost killed by that stuff before (in Poland), no more vodka for me!

I would really advise having a look into GLSL shaders (in case you're using OpenGL). Yes, they require different thinking and some programming to get set up. But once you're a bit comfortable with them, you can do so much more. Mixing textures on a terrain, for example, is peanuts. In fact, one of the first things I learned with shaders was doing a multi-textured terrain, which was a lot easier than doing it the "old way", as explained here:

http://www.gameprogrammer.net/delphi3dArchive/terraintex.htm

Procedural Terrain:

Again I don't have a whole lot of experience with terrains or procedural methods in particular, but with modern techniques, I would try something like this:

- Make a large flat (thus not shaped yet!) quad grid, and store it in a VBO

- Render the VBO around the camera (moving along with the camera, but snapped to a grid).

- Use a tessellation shader to sub-divide nearby quads X times

- In the Vertex and/or Tessellation shader, manipulate the height per vertex. Basically, you'll transfer the procedural math to the shader. You can feed your shaders with noise/height or other info textures to help with the math.

- A fragment (pixel) shader blends between a set of textures.

You will notice that this is a LOT faster than having the CPU loop through arrays. That's exactly why GPUs & shaders took over from the CPU.

The learning curve to get into shaders is a bit steep, but the good news is that there are thousands of tutorials out there. Personally, I always liked the nVidia OpenGL and Direct3D SDKs; they come with a lot of examples with full code.

Or, if you like, you can give Engine22 a try when we're ready. It has all the classes and functions to deal with shaders, VBOs, and so on. But making a terrain would work a bit differently here, as you're supposed to split up the world into sectors (think about patches of 100x100 m² for example). Though it's not impossible to still apply procedural shaders on a flat quad grid, things like physics and A.I. routing require the terrain to have a fixed, pre-defined shape (thus as made in your 3D modeller program). But the nice thing about shaping your world that way is that you can involve bridges, caves, buildings and other complex shapes as well.

Hehe I know what you mean by vodka almost killing you. Back in the day I could drink a lot of this shit but now...I am more into beer or wine.

The thing about my terrain is that I am not making it from a grid of quads, and that is worrying me. I know that this is a common approach: you have a heightmap and generate terrain from it. The problem with this approach is that you have zero control over the generated terrain; it does not make any sense and there are no rivers. Such terrain is good for a flight simulator (remember the voxels in Comanche, that was something! :) ) but not for an RPG game, which is what I am trying to make.

My terrain looks like this: http://s11.postimg.org/61qw3gl9v/Terrain.png

Example handcrafted in Paint :)

I said it is worrying me because this is not a common approach and I do not know how to optimise this terrain, but I hope that it is possible.

Also, I do not know how to blend water texture with ground texture (sprayed in paint). You say that this needs to be made with shaders right? Would I have to blend those textures for each frame?

>> Water

I would render the water as a separate quad (or "river mesh"). That's how most games do it. Terrain & water are separate objects, with different shaders. Water uses very different techniques than terrain.

If you want waves (though that is not that common for a river), you can sub-divide your water-mesh, and let the vertex or tessellation shader calculate wave-heights. For rivers that have a certain flow, it's also common to draw a "flow-texture", which globally gives directions where the wave(texture) should roll to.

A long time ago I wrote some stuff about water FX by the way:

http://tower22.blogspot.nl/2011/05/ou-est-le-swimming-pool-1.html

>> Terrain

How do you make your terrain mesh? In a modeller program?

Tessellation shaders do not require a grid-shaped terrain; they can sub-divide practically anything. But if you don't have a heightMap or other (noise?) textures, sub-dividing your terrain doesn't bring you much anyway, since you don't have additional height information to make tiny bumps and such.

I would just generate the terrain (and bridges / other stuff) in a 3D modelling program like Blender/Lightwave/Max/Maya, and export it to an OBJ file or something. Since RPG games usually have huge worlds, you should split up your world into sections, or sectors as I like to call them. 100 x 100 meter patches, for example. In-game, you load & render only the sectors that surround you & are in sight.

For each terrain-sector file, you can also make lower-poly version(s). LightWave, for example, has plugins where you can tell it to reduce to, say, 25% of the original polycount. So a distant sector would draw its low-poly version instead, to avoid ending up with too many polygons (but also keep in mind that a modern video card can do a LOT, if you properly group/sort/batch/instance things).

This is more or less the Engine22 approach, by the way, which handles LOD management while loading distant sectors in the background. As for other optimizations, see the reply 2 posts up.
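A rough Python sketch of the "load only the sectors around you" idea, assuming square 100 x 100 m sectors on a regular grid over the horizontal X/Z plane (Y is up), indexed by (column, row). The radius-based neighbourhood is a simplification: it ignores the "in sight" part, which in practice would need visibility checks. The names are illustrative.

```python
import math

SECTOR_SIZE = 100.0  # meters; square sectors, as in the 100 x 100 m example

def sector_of(x, z):
    """(column, row) index of the sector that contains world position (x, z)."""
    return (math.floor(x / SECTOR_SIZE), math.floor(z / SECTOR_SIZE))

def sectors_to_load(cam_x, cam_z, radius=1):
    """The camera's own sector plus all neighbours within `radius` sectors (a square block)."""
    cx, cz = sector_of(cam_x, cam_z)
    return {(cx + dx, cz + dz)
            for dx in range(-radius, radius + 1)
            for dz in range(-radius, radius + 1)}

print(sector_of(250.0, -30.0))             # (2, -1)
print(len(sectors_to_load(250.0, -30.0)))  # 9
```

Each frame (or whenever the camera crosses a sector border) you compare this set with what's loaded: stream in the new ones, drop the ones that fell out, and use low-poly versions for the outer ring if you like.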

Sorry, I was a little unclear regarding rivers. That was just an example of two types of terrain that meet at some point. For example, grass and dirt, or grass and sand, or dirt and snow. Remember Heroes of Might and Magic? It had different kinds of terrain, but where they met they blended nicely. Like in this picture, where the road blends into the grass: http://postimg.org/image/rtjyajxbt/

I am generating terrain in my own application, since I want the game to be replayable: the generated terrain is different each time. I do it like this:

1. I generate polygons which are continents, rivers, lakes, mountains. They are flat, just outlines.

2. I tessellate them.

3. I give each vertex a height.

In the old days, you could just prepare a blended texture for each type of terrain, but I doubt this is possible with my mechanism. I need to invent something which will go through all my terrain "seams" and blend textures there...

Texture blending usually happens with the help of vertex-weights. Besides a position/normal/texture-coordinate, you can also store additional numbers in your vertex-data (which should be a VBO for the sake of performance).

Let's say we use 1 vector4 for each vertex for this. So we have 4 "weights". A fragment-shader can then sample 4 given textures, and mix them like this:

```glsl
outputColor.rgb =
    texture( grass, vertex.texcoord ).rgb * vertex.weight.x +
    texture( path,  vertex.texcoord ).rgb * vertex.weight.y +
    texture( sand,  vertex.texcoord ).rgb * vertex.weight.z +
    texture( rock,  vertex.texcoord ).rgb * vertex.weight.w;
```

The same mixing can be done with normalMaps. If 4 textures are not enough, you could add another vector for weights, giving 8 textures. Or (better for performance, but maybe hard since your terrain is made on the fly) split up the terrain into multiple chunks with different texture palettes.

The vertex weights can be painted by hand (but again your terrain is automagically generated), or you base it on procedural algorithms during the "build-stage" that happens when a level is generated.
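Here's a hedged Python sketch of both halves: a CPU version of the 4-weight blend from the shader above, and a made-up build-stage rule that derives (grass, path, sand, rock) weights from a vertex's height. The weights should add up to 1, otherwise the blended colour gets brighter or darker; the sketch normalizes them to be safe. The height thresholds and colours are invented for illustration.

```python
def blend(texels, weights):
    """Mix up to 4 RGB colours (0..1 floats) with per-vertex weights, like the shader above."""
    total = sum(weights)
    if total <= 0.0:
        raise ValueError("at least one weight must be positive")
    w = [x / total for x in weights]  # normalize so the weights add up to 1
    return tuple(sum(t[i] * wi for t, wi in zip(texels, w)) for i in range(3))

def weights_for_vertex(height, on_path=False):
    """Hypothetical build-stage rule returning (grass, path, sand, rock) weights."""
    if on_path:
        return (0.0, 1.0, 0.0, 0.0)
    sand = max(0.0, min(1.0, (2.0 - height) / 2.0))    # fades out between 0 and 2 m
    rock = max(0.0, min(1.0, (height - 30.0) / 10.0))  # fades in between 30 and 40 m
    grass = 1.0 - sand - rock                          # whatever is left
    return (grass, 0.0, sand, rock)

GRASS, PATH, SAND, ROCK = (0.2, 0.6, 0.1), (0.5, 0.4, 0.3), (0.9, 0.8, 0.5), (0.5, 0.5, 0.5)
print(blend([GRASS, PATH, SAND, ROCK], weights_for_vertex(1.0)))  # half grass, half sand
```

Because the weights are stored per vertex and interpolated across each triangle, the "seams" between terrain types fade smoothly without any hand-painted transition textures.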