This doesn't look too complicated, yet it's pretty hard to draw a volumetric beam if you don't have any polygons that catch light.

Anyway. What's up with volumetric stuff then? For those who don't know: even though we've had 3D games for two decades now, we still suck at rendering *everything* in true 3D. Solid walls or octopus monsters, no problem. Fine-particle-based things on the other hand... dust, clouds, farts, fog, mustard gas, smoke, you name it. The problem is that you can't really model those things, unless your artists are willing to make micro/nano sized particle clouds and your computer is willing to handle huge quantities of tiny “points” in a physically correct way. Nope, the level of detail is way too high, so we fall back on simple hacks to fake such phenomena.

For example, if you look at a lantern at night, you probably see a halo around the lamp, especially if it's foggy or rainy. God didn't place billboard sprites on street lanterns though. It’s just physics minding its own business. Light rays collide with (water) particles in the air, the water breaks (refracts) the light, and you see halos or even rainbows. If you paid attention in physics class, it's not all that hard to understand. But teaching your computer correct physics... There is simply not enough firepower to render at such a fine level of detail, store all that info, or treat light as rays in general. So instead, we just draw a transparent halo texture and put it around a light. Fixed.

As I'm getting a bit older, I've learned not to always pick the hardest and most ambitious path. If it works, it works. Clients usually don't care how brilliant your code is, and in the end most stuff you see is getting more and more fake anyway. Movie actors spend more time in front of a green screen than on an actual stage, soon my 5 year old daughter will Photoshop herself into an 18 year old supermodel on Facebook, McDonald's meat isn't really meat, and I learned the dinosaurs in Jurassic Park weren't actually real either. And game graphics are everything except a correct physics lesson.

Some effects can be simulated pretty easily. Others are more complicated. Most modern games have scenes where the sun casts beams through a forest roof, or where dust appears behind a window as light falls in. Heck, that probably already happened in N64 games. Of course they didn't actually render microscopic dust particles. The 3D artist just made a transparent box-shaped volume behind a window. That trick wouldn't work well for a forest though, as you would need to model dozens and dozens of leaf-shaped "pipes" in your scene. And if the sun moves, all those pipes would have to be adjusted as well.

Luckily we found yet another cheap trick to fix that. It wouldn't run on an N64, but a somewhat modern computer should handle it with ease. The idea is to render the sun and maybe a darkened version of the skybox into a (small) texture. The next step is to draw a screen-filling quad, where each pixel fires a ray towards the sun (its 2D screen coordinate). For each step towards the lightsource, accumulate the color from the texture made in the first step. Basically it's a reversed way of smearing out blur streaks from a bright spot on your 2D canvas. More details:

Volumetric light as post effect, nVidia GPU Gems 3
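To make the idea a bit more concrete, here is a minimal CPU sketch of that smearing step in plain Python. It is not the shader from the linked chapter, just my own toy version: the function name `light_shafts` and the parameters `num_samples`, `density`, `decay` and `weight` are assumptions for illustration, and the "texture" is simply a 2D list of brightness values.

```python
def light_shafts(occlusion, light_xy, num_samples=32, density=1.0,
                 decay=0.95, weight=0.1):
    """Smear brightness from `occlusion` along rays towards the light.

    occlusion: 2D list of brightness values (the small sun + darkened sky texture).
    light_xy:  (x, y) pixel position of the light on that texture.
    All parameter names are hypothetical, chosen for this sketch only.
    """
    height = len(occlusion)
    width = len(occlusion[0])
    lx, ly = light_xy
    result = [[0.0] * width for _ in range(height)]

    for y in range(height):
        for x in range(width):
            # Step vector from this pixel towards the light, split over the samples.
            dx = (lx - x) * density / num_samples
            dy = (ly - y) * density / num_samples
            sx, sy = float(x), float(y)
            illumination_decay = 1.0
            total = 0.0
            for _ in range(num_samples):
                sx += dx
                sy += dy
                # Clamp to the texture borders, like a clamped texture lookup.
                ix = min(max(int(round(sx)), 0), width - 1)
                iy = min(max(int(round(sy)), 0), height - 1)
                total += occlusion[iy][ix] * illumination_decay * weight
                # Each further sample contributes a bit less.
                illumination_decay *= decay
            result[y][x] = total
    return result


# Tiny usage example: one bright "sun" pixel in the middle of a 9x9 texture.
texture = [[0.0] * 9 for _ in range(9)]
texture[4][4] = 1.0
shafts = light_shafts(texture, (4, 4))
```

Note that if the input texture is completely black, the output is black as well, no matter how you tune the parameters. In a real implementation this loop would live in a fragment shader and the result would be blended additively over the scene.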

Works well for one or a few dominant lights in the background. Doesn't work too well if the lightsource isn't directly visible. Huh? If you walk through a corridor, you might see sunlight falling through the windows, but without seeing the actual sun itself. As soon as the lightsource gets occluded or when you turn your back to it, it won’t get rendered on the input texture. Thus there is nothing to blur / smear out, and thus no lightshafts either. For example, it wouldn’t work for the scene in the picture at the top of this article; the viewer can see the light beam, but not the light that casts it.

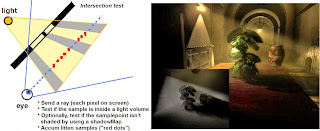

To fix that, yet another trick came along. Nothing new either, although games may still not use it on a wide scale because it's a beefy effect. The idea is that for each pixel on the screen, or at least for each pixel that overlaps a light volume, you send a ray forwards. This ray might intersect the light volume at some point. Wherever it does, you accumulate a color. To make the effect more impressive, you can use a shadowMap to test whether that particular point in space is in shadow. This is obviously the more advanced effect, but it takes a lot of sampling, and requires a shadowMap to become really cool.
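A rough sketch of that ray march for a single pixel, again in plain Python rather than shader code. Everything here is an assumption for illustration: the light volume is a simple sphere, the shadowMap lookup is replaced by a hypothetical callback `is_lit(point)`, and names like `march_light_volume` and `scatter` are made up for this example.

```python
import math


def march_light_volume(ray_origin, ray_dir, light_pos, light_radius, is_lit,
                       max_distance=20.0, num_steps=64, scatter=0.05):
    """Accumulate in-scattered light along one view ray.

    ray_dir is assumed to be unit length. is_lit(point) stands in for the
    shadowMap test and returns True when the point receives light.
    """
    step = max_distance / num_steps
    accumulated = 0.0
    for i in range(num_steps):
        # Sample in the middle of each step along the ray.
        t = (i + 0.5) * step
        point = tuple(o + d * t for o, d in zip(ray_origin, ray_dir))
        dist = math.sqrt(sum((p - l) ** 2 for p, l in zip(point, light_pos)))
        if dist > light_radius:
            continue  # sample is outside the light volume
        if not is_lit(point):
            continue  # sample is in shadow, so it adds nothing
        # Simple linear falloff towards the edge of the volume.
        falloff = 1.0 - dist / light_radius
        accumulated += scatter * falloff * step
    return accumulated


# A ray looking straight through a light volume 5 units ahead.
origin = (0.0, 0.0, 0.0)
forward = (0.0, 0.0, 1.0)
light = (0.0, 0.0, 5.0)
fully_lit = march_light_volume(origin, forward, light, 2.0, lambda p: True)
# Pretend an occluder shadows everything behind the light's center.
half_shadowed = march_light_volume(origin, forward, light, 2.0,
                                   lambda p: p[2] < 5.0)
```

The result depends directly on how many samples happen to land inside the lit part of the volume, so the same light can come out much brighter or dimmer from a different camera angle.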

A basic explanation. It seems more advanced, optimized versions (less sampling) have been made in the meantime by smart guys. I haven't read it yet myself, but:

Intel GTD Light Scattering

Well, problem solved, right? Hmmm. I thought so a few years ago when making these effects for the first time, but I always had problems with performance and flexibility. In my screenshots, such effects usually look quite nice. But in reality, when moving around in the scene, the effect could become barely visible or way too visible. Since it's hard to predict how many samples will accumulate light for a certain point of view, the results can vary quite a lot. After some shader-tweaking it would usually look better, but blow up again in a different scene one month later. Or the adjustable parameters were hard to understand and still didn't achieve the desired results.

On the bottom-left buffer it seems pretty cool. But in-game it looks as if a honey-bear took a dump in the chandelier. Balancing problem.

Maybe the real problem is that the techniques above are fakes that can only cover one particular effect. Whether to use them or not really depends on the situation. For example, the effect above works for making a color or dust particles INSIDE a lightbeam visible. But in my dusty T22 corridors, I basically need dust everywhere, thus also in areas that aren't directly lit. I would need to complement the effect described above with another one, for example by filling the whole area with dust sprites that slowly fly around.

The point is, each situation differs and asks for different techniques. Sometimes advanced shaders, sometimes cheap tricks that your grandpa invented already. Being ambitious (and younger), I tried to make a single multi-functional shader that would solve all my "volumetric needs", but that didn't work out. Way too much overhead, too little artistic control, and tweaking it was like Jenga. Insert a block to improve effect A, and it will screw up effect B as another block falls out on the other side. So I decided to disassemble it into multiple, simpler effects again. Let the artist decide what is needed for scenario X. It feels a bit unnatural though. Game engines try to solve more and more graphical issues with realtime, dynamic, uniform "super-shaders" rather than giving you a bunch of dirty tricks.

I wonder how modern engines like UDK or CryEngine deal with this. Does the artist just click a button to get instant awesomeness (whether that is a volumetric fog field, nice lighting & G.I., or a reflective flooded scene), or does it also involve a lot of parameter tweaking, masking ugly corners, and messing around with multiple techniques to see what works best? It's easy to get fooled by screenshots or carefully scripted movies. Like I said, I've become Goebbels when it comes to showing you the better looking parts of the game. And so have they.

Well, after the latest adjustments, I should be happy again with the volumetric effects for about 3 months. Then I'll probably get jealous again after seeing a picture or game that seems to do it in a better or smarter way. The graphics-programming lifecycle in a nutshell.
