In this case, the environment has to look freezing cold. Otherwise the water wouldn’t freeze, right? A few tricks you can use for that:

- Use a grayscale / bluish screen filter. Blue looks cold.

- Use bluish or gray-white fog.

- Put snow / ice on all surfaces, not just a few pieces of ice on the water.

- Make windy snow-dust particles (near air vents and such).

- Let it snow, let it snow, let it snow.

Still have to teach those little fuckers not to fall through the ceiling. Maybe a depth texture (rendered from the player’s point of view) could help...

Since the summer here was one big fiasco (Global Freezing), I’m already in a Christmas mood. So I decided to start with some snow. But as usual, building a solid basis for such effects required a whole lot more. What we needed was a particle generator (and an editor to define them).

Engine22 already had a few particle techniques, but they weren’t flexible enough for Michael Jackson moves, and certainly not fast enough to spray massive amounts of particles. And when it comes to particles, density does matter: usually 5.000 smaller dots make a better volumetric shape than 500 somewhat bigger dots. Time for a revision of the old, CPU-based particle code.

For starters, a particle system is nothing more than a “cloud” of (small) sprites that move around in a certain way. Simple examples are blood sprays or flying debris after bullet impacts. But particles are also useful for complex volumetric shapes such as fire, smoke, gas, clouds or a water fountain. The power of particles lies in how simple they are to draw, which allows rendering large numbers of them that together form a shape. A single particle looks like shit, but as with ants, killer bees or football hooligans, the group dynamics make it a strong whole.

A particle can be a simple dot, or a textured billboard sprite. The simpler, the faster, of course. But you can also apply lighting & shadow mapping to your particles to create cool effects such as light shafts. Or let them bend / blur the background to create a heat haze, for example. Same stuff as usual. More difficult is rendering them in insane quantities, and updating particle positions in a realistic way. How does smoke move through the air? Well, I can’t really tell you. But at least I can share some methods to render large numbers of particles.

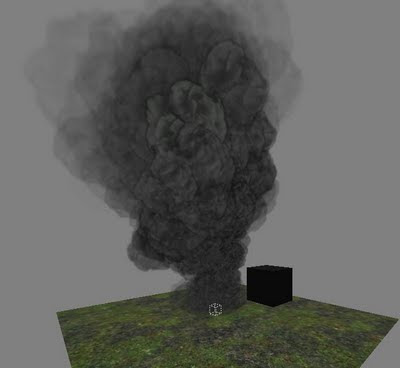

Smoke test - You’d better not try to model that in real 3D... And a single animated 2D sprite won’t do the trick either, anno 2011...

Transform-Feedback (Vertex Streaming)

The simple way of doing particles is creating a big array of particle structs. Let the CPU loop through the array, update each position with gravity or whatever forces, then render it as a sprite. Not bad, but your CPU will choke on really large numbers (tens of thousands). The GPU on the other hand… Wouldn’t it be nice to benefit from the GPU’s math skills and have all vertex data already loaded on the GPU, rather than passing thousands of coordinates? Think in magnitudes of hundreds of thousands of particles, rather than thousands. Well, in theory that is. Many layers of transparent quads filling the screen can still cause fill-rate issues.

Maybe you know how to pass a VBO with vertex data to the GPU, but how do you update the particle positions and store them back into a VBO without needing the CPU? Transform-Feedback is the magic OpenGL word. You don’t need that freaking CPU, nor clumsy textures that store data in pixels.

0. Create a VBO with vertex data (positions, maybe texcoords/colors, or force vectors in the case of particles).

1. Make a Vertex-Shader that updates the vertex data (positions, forces).

2. Store the Vertex-Shader output directly into a second VBO (= Transform Feedback).

3. Render your particles (or cloth, or skinned character, or anything else) with the second VBO as input. Optionally, use a geometry shader to turn single vertices into billboards.

4. Next cycle, use the second VBO as input in step 1 and store the results in the first VBO. Swap the roles every cycle.
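The ping-pong scheme from steps 1 to 4 can be mimicked on the CPU to make the buffer bookkeeping explicit. This is a minimal C++ sketch, not Engine22 code: the two `std::vector`s play the role of the two VBOs, and the hypothetical `runUpdateShader` function stands in for the update Vertex-Shader (here it only applies gravity).

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for one vertex in the particle VBO.
struct Particle {
    float pos[3];
    float vel[3];
};

// Stand-in for the update Vertex-Shader: reads one input vertex, returns the output vertex.
Particle runUpdateShader(const Particle& in, float dt) {
    Particle out = in;
    out.vel[1] -= 9.81f * dt;  // gravity along -Y
    for (int i = 0; i < 3; ++i) out.pos[i] += out.vel[i] * dt;
    return out;
}

// Two buffers: one is read, the other is written; their roles swap every cycle.
struct PingPong {
    std::array<std::vector<Particle>, 2> vbo;
    int src = 0;  // index of the buffer holding the current state

    explicit PingPong(const std::vector<Particle>& initial) : vbo{{initial, initial}} {}

    void step(float dt) {
        const int dst = 1 - src;
        assert(vbo[src].size() == vbo[dst].size());
        for (std::size_t i = 0; i < vbo[src].size(); ++i)
            vbo[dst][i] = runUpdateShader(vbo[src][i], dt);  // the "transform feedback" write
        src = dst;  // next cycle reads what was just written
    }

    const std::vector<Particle>& current() const { return vbo[src]; }
};
```

On the GPU, the loop body disappears into the shader and the `src = dst` swap becomes a change of which VBO is bound as input and which as feedback target.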

Dance, dammit

So, for particles, you can create a VBO that holds the maximum number of particles: if you can spawn up to 10.000 particles, create a VBO with 10.000 points. Since geometry shaders can produce sprites out of a single vertex position, you don’t need to store all 4 corner coordinates in that VBO. Besides positions, you probably need to store some more info per point. For example:

```pascal
TVBO_Particle = packed record
    Position     : TVector4f; // XYZ, W=scale
    Force        : TVector4f; // Current velocity / force vector, W=lifetime
    Color        : TVector3f; // Color modulator
    LookupCoords : TVector4f; // Pick a sub-texture from a bigger atlas texture
    Randoms      : TVector4f; // Some more random numbers to vary data
end;
```
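When the record is pumped into a VBO, the attribute offsets and the stride used for the vertex attributes have to match the record exactly. A C++ mirror of `TVBO_Particle` makes that layout checkable at compile time. This sketch assumes `TVector4f` / `TVector3f` are plain arrays of 4 and 3 single-precision floats; `Vec4`, `Vec3`, `ParticleVertex` and `vboBytes` are names made up here.

```cpp
#include <cassert>
#include <cstddef>

// Assumed equivalents of TVector4f / TVector3f: tightly packed single-precision floats.
struct Vec4 { float x, y, z, w; };
struct Vec3 { float x, y, z; };

// C++ mirror of the packed TVBO_Particle record. All members are floats, so mainstream
// compilers insert no padding; the static_asserts below verify that.
struct ParticleVertex {
    Vec4 position;      // XYZ, W = scale
    Vec4 force;         // current velocity / force vector, W = lifetime
    Vec3 color;         // color modulator
    Vec4 lookupCoords;  // sub-texture rectangle in the atlas
    Vec4 randoms;       // extra random numbers
};

static_assert(sizeof(ParticleVertex) == 76, "stride must match the packed record");
static_assert(offsetof(ParticleVertex, color) == 32, "color follows two Vec4s");
static_assert(offsetof(ParticleVertex, lookupCoords) == 44, "no padding after the Vec3");

// Bytes needed for one of the two particle VBOs.
std::size_t vboBytes(std::size_t maxParticles) {
    assert(maxParticles > 0);
    return maxParticles * sizeof(ParticleVertex);
}
```

With 76 bytes per particle, 10.000 particles take 760.000 bytes per VBO; storing 4 corners per particle would quadruple that.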

When creating the VBO, you only have to fill in the static data: properties that won’t change, such as the color, size, lookup coordinates and random numbers. The dynamic properties such as position, lifetime and force will be updated by the Vertex-Shader that processes the VBO each cycle. A very simple approach could be:

```cg
OUT.Force.xyz    = IN.Force.xyz + Gravity.xyz * cycleDeltaTime;      // Accelerate
OUT.Force.a      = IN.Force.a - cycleDeltaTime;                      // Decrease lifetime
OUT.Position.xyz = IN.Position.xyz + OUT.Force.xyz * cycleDeltaTime; // Update position
```
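The same update can be written as plain C++ to check the arithmetic on the CPU. This sketch assumes `Force.xyz` holds a velocity in units per second and `Force.a` the remaining lifetime in seconds; `Float4`, `ParticleState` and `updateParticle` are hypothetical names. Both the velocity change and the position change are scaled by the time step, so the motion does not depend on the update rate.

```cpp
#include <cassert>

struct Float4 { float x, y, z, w; };

// Dynamic part of a particle, as written back by the update shader.
struct ParticleState {
    Float4 position;  // xyz = position, w = scale (left untouched here)
    Float4 force;     // xyz = velocity, w = remaining lifetime in seconds
};

// One semi-implicit Euler step: update the velocity first, then move with the new velocity.
ParticleState updateParticle(const ParticleState& in, const Float4& gravity, float dt) {
    assert(dt >= 0.0f);
    ParticleState out = in;
    out.force.x = in.force.x + gravity.x * dt;  // accelerate
    out.force.y = in.force.y + gravity.y * dt;
    out.force.z = in.force.z + gravity.z * dt;
    out.force.w = in.force.w - dt;              // decrease lifetime
    out.position.x = in.position.x + out.force.x * dt;
    out.position.y = in.position.y + out.force.y * dt;
    out.position.z = in.position.z + out.force.z * dt;
    return out;
}
```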

Go Recycle

Right, this will make your vertices fall down. At some point they hit the ground, or they should simply vanish after X seconds. Then what? You can’t create new vertices in a VBO… But you can recycle them, of course. Notice I used “Force.a” as a lifetime value:

```cg
// floorPos.y = ground height (don't name it "floor", that is a built-in function)
if ( ( OUT.Force.a < 0.f ) || ( OUT.Position.y < floorPos.y ) )
{
    // Particle ran out of time, or hit the ground
    // Respawn it (optionally with a delay)
    OUT.Force.a      = maxParticleLifeTime * randomFactor;
    OUT.Force.xyz    = initialParticleForce;
    OUT.Position.xyz = initialParticlePosition.xyz;
}
```
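A CPU-side sketch of the recycle test can be handy for checking the logic before moving it into the shader. The post leaves the source of `randomFactor` open; this sketch assumes a cheap integer hash of the particle index instead (a lookup texture is another option). `SimpleParticle`, `hash01`, `recycle`, `floorY`, `maxLifeTime`, `spawnPos` and `spawnVel` are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>

struct SimpleParticle { float pos[3]; float vel[3]; float life; };

// Cheap integer hash mapped to [0, 1): a stand-in for a per-particle random factor.
float hash01(std::uint32_t n) {
    n = (n << 13U) ^ n;
    n = n * (n * n * 15731U + 789221U) + 1376312589U;  // unsigned arithmetic wraps around
    return static_cast<float>(n & 0x00FFFFFFU) / 16777216.0f;
}

// Respawns the particle if it ran out of time or fell below the floor.
// Returns true when the particle was recycled.
bool recycle(SimpleParticle& p, std::uint32_t particleId, float floorY, float maxLifeTime,
             const float spawnPos[3], const float spawnVel[3]) {
    assert(maxLifeTime > 0.0f);
    if (p.life >= 0.0f && p.pos[1] >= floorY) return false;  // still alive, keep going
    p.life = maxLifeTime * hash01(particleId);                // vary lifetimes per particle
    for (int i = 0; i < 3; ++i) {
        p.pos[i] = spawnPos[i];
        p.vel[i] = spawnVel[i];
    }
    return true;
}
```

Because the respawned lifetime differs per particle, the recycled particles drift apart in time instead of all dying and respawning in the same cycle.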

It will start over again next cycle. You probably want to vary the initial properties a bit, to prevent all particles from starting at exactly the same point with the same forces. Use some shader math, input parameters, or, like me, a lookup texture. Depending on the overall time, you can pick values from a texture that tells where to place the particle, what force to use, what color to use, and so on.

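```cg
float2 tx;
tx.x = elapsedTime / totalGeneratorLifeTime; // 0..1 over the generator's lifetime
tx.y = IN.Randoms.x;                         // assumption: a per-particle random picks the row
OUT.Position.xyz = generatorCenter.xyz + tex2D( dataTexture, tx ).xyz;
```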
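What the texture lookup does can be emulated on the CPU: the normalized generator time selects a position along a row of texels, and linear filtering blends the two nearest ones. This sketch assumes a single row of RGB texels, clamp-to-edge addressing, and texel centers at (i + 0.5) / n as with OpenGL's `GL_LINEAR` filtering; `Texel`, `clampf` and `sampleRow` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

struct Texel { float r, g, b; };

float clampf(float v, float lo, float hi) { return std::max(lo, std::min(v, hi)); }

// Linear-filtered lookup in one texture row with clamp-to-edge; u in [0, 1] (like tx.x).
Texel sampleRow(const std::vector<Texel>& row, float u) {
    assert(!row.empty());
    const int n = static_cast<int>(row.size());
    // Texel centers sit at (i + 0.5) / n, so shift by half a texel before splitting.
    float x = clampf(u, 0.0f, 1.0f) * static_cast<float>(n) - 0.5f;
    x = clampf(x, 0.0f, static_cast<float>(n - 1));
    const int i0 = static_cast<int>(std::floor(x));
    const int i1 = std::min(i0 + 1, n - 1);
    const float t = x - static_cast<float>(i0);  // blend weight between the two texels
    const Texel& a = row[i0];
    const Texel& b = row[i1];
    return {a.r + (b.r - a.r) * t, a.g + (b.g - a.g) * t, a.b + (b.b - a.b) * t};
}
```

Adding the generator center to the sampled value then gives the spawn position; the same lookup works for colors or fade factors.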

You can also use such textures to morph the color over time, or to fade particles out after a while. Whatever you like, Mac. In case of complex movement, such as dancing plutonium molecules or a tornado, you could even encode an entire movement pattern in a 2D or 3D lookup texture. Use your creativity!

I still lack the textures and finished shaders to show some cooler effects, but you can also use particles for tornados, rain, lava, flies circling around a turd, slime dripping from leaking pipes, electricity sparks, water splashes, fire, weather effects, plasma generators, exhaust pipes, and so on.

Make it quads, please

For simplicity’s sake, and to reduce the VBO size by 75%, we only store 1 vertex struct per particle. That means you can’t just render the damn thing as a quad. That’s where geometry shaders (finally) become useful. Given the camera matrix, particle position and particle size, you can let the geometry shader build billboards that always face the camera. Here is some code (a Cg geometry program):

```cg
// Input: one point; output: a 4-vertex triangle strip
POINT TRIANGLE_OUT
void main( AttribArray<float4> iPos : POSITION,
           uniform float3 camPos )   // camera position
{
    // Make quad-sprites from points
    float4 p1, p2, p3, p4;
    float3 particleCenterPos = iPos[0].xyz;
    float  size = iPos[0].w;         // Stored size here

    // Camera-facing basis (breaks down when looking straight up/down at the particle)
    float3 vAt    = normalize( particleCenterPos.xyz - camPos.xyz );
    float3 vRight = normalize( cross( float3( 0.0, 1.0, 0.0 ), vAt ) );
    float3 vUp    = normalize( cross( vAt, vRight ) );

    float3 dir1 = (-size * vRight.xyz) + ( +size * vUp.xyz );
    float3 dir2 = (+size * vRight.xyz) + ( +size * vUp.xyz );
    float3 dir3 = (-size * vRight.xyz) + ( -size * vUp.xyz );
    float3 dir4 = (+size * vRight.xyz) + ( -size * vUp.xyz );

    p1 = mul( glstate.matrix.mvp, float4( particleCenterPos.xyz + dir1, 1 ) );
    p2 = mul( glstate.matrix.mvp, float4( particleCenterPos.xyz + dir2, 1 ) );
    p3 = mul( glstate.matrix.mvp, float4( particleCenterPos.xyz + dir3, 1 ) );
    p4 = mul( glstate.matrix.mvp, float4( particleCenterPos.xyz + dir4, 1 ) );

    // Don't forget the texcoords
    const float2 tx1 = { 1, 0 };
    const float2 tx2 = { 0, 0 };
    const float2 tx3 = { 1, 1 };
    const float2 tx4 = { 0, 1 };

    emitVertex( p1 : POSITION, tx1 : TEXCOORD0 );
    emitVertex( p2 : POSITION, tx2 : TEXCOORD0 );
    emitVertex( p3 : POSITION, tx3 : TEXCOORD0 );
    emitVertex( p4 : POSITION, tx4 : TEXCOORD0 );
    restartStrip();
}
```
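The corner math of the geometry shader can be checked on the CPU. This C++ sketch builds the same camera-facing basis (world up = +Y) and returns the four world-space corners in strip order p1..p4; `V3` and `billboardCorners` are hypothetical names. When the camera looks straight up or down at a particle, the cross product with the up vector becomes zero; this sketch falls back to world X in that case, which is an addition not present in the shader.

```cpp
#include <array>
#include <cassert>
#include <cmath>

struct V3 { float x, y, z; };

V3 operator+(V3 a, V3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
V3 operator-(V3 a, V3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
V3 operator*(float s, V3 v) { return {s * v.x, s * v.y, s * v.z}; }
float dot(V3 a, V3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
V3 cross(V3 a, V3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
V3 normalize(V3 v) { return (1.0f / std::sqrt(dot(v, v))) * v; }

// World-space corners of a camera-facing quad, in the strip order p1, p2, p3, p4.
std::array<V3, 4> billboardCorners(V3 center, float size, V3 camPos) {
    const V3 toParticle = center - camPos;
    assert(dot(toParticle, toParticle) > 0.0f);  // camera must not sit on the particle
    const V3 vAt = normalize(toParticle);
    V3 side = cross(V3{0.0f, 1.0f, 0.0f}, vAt);
    if (dot(side, side) < 1e-8f) side = V3{1.0f, 0.0f, 0.0f};  // looking straight up/down
    const V3 vRight = normalize(side);
    const V3 vUp = normalize(cross(vAt, vRight));
    return {{center + (-size) * vRight + size * vUp,
             center + size * vRight + size * vUp,
             center + (-size) * vRight + (-size) * vUp,
             center + size * vRight + (-size) * vUp}};
}
```

Every corner ends up size * sqrt(2) away from the center, in a plane perpendicular to the viewing direction, which is what makes the sprite face the camera.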

Transformers!

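Nice and all, but how do you use Transform-Feedback? Here are some directions, using NVIDIA’s NV_transform_feedback extension. Not sure if this also works on ATI/AMD cards, though… The same functionality also exists as EXT_transform_feedback and, since OpenGL 3.0, as core functions (glBeginTransformFeedback and friends), which should be the safer bet across vendors.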

```pascal
// Specify the target for transform feedback.
// Toggle between 2 VBOs: dst (0 or 1) is the buffer written this cycle, the other one is read
glBindBufferOffsetNV( GL_TRANSFORM_FEEDBACK_BUFFER_NV, 0, particleVBO[ dst ], 0 );
glBeginTransformFeedbackNV( GL_POINTS );
glEnable( GL_RASTERIZER_DISCARD_NV ); // disable rasterization ( = no pixel output to textures/screen)
// Apply the update shader & draw the other VBO (particleVBO[ 1 - dst ]) as points
render_ParticleVBO;
glDisable( GL_RASTERIZER_DISCARD_NV ); // back to normal mode
glEndTransformFeedbackNV();
```

As for the VBO creation, here is one way:

```pascal
glGenBuffersARB( 2, @particleVBO[0] ); // Make 2 buffers
for j := 0 to 1 do begin
    glBindBufferARB( GL_ARRAY_BUFFER_ARB, particleVBO[j] ); // Set target
    // Pump the array of structs into the VBO.
    // DYNAMIC_COPY hint: the GPU rewrites this buffer every cycle and reads it back itself
    glBufferDataARB( GL_ARRAY_BUFFER_ARB, self.props.maxParticles * sizeof(TVBO_Particle),
                     @part[0], GL_DYNAMIC_COPY_ARB );
    // Don't forget: specify which attributes to store
    glTransformFeedbackAttribsNV( 5, @attribs[0], GL_INTERLEAVED_ATTRIBS_NV );
end; // for j
glBindBufferARB( GL_ARRAY_BUFFER_ARB, 0 ); // unbind
```

Final notes

VBOs & Transform-Feedback allow you to render lots more particles. That still doesn’t fix all problems, though. First of all, performance will still drop rapidly when looking into a dense cloud of particles where the quads overlap many times (meaning each pixel gets overdrawn many times).

Then there is depth sorting. No matter how many particles you use, if your front particles mask the ones behind them, it still doesn’t pay off. With additive or multiply blending this is not a problem, though you risk extremely bright/dark results as soon as particles overlap on the screen. With normal transparency, you still need to sort things out. I believe there are methods to sort on the GPU as well, but I haven’t really looked at them yet. Another way might be to use multiple particle generators, sort them on the CPU, then render them all to get a combined result.
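Sorting whole generators on the CPU boils down to ordering them back to front by their distance to the camera before drawing them with normal alpha blending. This sketch assumes each generator is represented by a hypothetical `Generator` struct with a center point; comparing squared distances avoids a square root per generator. Particles inside one generator remain unsorted, so this only fixes the order between generators.

```cpp
#include <algorithm>
#include <cassert>
#include <string>
#include <vector>

struct Generator {
    std::string name;
    float center[3];
};

float distSq(const float a[3], const float b[3]) {
    const float dx = a[0] - b[0], dy = a[1] - b[1], dz = a[2] - b[2];
    return dx * dx + dy * dy + dz * dz;
}

// Orders generators from farthest to nearest: the draw order for normal ("over") blending.
void sortBackToFront(std::vector<Generator>& gens, const float camPos[3]) {
    assert(camPos != nullptr);
    std::sort(gens.begin(), gens.end(), [camPos](const Generator& a, const Generator& b) {
        return distSq(a.center, camPos) > distSq(b.center, camPos);
    });
}
```

With additive blending the order does not change the result, so the sort can be skipped for those generators.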

Finally, I’m not so sure this technique is also advisable for small numbers of particles. For example, when shooting at a wall, you may only need a handful of debris particles flying around. Setting up a VBO takes some extra time, so either use the good old CPU approach, or use a fixed set of premade particle generators.