Writer, Brian

Our Hank Moody from California. He has many years of experience on several projects, studied Anthropology (very useful for background research), had a career as a musician, and is currently writing a novel. Find out more at this blog:

brianlinvillewriter

Sound & Music, Zack

DJ Zack found some free hours to put into this project. From threatening music to flushing-toilet sound effects. He already did the audio for some (horror) movies before!

Soundcloud

3D (Character) modeler, Julio

His surname and Spanish background make him sound like a hot singer, but the man is actually stuffed with horrific rotten ideas + the skills to work them out. Mothers, watch your daughters when they come home with a Spaniard!

Now that there is a small team, we can focus on the next targets… Such as fishing, paintball, playing board games, and other cozy group events. Oh, and maybe we’ll make a second demo movie as well…

The couple of votes on the Poll show that there is interest in seeing some more technical details. Hmmm… not completely surprising with the Gamedev.com background. Now I won’t turn this blog into something like Humus3D or Nehe. No time, and there are far better resources on the internet for learning the theory or implementations. However, I can of course write more details about the upcoming techniques that are implemented in the engine.

Let's start with some adjustments to the Rendering Pipeline. As you may have read before, the engine currently uses Deferred Rendering. I’ll keep it that way, but with some tricks borrowed from another variant: “Inferred Rendering”. In case you already know what Deferred Rendering is, you can skip this post. Next week I’ll be writing about Inferred Rendering. Otherwise, here’s another quick course. I’ll try to write it in such a way that even a non-programmer may understand some bits. Ready? Set? Go.

==========================================================

Good old Forward Rendering:

Since Doom we all know that lighting the environment is an important, if not the most important, way to enhance realism. Raytracers try to simulate this in an accurate way by firing a whole lot of photons through the scene to see which ones bounce into our eyes. Too bad this still isn’t fast enough for really practical usage (although it’s coming closer and closer), so lighting in a game works pretty differently from reality. Though with shaders, we can come pretty far. In short:

For all entities that need to be rendered:

- Apply shader that belongs to this entity

- Update shader parameters (textures, material settings, light positions…)

- Draw entity geometry (a polygon, a box, a cathedral, whatever) with that shader.

The magic happens on the videocard's GPU, inside that shader program. Basically a shader computes where to place the geometry vertices, and how to color its pixels. When doing traditional lighting, a basic shader generally looks like this:

lightVector = normalize(lightPosition.xyz - pixelPosition.xyz );

diffuseLight = saturate( dotProduct( pixelNormal, lightVector ) );

Output.pixelColor.rgb = texture.rgb * diffuseLight * lightDiffuseColor.rgb;
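For the non-shader people, here is the same diffuse formula as a minimal CPU-side sketch in Python. The function names (`normalize`, `saturate`, `diffuse_pixel`) and the tuple-based vectors are assumptions made for illustration, not engine code.

```python
import math

def normalize(v):
    """Scale a 3D vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def saturate(x):
    """Clamp a value to the 0..1 range, like the shader intrinsic."""
    return max(0.0, min(1.0, x))

def diffuse_pixel(texture_rgb, pixel_pos, pixel_normal, light_pos, light_rgb):
    """Lambert diffuse: texture * max(N.L, 0) * light color."""
    light_vector = normalize(tuple(l - p for l, p in zip(light_pos, pixel_pos)))
    n_dot_l = saturate(sum(n * l for n, l in zip(pixel_normal, light_vector)))
    return tuple(t * n_dot_l * c for t, c in zip(texture_rgb, light_rgb))

# A floor pixel facing up, with an orange light hovering right above it.
lit = diffuse_pixel((0.5, 0.5, 0.5), (0, 0, 0), (0, 1, 0), (0, 2, 0), (1.0, 0.5, 0.0))
```

A light below the floor gives a negative dot product, which `saturate` clamps to zero, so the pixel stays black.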

There are plenty of other tricks you can add to that shader, such as shadows, attenuation, ambient, or specular light. Anyway, as you can see you’ll need to know information about the light such as its position, color and possibly the falloff distance. So… that means you’ll need to know which lights affect the entity before rendering it… In complex scenes with dozens of lights, you’ll need to assign a list of lights to each surface / entity / wall or whatever you are trying to render. Then the final result is computed as follows:

pixelColor = ( light1 + light2 + ... + lightN ) * pixelColor + … other tricks

- Enable additive blending to sum up the light results

- Apply lighting shader

- For each entity

    Pass entity parameters to shader such as texture

    For each light that affects entity

        Pass light parameters to shader (position, range, color, shadowMap, …)

        Render entity geometry
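The loop above can be sketched on the CPU like this. It is a toy model under loud assumptions: entities are reduced to a single albedo scalar, lights to a single intensity, and the "framebuffer" is a dictionary, all hypothetical names. The point is only to show the additive summing and the number of draw calls.

```python
def forward_render(entities, lights_per_entity):
    """Multi-pass forward lighting: one draw call per (entity, light) pair."""
    framebuffer = {}
    draw_calls = 0
    for name, albedo in entities.items():
        framebuffer[name] = 0.0
        for light_intensity in lights_per_entity.get(name, []):
            # Additive blending: each pass adds its light result to the pixel.
            framebuffer[name] += albedo * light_intensity
            draw_calls += 1
    return framebuffer, draw_calls

entities = {"wall": 0.5, "crate": 1.0}
lights_per_entity = {"wall": [1.0, 0.5, 0.25], "crate": [0.5]}
framebuffer, draw_calls = forward_render(entities, lights_per_entity)
```

Note that the wall gets drawn three times here, once for each light that touches it.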

This can be called “Forward Rendering”. It has been used countless times, but there are some serious drawbacks:

- Sorting out affected geometry

- Having to render the geometry multiple times

- Performance loss when overdrawing the same pixels

First of all, sorting out which light(s) affect, let’s say, a wall can be tricky. Especially when the lights move around or can be switched on and off. Still, it is a necessity, because rendering that wall with ALL lights enabled would be a huge waste of energy and would probably kill the performance straight away as soon as you have 10+ lights, while surfaces are usually (directly) affected by only a few lights.

In the past I started with Forward Rendering. Each light would sort out its affected geometry by collecting the objects and map-geometry inside a certain sphere. With the help of an octree this could be done fairly fast. After that I would render the contents per light.
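A minimal sketch of that "collect everything inside the light's sphere" step. It assumes each object has an axis-aligned bounding box and simply loops over all of them; an octree would only speed up the same test by skipping whole branches. All names are hypothetical.

```python
def sphere_intersects_aabb(center, radius, box_min, box_max):
    """True if the sphere touches the axis-aligned box."""
    dist_sq = 0.0
    for c, lo, hi in zip(center, box_min, box_max):
        nearest = max(lo, min(c, hi))      # closest point of the box on this axis
        dist_sq += (c - nearest) ** 2
    return dist_sq <= radius * radius

def collect_affected(light_pos, light_range, objects):
    """Brute-force list of object names whose box lies inside the light's range."""
    return [name for name, (box_min, box_max) in objects.items()
            if sphere_intersects_aabb(light_pos, light_range, box_min, box_max)]

objects = {
    "crate": ((0, 0, 0), (1, 1, 1)),
    "far_wall": ((10, 0, 0), (11, 3, 3)),
}
affected = collect_affected((2, 0.5, 0.5), 1.5, objects)   # only the crate is in range
```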

Another drawback is that we have to render the entities multiple times. If there are 6 lights shining on that wall, we’ll have to render it six times as well to sum up all the individual light results… Wait… shaders can do looping these days, right? True, you can program a shader that does multiple lights in a single pass by walking through an array. BUT… you are still somewhat limited due to the maximum count of registers, texture units, and so on. Not really a problem unless you have a huge amount of lights though. What really kills the vibe is the fact that each entity, wall, or triangle could have a different set of lights affecting it. You could even render per triangle, but that will make things even worse. Whatever you do, always try to batch it. Splitting up the geometry into triangles is a bad, bad idea.

Third problem. Computers are stupid, so if there is a wall behind another one, the GPU will actually draw both walls. The one in the back will be (partially) overwritten by pixels from the wall in front. Fine, but all those nasty light calculations for the back-wall were a waste of time. Bob Ross paints in layers, but try to prevent that when doing a 3D game.
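To put a number on that waste, here is a tiny sketch that follows a single screen pixel. It assumes a plain depth test where each fragment that passes gets fully lit, and counts how many times the expensive lighting code runs. The function name and the depth values are made up for illustration.

```python
def forward_shade_count(fragment_depths):
    """Fragments arrive in draw order; each one passing the depth test gets lit."""
    nearest = float("inf")
    shaded = 0
    for depth in fragment_depths:
        if depth < nearest:      # depth test passes, so the lighting shader runs
            nearest = depth
            shaded += 1
    return shaded

back_to_front = forward_shade_count([10.0, 5.0, 2.0])   # three walls, all three lit
front_to_back = forward_shade_count([2.0, 5.0, 10.0])   # only the front wall is lit
```

Only the nearest wall ends up on screen in both cases, but in the first draw order the lighting was computed three times for it.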

There is a fix for everything, and that’s how Deferred Rendering became popular over the last, let’s say, 5 years. The 3 problems mentioned are pretty much fixed with this technique. Well, tell us grandpa!

Deferred Rendering / Lighting:

The Deferred pipeline is somewhat different from the good old Forward one. Before doing lighting, we first fill a set of buffers with information for each pixel that appears on the screen. Later on, we render the lights as simple (invisible) volumes such as spheres, cones or screen filling quads. These primitive shapes then look at those “info-buffers” to perform lighting.

Step 1, filling the buffers

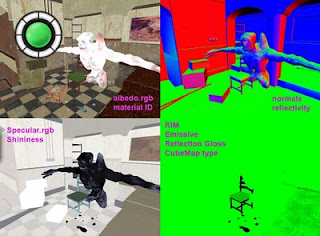

As for filling those “info-buffers”… With “Render Targets” / FBOs, you can draw in the background to a texture-buffer instead of the screen. In fact, you can render onto multiple targets at the same time. Current hardware can render to 4 or even 8 textures without having to render the geometry 4 times as well. Since textures usually can hold 4 components per pixel (red, green, blue, alpha) you could write out 4x4 = 16 info scalars. It’s up to you how you use them, but my engine does this:

There are actually more sets of buffers that hold motion-vectors, DoF / SSAO info, fog settings, and, very important, depth.

How to render to multiple targets?

- Enable drawing on a render target / FBO

- Enable MRT (Multiple Render Targets). The amount of buffers depends on your hardware.

- Attach 2,3 or more textures to the FBO to write on.

- Just render the geometry as usual, only once

- Inside the fragment shader, you can define multiple output colors. In Cg for example:

out float4 result_Albedo : COLOR0,

out float4 result_Normal : COLOR1,
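What the MRT pass boils down to can be sketched on the CPU as follows. This is a toy model with an assumed layout (one albedo buffer, one normal buffer, one depth buffer) and is not the engine's actual buffer setup; `fill_gbuffer` and the fragment tuples are hypothetical.

```python
def fill_gbuffer(width, height, fragments):
    """Write each visible fragment to all buffers in one go (geometry drawn once)."""
    depth = [[float("inf")] * width for _ in range(height)]
    albedo = [[(0.0, 0.0, 0.0)] * width for _ in range(height)]
    normal = [[(0.0, 0.0, 0.0)] * width for _ in range(height)]
    for x, y, z, frag_albedo, frag_normal in fragments:
        if z < depth[y][x]:               # keep only the nearest surface per pixel
            depth[y][x] = z
            albedo[y][x] = frag_albedo    # plays the role of COLOR0
            normal[y][x] = frag_normal    # plays the role of COLOR1
    return depth, albedo, normal

# Three surfaces overlap pixel (1, 0); only the one at depth 2.0 survives.
fragments = [
    (1, 0, 5.0, (1, 0, 0), (0, 0, 1)),
    (1, 0, 2.0, (0, 1, 0), (0, 1, 0)),
    (1, 0, 9.0, (0, 0, 1), (1, 0, 0)),
]
depth, albedo, normal = fill_gbuffer(2, 1, fragments)
```

No lighting is computed here at all; the buffers only remember what is visible at each pixel.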

Sorry for this outdated shot again, but since I can't run the engine at this moment because of Pipeline changes, I wasn't able to make new shots of course. By the way, the buffer contents have changed since my last post about Deferred Rendering, as I’m adding support for new techniques. Anyhow, here is an idea of what happens in the background.

Step 2, Rendering lights

That was step 1. Notice that you can use these buffers not only for (direct) lighting. Especially depth info and normals can be used for many other (post) effects. But we keep it to lighting for now. Instead of sorting out who did what and where, we purely focus on the lights themselves. In fact, we don’t render any geometry at all! That’s right, even with 5 billion lights you only have to render the geometry once in the first step. Not entirely true if you want translucent surfaces later on as well, but forget about that for now…

For each light, render its volume as a primitive shape. For example, if you have a pointlight with a range of 4 meters on coordinates {x3, y70, z-4}, then render a simple sphere with a radius of 4 meters on that spot. Possibly slightly bigger to prevent artifacts at the edges.

* Pointlights --> spheres

* Spotlights --> cones

* Directional lights --> cubes

* Huge lights(sun) --> Screen quad
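The list above could be captured in a small lookup like the sketch below. The dictionary keys, the function name and the `padding` factor (the "slightly bigger" margin) are assumptions for illustration; pick whatever margin hides the edge artifacts in your own scene.

```python
# Hypothetical mapping from light type to the proxy shape drawn in the light pass.
PROXY_SHAPES = {
    "point": "sphere",
    "spot": "cone",
    "directional": "cube",
    "sun": "screen_quad",
}

def light_proxy(light_type, light_range, padding=1.05):
    """Return (shape, size) for a light; 'padding' is an assumed safety margin."""
    shape = PROXY_SHAPES[light_type]
    if shape == "screen_quad":
        return shape, None               # covers the whole screen, no size needed
    return shape, light_range * padding

shape, radius = light_proxy("point", 4.0)   # a sphere a bit larger than 4 meters
```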

Aside from the sun, you only render pixels at the places where the light can affect the geometry. Everything projected behind those shapes *could* be lit. In case you have rendered the geometry into the depth buffer as well, you can tweak the depth-test so that the volumes only render at the places where they intersect the geometry.

Now you don’t actually see a sphere or cone in your scene. What these shapes do is highlight the background. Since we have these (4) buffers with info, we can grab those pixels inside the shader with some projective texture mapping. Then from that point, lighting will be the same as in a Forward Renderer. You can also apply shadowMaps, or whatever.

< vertex shader >

Out.Pos = mul( modelViewProjectionMatrix, in.vertexPosition );

// Projection texture coordinates

projTexcoords.xyz = ( Out.Pos.xyz + Out.Pos.www)*0.5;

projTexcoords.w = Out.Pos.w;

< fragment shader >

backgroundAlbedo = tex2Dproj( albedoBuffer, projTexcoords.xyzw );

backgroundNormal = tex2Dproj( normalBuffer, projTexcoords.xyzw );

result = saturate( dot( backgroundNormal.xyz, lightVector ) ) * backgroundAlbedo;
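The projective texture coordinates are the least obvious bit, so here is the arithmetic as a small Python sketch. It assumes clip-space x and y run from -w to +w, that buffer row 0 corresponds to v = 0 (this orientation differs per API), and nearest-neighbour sampling; `tex2dproj_lookup` is a hypothetical stand-in for what `tex2Dproj` does, not the real intrinsic.

```python
def projective_texcoords(clip_pos):
    """Same math as the vertex shader: (xyz + w) * 0.5, with w passed along."""
    x, y, z, w = clip_pos
    return ((x + w) * 0.5, (y + w) * 0.5, (z + w) * 0.5, w)

def tex2dproj_lookup(buffer, proj):
    """Divide by the last component, then fetch the nearest pixel of the buffer."""
    s, t, _, q = proj
    u, v = s / q, t / q                          # now in the 0..1 range on screen
    height, width = len(buffer), len(buffer[0])
    px = min(int(u * width), width - 1)
    py = min(int(v * height), height - 1)
    return buffer[py][px]

# A 4x4 "albedo buffer" where every pixel stores its own (x, y) coordinate.
albedo_buffer = [[(x, y) for x in range(4)] for y in range(4)]
center = tex2dproj_lookup(albedo_buffer, projective_texcoords((0.0, 0.0, 0.5, 2.0)))
```

The `* 0.5` plus the divide by w turns the -1..+1 screen range into 0..1 texture coordinates, so a pixel of the light volume fetches exactly the buffer pixel it covers.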

Since every pixel of a light shape only gets rendered once, you also fixed that “overdrawing” problem. No matter how many walls there are behind each other, only the front pixel will be used.

Thus, no overdraw, only necessary pixels getting drawn, no need to render geometry again for each light… One hell of a lighting performance boost! And it probably results in even simpler code, as you can get rid of the sorting mechanisms. You don’t have to know which triangles lightX affected, just draw its shape and the rasterizer will do the magic.
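Putting the light pass together, a rough CPU sketch could look like this. It is simplified under stated assumptions: the G-buffer is a flat list of pixels with a world position (in practice reconstructed from depth), albedo and light color are single scalars, and a plain range check stands in for the rasterized light volume. All names are hypothetical.

```python
import math

def deferred_lighting(gbuffer, lights):
    """Sum the diffuse contribution of every light per pixel, geometry untouched."""
    result = [0.0] * len(gbuffer)
    for light in lights:
        for i, px in enumerate(gbuffer):
            to_light = [l - p for l, p in zip(light["pos"], px["pos"])]
            dist = math.sqrt(sum(c * c for c in to_light))
            if dist == 0.0 or dist > light["range"]:
                continue                          # pixel lies outside the light volume
            n_dot_l = sum(n * c / dist for n, c in zip(px["normal"], to_light))
            result[i] += max(0.0, n_dot_l) * px["albedo"] * light["intensity"]
    return result

gbuffer = [
    {"pos": (0, 0, 0), "normal": (0, 1, 0), "albedo": 0.5},
    {"pos": (10, 0, 0), "normal": (0, 1, 0), "albedo": 1.0},
]
lights = [
    {"pos": (0, 2, 0), "range": 4.0, "intensity": 1.0},
    {"pos": (0, 1, 0), "range": 4.0, "intensity": 0.5},
]
lit = deferred_lighting(gbuffer, lights)
```

Nowhere does this loop ask which entity or triangle a pixel came from. That is the whole point: the lights only look at the buffers.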

All in all, Deferred Rendering is a cleaner and faster way to do lighting. But as always, everything comes at a price…

- Does not work for translucent objects. I'll tell you why next time

- Filling the buffers takes some energy. Not really a problem on modern hardware though.

- Dealing with multiple ways of lighting (BRDF, Anisotropic, …) requires some extra tricks. Not impossible though. In my engine each pixel has a lighting technique index which is later on used to fetch the proper lighting characteristics from an atlas texture in the lighting shader.
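To give an idea of how such a technique index could work, here is a purely hypothetical sketch. It assumes the atlas is a small 2D table with one row per lighting technique and columns indexed by the N·L value; the actual atlas layout in the engine is not described here and may well differ.

```python
def lookup_lighting(atlas, technique_index, n_dot_l):
    """Pick the row of the pixel's technique, then the column matching N.L."""
    row = atlas[technique_index]
    clamped = max(0.0, min(1.0, n_dot_l))
    column = min(int(clamped * len(row)), len(row) - 1)
    return row[column]

# Made-up atlas: row 0 is a soft ramp, row 1 a hard two-tone (toon-like) ramp.
atlas = [
    [0.0, 0.25, 0.5, 1.0],
    [0.2, 0.2, 0.9, 0.9],
]
soft = lookup_lighting(atlas, 0, 1.0)
toon = lookup_lighting(atlas, 1, 0.6)
```

The lighting shader stays a single program this way; the per-pixel index only decides which row of the table it reads.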

Does that “Inferred Rendering” fix all those things? Nah, not really. But I have other reasons to copy some of its tricks. But that will be next time, Gadget.