T22 uses a technique that has no name, because I made it myself (though others probably tried similar things). Or well, I copied ideas from existing techniques, mangled them with my own countless attempts, used some other advice, and added some poohah on top. But if I had to give it a name... "Ambi-Volume-Texture-Cone-Tracing"? Doesn't sound very neat... Anyway, let's explain how it works. Oh, and don't expect a perfect solution. It doesn't look as good as Crassin's VCT (Voxel Cone Tracing), although you could say they "cheated" a bit with powerful computers and a simple scene. Sponza Theatre a simple scene?! Sure, it has quite a lot of polygons, but it doesn't have very thin walls (which are prone to light-leaks), and above all, it's just a single scene. In practice, game-worlds are much bigger. For example, you can't bake a whole GTA city into (GPU) memory, so you have to chop the world into chunks and only process the geometry near the camera. That is possible with VCT, but updating the octree this technique requires is very expensive.

In practice, all realtime G.I. solutions have problems. Either they don't work very well with moving objects (imagine a door closing & blocking light) or destructible geometry, they only work on small fixed worlds, they require too much power, lack accuracy, or just look terrible. Or all of that. Pre-calculated solutions on the other hand are much faster and offer better quality, but well, they aren’t realtime. That means the lighting won't adapt for shit when the environment or lamps change. Unless you know exactly which lights will change and pre-calculate multiple situations. Don't know how GTA V handles G.I., but I can imagine they bake the lighting for a couple of scenarios (day, night, cloudy, ...), based on dominant lightsources such as the Sun, Moon, and pre-defined (street)lights.

For T22 I'm urged to fall back on pre-baked lighting as well. Simply because it looks better and performs better. And an often forgotten argument: it gives a better degree of control. Especially in an unreal horror game like this, you want to play with atmospheric settings. Make rooms much darker than they should be, use high contrasts, or add a stinky orange ambient to a corridor while there are no orange lamps at all. With realtime G.I., it's hard to tell what the result will be exactly. Especially if the results aren't 100% accurate either. Sometimes it looks good, sometimes it doesn’t and you’ll be adding cheap tricks, completely bypassing the (expensive!) G.I. But nevertheless... making realtime G.I. for games is as attractive as finding the magic recipe for gold. It's a prestige thing.

Sponza Theatre, not rendered but a real photo for a change. Notice the whole structure is more or less lit, even though the light only comes in via the open roof. That's the kind of lighting we want to achieve. Realtime.

Not quite yet the real thing, but still one of the most promising realtime G.I. attempts so far, Voxel Cone Tracing by Cyril Crassin. Seems UDK 4 is adopting this technique as well.

Voxel-Cone-Tracing convinced me again that realtime G.I. is possible, and with good results. So I gave it a try. The idea in a nutshell:

1- Voxelize geometry - put geometry in octree (in GPU memory, using Compute Shaders)

2- Light voxelized geometry - add outgoing light in octree cells

3- Mipmap octree

4- For each pixel on the screen, sample bounced light with a couple of cone-shaped rays from the mipmapped octree

1- Voxelize your geometry

Like making a lower-resolution Lego/Minecraft block variant of your scenery & objects. Store those blocks in an octree, a tree structure for storing and searching 3D data. To avoid needing tons of memory, the octree will reduce resolution over distance (thus bigger blocks for distant geometry).

Excuse the lousy drawing, I was too lazy to get the perspective right. But you can see roughly how a scene would get "voxelized". By rendering the "whole" scene (whatever could affect the GI in your view) in slices, you can get the whereabouts of your geometry and its properties, such as normal and diffuse/specular/emissive colors. Note that I skipped the plant leaves and puppet. Of course you can voxelize those too, but be aware! It would constantly change the octree as the puppet moves.

Why Voxelize & Octrees? VCT is based on raymarching. Any given pixel on the screen will sample incoming light by sending some (cone)rays into the scene. We need to check where our rays intersect geometry. Octrees are an efficient way to quickly test collisions. Memory is limited though, so we can't store each and every tiny molecule. Instead we store bigger blocks and use an octree so we only reserve memory for locations that actually contain geometry, rather than each and every cubic meter. Once we have an octree, we can insert geometry data, light fluxes, and other data we may need to know later on.
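To make the "only reserve memory where there is geometry" idea a bit more tangible, here is a minimal CPU-side sketch in Python. It is not how VCT or T22 does it (those run on the GPU, and VCT uses a real octree); a plain dictionary keyed by cell coordinates stands in for the sparse structure. The function name `voxelize_points` and the `(position, color)` sample format are made up for this illustration.

```python
import math

def voxelize_points(samples, voxel_size):
    """Bin (position, color) surface samples into a sparse voxel dictionary.

    Hypothetical helper: keys are integer (x, y, z) cell coordinates, values
    are the average RGB color of all samples that fell into that cell.
    Empty space never gets an entry, so it costs no memory.
    """
    sums = {}
    for (x, y, z), (r, g, b) in samples:
        key = (math.floor(x / voxel_size),
               math.floor(y / voxel_size),
               math.floor(z / voxel_size))
        n, sr, sg, sb = sums.get(key, (0, 0.0, 0.0, 0.0))
        sums[key] = (n + 1, sr + r, sg + g, sb + b)
    # Average the colors of all samples that landed in the same cell.
    return {key: (sr / n, sg / n, sb / n)
            for key, (n, sr, sg, sb) in sums.items()}

# Two samples share a cell, a third one lands further along the X axis.
samples = [((0.1, 0.1, 0.1), (1.0, 0.0, 0.0)),
           ((0.2, 0.3, 0.4), (0.0, 0.0, 1.0)),
           ((1.6, 0.1, 0.1), (0.0, 1.0, 0.0))]
voxels = voxelize_points(samples, voxel_size=0.5)
```

A real octree adds the hierarchy on top of this (coarse cells that split into 8 children), which is what makes distance-based resolution and fast ray tests possible.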

2- Compute the direct lighting on those voxels

As you would normally apply lights on 3D stuff, you compute the direct lighting for every voxel. You calculate how much light a voxel would reflect back in the world ("diffuse light"), so another surface can pick it up again (indirect lighting).

Papers never explain how they handle lots of lights; usually you only see 1 or 2 big lightsources, that stupid Cornell box, or an outdoor scene with the sun only. But you could handle multiple lights of course, and the good news is that there are relatively few voxels (unless you use very fine resolutions). For each octree cell that contains geometry, you calculate the incoming fluxes from the 6 main directions. Eventually you could use Spherical Harmonics to reduce the number of floats needed to store this information. So, now your octree cells contain this info: geometry yes/no (or density%)? Diffuse RGB reflected into the -X, +X, -Y, +Y, -Z and +Z directions. In other words, you have a low-res representation of your world, directly lit, in your video card memory. This is updated each cycle btw.
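As a rough illustration of what "light a voxel and store the result per axis direction" could look like, here is a small Python sketch. The details are assumptions, not taken from any paper or from T22: point lights with inverse-square falloff, plain Lambert shading, no shadows, and a simple `max(0, normal · axis)` weight to split the reflected light over the six directions. The name `light_voxel` is hypothetical.

```python
import math

# The six main directions, in the order -X, +X, -Y, +Y, -Z, +Z.
AXES = [(-1, 0, 0), (1, 0, 0), (0, -1, 0), (0, 1, 0), (0, 0, -1), (0, 0, 1)]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def light_voxel(position, normal, albedo, lights):
    """Return six RGB tuples: diffuse light reflected towards each axis.

    lights is a list of (light_position, light_rgb) point lights.
    Shadows are ignored here; a real renderer would test visibility.
    """
    irradiance = [0.0, 0.0, 0.0]
    for light_pos, light_rgb in lights:
        to_light = [l - p for l, p in zip(light_pos, position)]
        dist_sq = dot(to_light, to_light)
        if dist_sq == 0.0:
            continue  # light sits exactly on the voxel, skip it
        n_dot_l = max(0.0, dot(normal, to_light) / math.sqrt(dist_sq))
        for i in range(3):
            irradiance[i] += light_rgb[i] * n_dot_l / dist_sq
    reflected = [albedo[i] * irradiance[i] for i in range(3)]
    # A surface only reflects towards directions on the side of its normal.
    return [tuple(c * max(0, dot(normal, axis)) for c in reflected)
            for axis in AXES]

# A floor voxel facing up, lit by one lamp two units above it.
faces = light_voxel((0, 0, 0), (0, 1, 0), (1.0, 0.5, 0.0),
                    [((0, 2, 0), (4.0, 4.0, 4.0))])
```

In this example only the +Y entry ends up non-zero, since the floor faces straight up.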

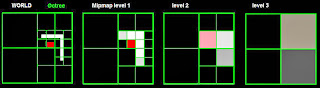

3- Mipmap the octree

On a 2D texture, mipmapping means you halve the size, and for each new pixel you take the average of 4 pixels from the original resolution. On a 3D texture, the idea is the same, except that you take pixels from the layers above and/or below into account as well (so 8 pixels instead of 4). And well, you could do the same for an octree. When constructing an octree, you subdivide a cell into 8 smaller cells if it contains anything of interest. So you can do the reverse thing as well: 1 to 8 Minecraft blocks get replaced with 1 bigger Minecraft block, made of the averages.

Why do we need to mipmap? So we can "cone trace". See next step.
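The averaging itself is simple. A minimal Python sketch on a dense cubic grid (nested lists holding one float per cell, side length a power of two) could look like this. On the GPU this would be a 3D texture or an octree instead, and the function names are just for illustration.

```python
def downsample(grid):
    """Halve a cubic grid (indexed grid[z][y][x]) by averaging 2x2x2 blocks."""
    n = len(grid) // 2
    return [[[sum(grid[2 * z + dz][2 * y + dy][2 * x + dx]
                  for dz in (0, 1) for dy in (0, 1) for dx in (0, 1)) / 8.0
              for x in range(n)]
             for y in range(n)]
            for z in range(n)]

def build_mip_chain(grid):
    """Return [level 0, level 1, ...] down to a single 1x1x1 cell."""
    chain = [grid]
    while len(chain[-1]) > 1:
        chain.append(downsample(chain[-1]))
    return chain

# A 4x4x4 grid where the left half (x < 2) is lit and the right half is dark.
grid = [[[1.0 if x < 2 else 0.0 for x in range(4)]
         for y in range(4)]
        for z in range(4)]
chain = build_mip_chain(grid)
# chain[1] is 2x2x2 (left cells 1.0, right cells 0.0), chain[2] is one cell of 0.5.
```

Note that empty cells simply count as zero here, so a half-empty block ends up half as bright.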

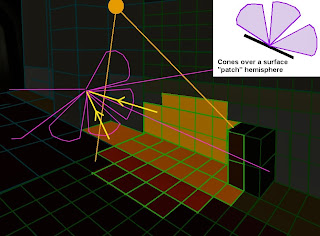

4- For each pixel on your screen, sample indirect light with Cone Tracing

The key to G.I. is gathering light coming from all directions, bounced off by other surfaces. Simple theory, but extremely hard to do in realtime. A major problem is that we need to sample light from an infinite number of directions. Imagine you were a piece of concrete wall; everything you can see around you is reflecting light towards you.

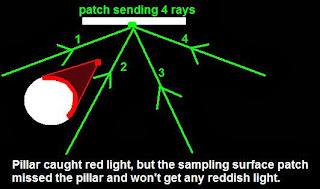

At least every pixel on your screen needs to gather this information, but we can only use a very limited amount of rays per pixel, as raytracing or raymarching is expensive in general. Just using 16 rays for example will lead to “undersampling”, as you might have missed vital parts from the surrounding scene.

We can reduce this a bit by letting each pixel look in slightly different directions and blurring the end results. But the results are still grainy (as you often see with older 3D software), and you need an awful big fucking amount of rays to get real proper results --> slow.

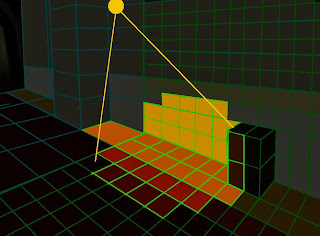

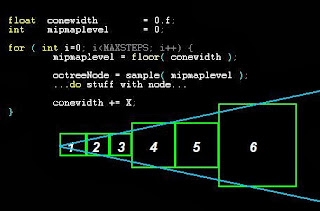

The idea behind cone-shaped rays is that you only need a few rays to sample a lot. For each couple of steps that the ray marches forward, we look into a lower resolution of the mipmap we made in step 3. So, as we travel further and further, we sample averaged light from bigger blocks in the octree. Of course, the accuracy will suffer, but it's an efficient way to avoid missing important lightsources & win speed. After all, we need a realtime solution, and big speed drops won't justify the few benefits we gain compared to much faster (and nicer, and easier) pre-baked solutions. Plus, fortunately, G.I. means "low frequency lighting", so the result is a blur of many incoming light fluxes anyway. For an ordinary spectator, it’s hard to figure out how G.I. should look, so it's forgivable to make errors… usually.

By sampling from lower (averaged, blurrier) levels over time, we get a cone shaped ray.
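A common way to pick the mipmap level is to match the voxel size to the width of the cone at the current distance. The Python sketch below does that and marches one cone, blending samples front-to-back. It is a simplified illustration, not Crassin's or T22's code: the `sample(position, level)` callback (returning an RGB tuple and an opacity between 0 and 1) is a hypothetical stand-in for a texture or octree lookup, and `direction` is assumed to be normalized.

```python
import math

def cone_mip_level(distance, half_angle, voxel_size, max_level):
    """Mip level whose cell size roughly matches the cone width at `distance`."""
    diameter = 2.0 * distance * math.tan(half_angle)
    level = math.log2(max(diameter, voxel_size) / voxel_size)
    return min(level, float(max_level))

def trace_cone(origin, direction, half_angle, voxel_size,
               max_level, max_distance, sample):
    """March one cone and return (gathered RGB, accumulated occlusion)."""
    color = [0.0, 0.0, 0.0]
    occlusion = 0.0
    distance = voxel_size  # start one voxel away to avoid sampling ourselves
    while distance < max_distance and occlusion < 1.0:
        level = cone_mip_level(distance, half_angle, voxel_size, max_level)
        position = tuple(o + d * distance for o, d in zip(origin, direction))
        rgb, alpha = sample(position, level)
        weight = (1.0 - occlusion) * alpha  # nearer samples hide farther ones
        for i in range(3):
            color[i] += weight * rgb[i]
        occlusion += weight
        distance += voxel_size * 2.0 ** level  # bigger cells, bigger steps
    return tuple(color), occlusion

# A fake scene that is solid red everywhere: the first sample blocks the cone.
result = trace_cone((0, 0, 0), (0, 0, 1), math.radians(30), 1.0, 5, 100.0,
                    lambda pos, lvl: ((1.0, 0.0, 0.0), 1.0))
```

Because the step size grows along with the mip level, a wide cone needs only a handful of samples to cover a long distance, which is where the speed comes from.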

So, each pixel on the screen will fire a couple of those "cones". We check where the cones hit geometry in the mipmapped octree, and then sample the light that was bounced into our (global) direction. The advantage of screenspace techniques is that we sample for all pixels visible on the camera; nothing more, nothing less. Eventually we can do it on a lower resolution to get a significant speed gain, and then upscale the image with some smart blurring. Another advantage is that any pixel on the screen can gather light, including objects that weren't involved in the GI pipeline so far.

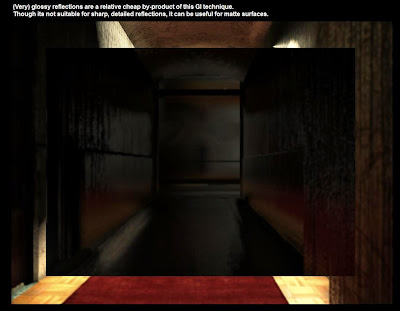

Glossy reflections

A cool feature of VCT is the ability of adding 1 extra ray to sample specular lighting, giving glossy reflections. The cone-angle would depend on the material glossiness. Highly specular surfaces would use very narrow cones, meaning that we keep sampling from the higher-resolution mipmap levels for a longer time, leading to less blurry results.
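For the specular cone you need two things: the mirrored view direction and a cone angle. The reflection formula below is the standard one; the mapping from glossiness to angle is purely an assumption for illustration (a linear blend between two made-up limits of 1 and 30 degrees), and real engines will use whatever fits their material model.

```python
import math

def reflect(incident, normal):
    """Mirror `incident` around a unit-length `normal`: r = d - 2(d.n)n."""
    d = sum(i * n for i, n in zip(incident, normal))
    return tuple(i - 2.0 * d * n for i, n in zip(incident, normal))

def specular_cone_half_angle(glossiness,
                             min_angle=math.radians(1.0),
                             max_angle=math.radians(30.0)):
    """Hypothetical mapping: glossiness 0 gives a wide cone, 1 a narrow one."""
    g = min(max(glossiness, 0.0), 1.0)
    return max_angle + (min_angle - max_angle) * g

# Looking down at a floor at 45 degrees; the reflection goes up at 45 degrees.
direction = reflect((1.0, -1.0, 0.0), (0.0, 1.0, 0.0))
angle = specular_cone_half_angle(0.9)  # fairly glossy, so a narrow cone
```

A narrow cone stays on the detailed mipmap levels for longer, so the reflection is sharper but also needs more marching steps.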

T22 doesn't use VCT, but the same technique can be used for sampling glossy reflections. The advantage is that it works on any surface, and it can sample behind the camera. Disadvantages are the low level of detail and the lack of G.I. in the portion that gets reflected; voxels that were not directly lit won't appear in the reflections either.

================================================================

T22 Adjustments

Cool and the Gang. But I said T22 doesn't exactly use VCT. What is wrong with it then? Well, 4 things:

A: The octree requires a lot of memory (you can win a lot by reducing the level of detail though)

B: Updating the octree is very costly

C: Traversing the octree to sample data during the raymarch isn’t that fast

D: Goddamn difficult to implement correctly. I might be too dumb to do it all right.

The octree is needed to store the world representation, required for raymarching and gathering light. It can help speed up the raymarching, and moreover, it’s pretty much the only way to store a lot of geometry up to a relatively detailed level. But at the same time, this octree is the Achilles heel of VCT: coding it is nasty, and maintaining it is expensive, especially when having to deal with big, roaming worlds. Another in-detail issue is sampling. When raymarching, we have to dive into the octree and check which cell we are sampling from at any given XYZ position. It’s not that bad, but there are faster ways.

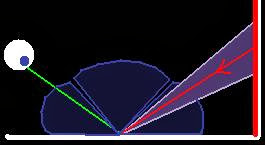

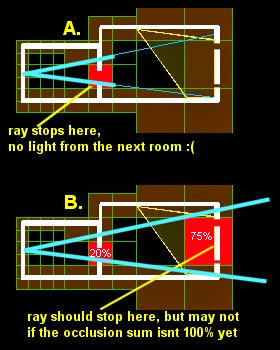

When I finally got VCT "working" in T22, the framerate crumbled from a lousy ~18 fps to a terrible ~4 fps. Some nuance: my code probably isn't very well optimized, and it runs on an old 2008 laptop. Anyhow, such a low framerate is unacceptable, and the results weren't exactly great either. The problem arises when mipmapping. Tiny blocks are combined into bigger blocks, but what if 50% of the blocks are "vacuum" (not filled with geometry), and the other half are walls, floors, or whatever? I decided to give a density value to cells. Now later on, when raymarching, you have to decide where and when to sample. If we hit a 50% occluding block, should we immediately stop sampling, or continue until the sum of occlusions is 100%? The first option prevents light-leaks, but it doesn't work very well in narrow environments. Rays will get stopped at narrow passages (doors, windows, ...), so light from a bright corridor won't get into a darker room. That is exactly NOT what we want to achieve, G.I. should bring light to darker places!

The second option isn't optimal either, as it may happen that we stop too late (or not at all), and thus gather light from places we can't actually see. The VCT demos probably hide this by using a high resolution octree, sufficient rays per pixel (the less narrow the cones, the fewer errors), and who knows what else.
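The difference between the two stopping rules is easy to see on a toy example. In the Python sketch below, a ray is just a list of `(light, density)` samples ordered from near to far; both function names and the scalar "light" value are simplifications made up for this illustration.

```python
def gather_stop_at_first_hit(samples):
    """Rule 1: stop at the first sample that contains any geometry at all."""
    for light, density in samples:
        if density > 0.0:
            return light
    return 0.0

def gather_accumulate(samples):
    """Rule 2: keep marching until the summed occlusion reaches 100%."""
    total_light = 0.0
    occlusion = 0.0
    for light, density in samples:
        weight = min(density, 1.0 - occlusion)  # never exceed 100% in total
        total_light += weight * light
        occlusion += weight
        if occlusion >= 1.0:
            break
    return total_light

# A ray through a doorway: a half-filled (unlit) door frame cell,
# some empty space, then a brightly lit wall in the next room.
doorway = [(0.0, 0.5), (0.0, 0.0), (1.0, 1.0)]
blocked = gather_stop_at_first_hit(doorway)  # 0.0, the door frame eats the ray
leaky = gather_accumulate(doorway)           # 0.5, half the wall's light gets in
```

Swap the door frame for a thin wall that only fills half of its (coarse) cell, and the second rule happily returns light from a room we can't see. That is the light-leak in a nutshell.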

Got to mention that the same light-leaking problem occurs in pretty much any grid/cell based solution. CryEngine's "Light Propagation Volumes" has this problem, and so does T22's "Ambi-Volume-Texture-Tracing" or whatever you want to call it. Most of the time, this isn't that much of a problem since it's hard to tell whether the G.I. is incorrect anyway. But at some points, it leads to undesired situations. As said before, you have little control over realtime G.I., so masking such errors can be nasty. And if we are so busy with powders and creams to hide ugliness... shouldn't we just drop the whole technique then? I wouldn't be surprised if games like Crysis actually (partially) did.

Anyway, what we did do in T22 is simplify the whole VCT process and get rid of octrees. I picked up something I did before, voxelizing the world into 3D textures instead of a complicated octree, and then adopted the cone-trace idea to fix undersampling issues by mipmapping the 3D textures. So the pipeline is pretty much the same as VCT:

1- Voxelize geometry

2- Light voxelized geometry - put results in 3D textures

3- Mipmap 3D textures

4- For each pixel on the screen, sample bounced light with a couple of cone-shaped rays from the mipmapped 3D textures

A whole lot of effort for a bit of blurry light smeared all over the corridors...

The advantage of 3D-textures is that you can directly insert voxels in the right places by simply using their 3D world positions. Same thing for reading the textures: all we need is a coordinate. And because the march sequences usually read pixels located at the same spots, we benefit from hardware caching. Raymarching through a 3D texture is faster than traversing an octree, and much easier to work with. But it also has disadvantages: 3D-textures consume a lot of memory. This will force you to reduce the level of detail (thus bigger voxel lego blocks, less sharp reflections, bigger hit detection inaccuracies), and keep the boundaries close. That means the 3D textures will only cover a limited (cubic) space around the camera. Distant geometry must fall back on other GI methods.
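The "all we need is a coordinate" part boils down to a bit of arithmetic. A minimal Python sketch, assuming the 3D texture covers an axis-aligned box in world space and has the same resolution along every axis (the function and parameter names are hypothetical):

```python
def world_to_texel(world_pos, volume_min, volume_size, resolution):
    """Map a world position to integer texel coordinates in a 3D texture.

    volume_min is the corner of the covered box, volume_size its extent per
    axis. Returns None when the position falls outside the covered volume,
    in which case some other GI method has to take over.
    """
    texel = []
    for p, lo, size in zip(world_pos, volume_min, volume_size):
        u = (p - lo) / size  # normalized coordinate, 0..1 inside the box
        if u < 0.0 or u >= 1.0:
            return None
        texel.append(int(u * resolution))
    return tuple(texel)

# A 32 meter box centered on the origin, stored in a 64^3 texture.
box_min, box_size = (-16.0, -16.0, -16.0), (32.0, 32.0, 32.0)
center = world_to_texel((0.0, 0.0, 0.0), box_min, box_size, 64)    # (32, 32, 32)
outside = world_to_texel((20.0, 0.0, 0.0), box_min, box_size, 64)  # None
```

In a shader you would usually skip the integer step and feed the normalized coordinate straight into the texture fetch, letting the hardware do the filtering.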

Likely, 75% or more of your world will be vacuum, unfilled space. With octrees, you don't sub-divide cells that don't contain any geometry, keeping the memory consumption low for open spaces. With 3D textures, each cubic meter will require the same amount of pixels, whether it’s filled with geometry or not. Could be devastating for large/outdoor scenes, but note that you can stretch 3D textures over a wider space if needed. When the geometry is stretched over a wider area, you can generally do with a coarser grid as well.
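To get a feeling for the memory cost, a quick back-of-the-envelope calculation helps. The numbers below (a 128^3 texture with 4 bytes per texel) are example values, not T22's actual settings.

```python
def volume_texture_bytes(resolution, bytes_per_texel, with_mipmaps=True):
    """Memory used by a cubic 3D texture, optionally with its full mip chain."""
    total = 0
    size = resolution
    while size >= 1:
        total += size ** 3 * bytes_per_texel
        if not with_mipmaps:
            break
        size //= 2
    return total

base = volume_texture_bytes(128, 4, with_mipmaps=False)  # 8,388,608 bytes = 8 MiB
full = volume_texture_bytes(128, 4)                      # 9,586,980 bytes with mips
double = volume_texture_bytes(256, 4, with_mipmaps=False)  # 8 times `base`
```

The mipmaps only add about one seventh on top. The real killer is that doubling the resolution (or the covered distance at the same voxel size) multiplies the memory by 8, and you may need several such textures, for example one per stored direction.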

Well, T22 will be mostly indoor, so we aren't dealing with huge spaces. Yet it's still possible we may look beyond the area covered by the 3D textures. So what I did is bake the GI into the geometry vertices (note that the world is tessellated to some degree). Lower-end computers or distant geometry will use the pre-baked GI. And otherwise we compute it realtime. Or both. Medium-end settings will combine a realtime bounce with a pre-baked bounce. So that makes it half realtime. Or something. Sounds cheap maybe, but hey, got to think of something. Also VCT would eventually bump into its limits, as you can't make a super-octree that covers the entire world. I believe the relatively small Sponza Theatre uses many hundreds of megabytes (or even over a gig) of video memory already, though that is a highly detailed octree. Anyhow, you need a back-up (this also goes for Light Propagation Volumes).

Another change, or actually cheap hack, I made was in the voxelizing process. Rather than voxelizing the scene each cycle, I pre-calculate the voxels for all geometry. Each chunk of the world has its own array of pre-generated voxels (voxel = a position, color, normal, ...). Dynamic objects have pre-calculated voxels as well. As the object moves or rotates, the voxels transform with it. Note that small objects don't have voxels; only objects big enough to really make a difference in the lighting, or doors that block light from another room.
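Moving pre-calculated voxels along with an object is just a matter of applying the object's transform to each voxel before inserting it. A simplified Python sketch, assuming the object only rotates around the vertical (Y) axis and translates, like a door or a sliding crate. The voxel layout `(position, normal, color)` and the function name are made up for illustration; a real engine would use the object's full 4x4 matrix.

```python
import math

def transform_voxels(local_voxels, yaw, translation):
    """Rotate pre-baked voxels around the Y axis by `yaw` radians, then move them."""
    c, s = math.cos(yaw), math.sin(yaw)
    tx, ty, tz = translation
    world_voxels = []
    for (px, py, pz), (nx, ny, nz), color in local_voxels:
        position = (c * px + s * pz + tx, py + ty, -s * px + c * pz + tz)
        normal = (c * nx + s * nz, ny, -s * nx + c * nz)  # normals only rotate
        world_voxels.append((position, normal, color))
    return world_voxels

# One voxel of a door, stored relative to the door's hinge.
door = [((1.0, 0.0, 0.0), (0.0, 0.0, 1.0), (0.5, 0.3, 0.1))]
closed = transform_voxels(door, 0.0, (2.0, 0.0, 5.0))
opened = transform_voxels(door, math.pi / 2, (2.0, 0.0, 5.0))  # swung 90 degrees
```

The transformed positions can then be written into the 3D textures the same way as the static world voxels.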

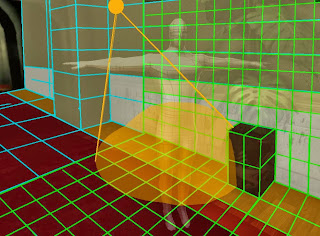

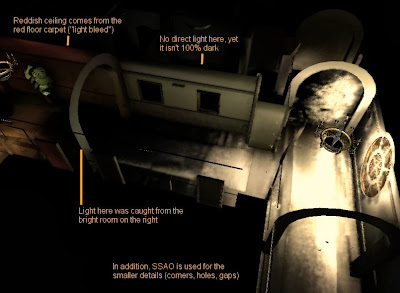

The final raymarching step works pretty much the same as in VCT, except that we sample directly from 3D textures (much simpler). Each few steps we march, we sample from a lower detailed mipmap level to get the cone-effect. And we can also make glossy reflections if we like, although the sharpness isn't as great as VCT's, since the 3D textures contain less detail than octrees. Despite the speed gains, this process still requires a lot of horsepower. So I do it on a much lower resolution. The situation is somewhat workable on my old laptop, but the result is too blurry. You don't see it that much in bright areas, but darker parts that don't catch direct light are smudgy.

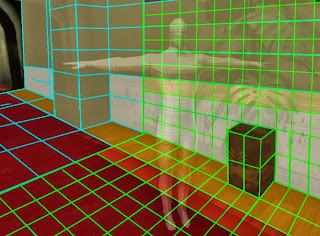

Note how the red carpet reflects on the walls. Another nice thing about screenspace techniques is that it will also work naturally for decals we pasted on top of the geometry, such as the top-right hole.

Too bad the buffer is blurry though. Possibly I could get better quality by using a smarter upscale process (UDK does that for their VCT variant), but I'm more hoping my next computer can cope with a higher resolution.

================================================================

Conclusion

The conclusion is that I don't have a conclusion yet. The results I have now are the best I've had so far, but still crappy compared to a good pre-baked result. The 2 biggest problems are the blur in dark areas (I already smell the complaints when the next demo is released), and light-leaks + other imperfections. The results are sometimes unpredictable / incorrect. In other words, I still have to add secondary lights and other tricks to get the lighting as desired. Probably I can fix some bugs with fine-tuning. And the blur can be reduced by using a higher resolution for the final screenspace gathering step. My desktop is dead so I haven't tried T22 on a faster computer for a year, but another guy from the team had proper framerates (40+), so I expect a modern computer can do miracles when it comes to reducing the blur. It’s very necessary, because despite high-res textures, T22 isn’t sharp compared to most modern games. The G.I. and somewhat poor SSAO are part of the cause.

The real question is, how badly does T22 really need realtime G.I.? Indoor areas aren't much affected by day/night cycles, you won't destroy walls, and there is little movement in general. On the other hand, typical dark horror areas may benefit from fully dynamic lighting when playing with flashlights, damaged lamps, or torches. The new UDK engine (which uses VCT of some sort) seems promising when it comes to G.I., but I still wonder how good it really is. Is the effect correct enough, fast enough, flexible enough, and adjustable enough to be used in any situation? I doubt it. Besides, the goal of T22 shouldn't be beating a UDK or Crysis engine on tech features, because that is never gonna happen. The game just has to look good. Or scary actually, whether that requires realistic graphics or not. If pre-baked solutions do a better job achieving that, we should use those instead. Yet it's so attractive to keep trying realtime G.I., and as the hardware keeps getting faster and faster, we might be able to upscale the current solutions to better looking, more accurate versions...

So, I can't decide really. Which isn't good, because such techniques have a big impact on the end results. Each time we jump to another G.I. system, the looks of the rooms we did so far will (completely) change, and have to be tuned again. "Fortunately" we didn't make dozens of rooms yet, but at some point we have to decide and trust the technique will still look ok X years later when the game is supposed to be finished. It sucks to have multiple options.